Atomically Incrementing JSON Column Values In MySQL 5.7.32 And Lucee CFML 5.3.7.47

In the last few days, I've started to look at the JSON column type that I can now leverage in MySQL 5.7.32. It's pretty cool that MySQL now allows for JSON (JavaScript Object Notation) structures; but, the offering in MySQL 5.7.x isn't as robust as it is in more specialized databases like MongoDB or Redis. That said, MySQL appears to provide most of the necessary building blocks in its initial implementation. And, one feature that I wanted to see if I could implement in MySQL 5.7.32 is the ability to atomically increment a JSON column value in Lucee CFML 5.3.7.47.

In databases like MongoDB and Redis, there are functions specifically designed for atomic maths. For example, Redis has an INCR key operation; and, MongoDB has an $inc operation. But, in MySQL 5.7.32 there's really only getting and setting values using functions like JSON_EXTRACT() and JSON_SET(), respectively. However, since MySQL is inherently atomic around updates on a single row, we don't have to worry about the transactional nature of our queries the way you might in a MongoDB or Redis database (which is partly why those database have so many specialized functions).

For example, in MySQL, you can perform atomic maths on a column in an UPDATE query by doing something as simple as:

columnValue = ( columnValue + 1 )

Since a SQL query can reference its own row values, we can atomically increment a column using the current column value right in our update statement.

To perform an atomic increment on a JSON value, I'm going to use the same exact approach. However, since the JSON structures are more complicated than a simple INT column value, our update statements are going to be quite a bit more complicated as well. But, again, the basic premise is the same.

To explore this concept, I'm going to create a user_stats table that can store an arbitrary number of metrics relating to a user. The table only has two columns:

userID- the target user for our metrics collection. This is the primary key for this database record.stats- theJSONpayload that will hold the arbitrary key-value metrics that we want to update / increment atomically.

CREATE TABLE `user_stats` (

`userID` int(10) unsigned NOT NULL,

`stats` json NOT NULL,

PRIMARY KEY (`userID`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

Since the stats JSON column can hold an arbitrary set of metrics that evolves over time, we can't make any assumptions about whether or not a record exists; and, if a record does exist, whether or not it even contains the metric we're about to update. As such, we're going to rely on MySQL's ON DUPLICATE KEY UPDATE syntax to perform an atomic "upsert". Meaning, we're going to attempt an INSERT on every metric operation; and, if that operation fails (due to a primary-key violation), we're going to fall-back to an UPDATE.

To try this out, I created a ColdFusion component - UserStatsGateway.cfc - that provides an abstraction layer over performing these more complicated JSON column manipulations. In the following ColdFusion code, pay special attention to the following methods:

incrementMetric( userID, metricName )incrementMetricBy( userID, metricName, metricDelta )setMetric( userID, metricName, metricValue )

... as these are the methods providing our atomic updates on the JSON payloads:

component

output = false

hint = "I provide data persistence methods for user stats."

{

/**

* I delete the stats associated with the given user.

*

* @userID I am the user whose stats are being deleted.

*/

public void function deleteStatsByUserID( required numeric userID ) {

```

<cfquery name="local.results">

DELETE

s

FROM

user_stats s

WHERE

s.userID = <cfqueryparam value="#userID#" sqltype="integer" />

</cfquery>

```

}

/**

* I get the stats associated with the given user.

*

* @userID I am the user whose stats are being accessed.

*/

public query function getStatsByUserID( required numeric userID ) {

```

<cfquery name="local.results">

SELECT

s.userID,

s.stats

FROM

user_stats s

WHERE

s.userID = <cfqueryparam value="#userID#" sqltype="integer" />

</cfquery>

```

results = results.map(

( row ) => {

return({

userID: row.userID,

// The calling context should always deal in STRUCTS. It should not

// know that the stats go through stringification.

stats: deserializeJson( row.stats )

});

}

);

return( results );

}

/**

* I increment the given metric for the given user.

*

* @userID I am the user whose stats are being updated.

* @metricName I am the metric being incremented.

*/

public numeric function incrementMetric(

required numeric userID,

required string metricName

) {

incrementMetricBy( userID, metricName, 1 );

}

/**

* I increment the given metric by the given amount for the given user.

*

* @userID I am the user whose stats are being updated.

* @metricName I am the metric being incremented.

* @metricDelta I am the amount by which to increment the metric.

*/

public numeric function incrementMetricBy(

required numeric userID,

required string metricName,

required numeric metricDelta

) {

<!--- NOTE: There is a unique key on (USERID) field. --->

```

<cfquery name="local.results">

SET @userID = <cfqueryparam value="#userID#" sqltype="integer" />;

SET @metricName = <cfqueryparam value="#metricName#" sqltype="varchar" />;

SET @metricPath = <cfqueryparam value="$.#metricName#" sqltype="varchar" />;

SET @metricDelta = <cfqueryparam value="#metricDelta#" sqltype="integer" />;

/**

* By default, we're going to assume that this is an INSERT and that the only

* metric we're going to start with is the given metric with the given value.

* This will work for the very first metric; however, it will throw a

* DUPLICATE KEY error on every subsequent increment. At that point, we're

* going to fall-back to performing an UPDATE of the existing JSON payload.

*/

INSERT INTO

user_stats

SET

userID = @userID,

stats = JSON_OBJECT( @metricName, @metricDelta )

/**

* Once the INSERT fails, we're going to perform an UPDATE. However, just

* because the record exists, it DOES NOT mean that the metric we're about to

* increment exists in the JSON column. As such, we have to use the COALESCE()

* function in order to provide a default value as part of the maths.

*/

ON DUPLICATE KEY UPDATE

stats = JSON_SET(

stats,

@metricPath,

( COALESCE( JSON_EXTRACT( stats, @metricPath ), 0 ) + @metricDelta )

)

;

</cfquery>

```

}

/**

* I set the given metric to the given value for the given user.

*

* @userID I am the user whose stats are being updated.

* @metricName I am the metric being set.

* @metricValue I am the value to which the metric is being set.

*/

public numeric function setMetric(

required numeric userID,

required string metricName,

required numeric metricValue

) {

<!--- NOTE: There is a unique key on (USERID) field. --->

```

<cfquery name="local.results">

SET @userID = <cfqueryparam value="#userID#" sqltype="integer" />;

SET @metricName = <cfqueryparam value="#metricName#" sqltype="varchar" />;

SET @metricPath = <cfqueryparam value="$.#metricName#" sqltype="varchar" />;

SET @metricValue = <cfqueryparam value="#metricValue#" sqltype="integer" />;

/**

* By default, we're going to assume that this is an INSERT and that the only

* metric we're going to start with is the given metric with the given value.

* This will work for the very first metric; however, it will throw a

* DUPLICATE KEY error on every subsequent increment. At that point, we're

* going to fall-back to performing an UPDATE of the existing JSON payload.

*/

INSERT INTO

user_stats

SET

userID = @userID,

stats = JSON_OBJECT( @metricName, @metricValue )

ON DUPLICATE KEY UPDATE

stats = JSON_SET( stats, @metricPath, @metricValue )

;

</cfquery>

```

}

}

As you can see, our JSON operations are performing "upserts". They're just more complicated that normal updates. Whereas a simple INT column can receive the following:

columnValue = ( columnValue + delta )

... when using JSON, we have to get more verbose:

columnValue = JSON_SET( columnValue, path, JSON_EXTRACT( columnValue, path ) + delta )

And, since we don't know if any given metric exists within the JSON payload, we have to use COALESCE() to provide a default value as the basis for our increment. So, in reality, the above statement becomes even more verbose:

columnValue = JSON_SET( columnValue, path, ( COALESCE( JSON_EXTRACT( columnValue, path ), 0 ) + delta ) )

But, this all happens atomically, which is the power of SQL.

Now, to see this in action, I've created a simple demo which increments and sets a few metric values:

<cfscript>

gateway = new UserStatsGateway();

userID = 4;

// Reset the demo.

gateway.deleteStatsByUserID( userID );

// Create the initial stats payload for this user. Since we reset the demo, there

// will NOT be any existing record for this user.

gateway.incrementMetric( userID, "uploadCount" );

gateway.incrementMetric( userID, "uploadCount" );

gateway.incrementMetric( userID, "uploadCount" );

gateway.incrementMetric( userID, "uploadCount" );

gateway.incrementMetricBy( userID, "uploadCount", 3 );

// Add a NEW METRIC to the EXISTING user stats payload.

gateway.incrementMetricBy( userID, "loginCount", 10 );

gateway.incrementMetric( userID, "loginCount" );

gateway.incrementMetric( userID, "loginCount" );

gateway.incrementMetric( userID, "loginCount" );

// Not all metrics necessarily make sense from an increment stand-point. Some metrics

// should be set using some IDEMPOTENT calculation in the business logic such that

// they can be calculated over-and-over without getting out-of-sync. In such cases,

// we may want to perform a direct assignment or the metric value.

gateway.setMetric( userID, "projectCount", 32 );

// Get the stats for the given user.

dump( gateway.getStatsByUserID( userID ) );

</cfscript>

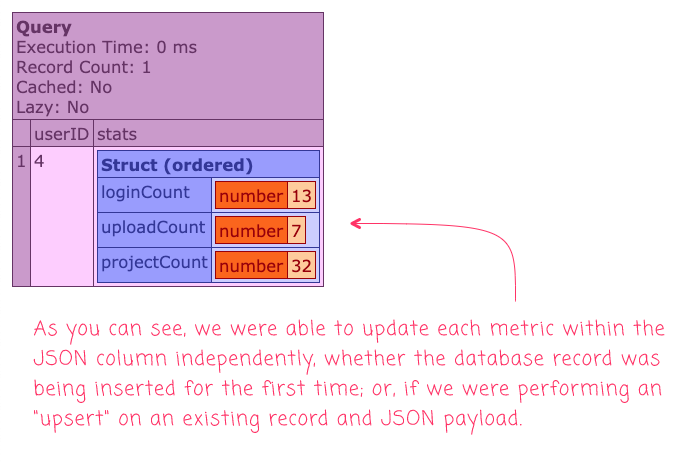

And, when we run the above ColdFusion code, we get the following browser output:

As you can see, we were able to insert new and increment existing metrics within our JSON column payload.

Of course, attempting this in a single, procedural script doesn't necessarily prove that the updates are atomic; after all, the above ColdFusion code sample is single-threaded. To make things a little more interesting, let's see what happens if we start hammering the a single row with atomic increments using Lucee CFML's parallel array iteration features.

In the following test, I'm going to spawn multiple, parallel threads that will all try to increment the same JSON column in parallel. Once the page has finished executing, the value of the metric should match the total number operations performed across all the threads:

<cfscript>

gateway = new UserStatsGateway();

userID = 10;

// Reset the demo.

gateway.deleteStatsByUserID( userID );

// NOTE: Each count here represents the number of operations to execute in a parallel

// thread on the request.

operations = [

10000, 10000, 10000, 10000, 10000, 10000, 10000, 10000, 10000, 10000,

10000, 10000, 10000, 10000, 10000, 10000, 10000, 10000, 10000, 10000

];

// To make sure that the increments are taking place ATOMICALLY, we're going to try

// an perform thousands of concurrent increments on the same record in parallel

// threads using Lucee CFML's AWESOME parallel iteration features.

operations.each(

( count ) => {

loop times = count {

gateway.incrementMetric( userID, "count" );

}

},

true, // Run the operations in PARALLEL thread.

operations.len() // With each set of operations in their own thread.

);

// Get the results of our thundering herd. At this point, if every operation was

// applied in an atomic manner, then the COUNT within the database should match the

// SUM OF ALL THE OPERATIONS that we executed in parallel threads.

userStats = gateway.getStatsByUserID( userID );

echo( "<p> Expected value: #numberFormat( operations.sum() )# </p>" );

echo( "<p> Actual value: #numberFormat( userStats.stats.count )# </p>" );

</cfscript>

Here, we have 20 threads each trying to perform 10,000 operations in parallel. If all of this happens atomically, the final metric should be 200,000. And, when we run this ColdFusion code, we get the following output:

Expected value: 200,000

Actual value: 200,000

Woot woot! As you can see, we get the expected value. Which means, in the 200,000 "upsert" operations that our ColdFusion code performed on the JSON column, it never performed a dirty read. It was totes atomic!

The JSON API in MySQL 5.7 isn't nearly as robust as it is in other, more specialized document databases (MongoDB) and key-value stores (Redis). But, MySQL provides low-level JSON functions on top of the existing ACID (Atomicity, Consistency, Isolation, Durability) properties. Which means, we can build up all kinds of exciting functionality, like atomic increments, with just a little finagling in Lucee CFML 5.3.7.47.

Want to use code from this post? Check out the license.

Reader Comments

Ben. This is really interesting...

One small thing:

I think it should be:

I must say, I never realized you could use map() on a query, but it kind of makes sense, considering a query object is just an array of objects. Each array indice represents a row and each object key represents a column in the array indice row. Is this analysis correct?

Also, I have never seen this syntax before:

I have always used:

OR

Could you explain your syntax in relation to:

@Charles,

So, the

INSERT INTO .. SETsyntax is a MySQL extension to the SQL standard. And, I have to say, it's probably one of my favorite things in the world :D It's really just a short-hand for the baseINSERT INTOsyntax. What I love about it is that it puts the Names and Values right next to each other.The following statements are equivalent:

... and, the MySQL version:

As you can see, the column-name and the column-value are on the same line in the MySQL extension version. Which I love in terms of readability and bugs. In the former example (the normal SQL syntax), it's really easy to accidentally misalign a column and a value - trust me, I've done it plenty :D :D ; but, in the MySQL version, it's virtually impossible since they are right next to each other.

@Charles,

As far as the

ON DUPLICATE KEY UPDATEsyntax, that is used to generally perform what is called an "Upsert"; or, an "Insert" that falls-back to an "Update" when the insert violates a key constraint. So, you can think of those queries using this mental model:The key to this is that the

ON DUPLICATE KEY UPDATEportion only executes if a key constraint on the table is violated. For example, trying to insert a duplicate email address when the email is part of aUNIQUE KEY. Then, when theON DUPLICATE KEY UPDATEportion is executed, it doesn't need aWHEREclause because it is implicitly operating on the row that violated the key constraint.I've only ever used this in R&D so far; but, it's pretty cool. And can help with creating some "idempotent" operations - operations that are safe to perform over and over.

Ben. 100% on the MySQL Syntax:

This is so much easier to read. It is like the standard UPDATE syntax.

I really like the upsert methodology.

I must say that I have never used this before, because I use auto incrementing primary key columns and I usually know before hand whether I need to update or insert. But I can see how this methodology can save a whole load of time. I will use it in my next project:)

Now, I must have a look at the MySQL reference for:

Never seen these methods before, either...

So much to still learn;)

@Charles,

Well, to be honest, this exploration of

JSONcolumns is the first time I ever performed an "upsert" :D So, we're all learning together here.On a related note, one that I have used sometimes in the past is the

INSERT IGNORE INTO, which kind does something similar in that is will quietly ignore theINSERTif it violates a primary key.