Updating Multiple Documents With PouchDB

In SQL, updating multiple rows is as easy as writing an UPDATE statement. And, not only can you update multiple rows, you can even drive those updates through complex INNER JOIN statements. In a document database, like PouchDB, you give up this kind of power in return for things like master-master replication (not too shabby). But, at the end of the day, your application may still need to update multiple documents in your PouchDB database. As such, I wanted to look at how we could accomplish this with the bulkDocs() API.

Run this demo in my JavaScript Demos project on GitHub.

With PouchDB, there's no way to update just part of a document. Every time that you want to update a portion of a document, you have to persist the entire document, complete with _id and _rev properties. This way, PouchDB can handle conflicts, ensure atomic actions, and facilitate synchronization across databases.

This also means that every document that we want to update needs to be pulled into memory. With a bulk operation, where we want to update multiple documents at once, we have to read all the documents into memory, update them all, and then push them all back into the database. To store multiple documents at one time, we can use PouchDB's .bulkDocs() method.

NOTE: With massive bulk operations, you might need to paginate through records so as not to exhaust your RAM; but, such considerations are far outside anything that I've had to consider in my learning.
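To make the pagination idea a bit more concrete, here is a minimal sketch of what paging through a key range might look like. It assumes `db` is a PouchDB instance (or anything exposing compatible .allDocs() and .bulkDocs() methods); `updateInBatches`, `batchSize`, and `transform` are hypothetical names that I'm introducing for illustration. It uses the `limit`, `startkey`, and `skip` options of .allDocs() to resume each page just after the last _id seen.

```javascript
// Sketch only: update every document whose _id starts with the given prefix,
// reading and writing one page at a time instead of loading them all at once.
// ASSUMPTION: "db" is a PouchDB instance; "updateInBatches" is a made-up name.
async function updateInBatches( db, prefix, batchSize, transform ) {

    var options = {
        startkey: prefix,
        endkey: ( prefix + "\uffff" ),
        include_docs: true,
        limit: batchSize
    };
    var allResults = [];

    while ( true ) {

        var page = await db.allDocs( options );

        if ( ! page.rows.length ) {
            break;
        }

        var docs = page.rows.map(
            function( row ) {
                return( transform( row.doc ) );
            }
        );

        // NOTE: Still not a transaction; individual documents may fail.
        var results = await db.bulkDocs( docs );
        allResults = allResults.concat( results );

        // Resume the next page just AFTER the last _id in this page.
        options.startkey = page.rows[ page.rows.length - 1 ].id;
        options.skip = 1;

    }

    return( allResults );

}
```

Since the updates don't change any _id values, the key order stays stable while paging. The returned array is just the concatenation of each page's .bulkDocs() results, so it still has to be checked for per-document errors.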

The more documents you update with one .bulkDocs() call, the greater your chance of running into a conflict, especially if other clients are writing to the same database. And, since neither PouchDB nor CouchDB offers any transactional support, it's possible for the .bulkDocs() call to complete successfully while one or more of the documents fail to update. Luckily, the .bulkDocs() result contains success or error information for each document targeted in the operation. For example:

```json
[
    {
        "status": 409,
        "name": "conflict",
        "message": "Document update conflict",
        "error": true
    },
    {
        "ok": true,
        "id": "apple:fuji",
        "rev": "2-2f81daf8465c413fb1838d8f5083d238"
    },
    {
        "ok": true,
        "id": "apple:goldendelicious",
        "rev": "2-b6287fab09c8a82bbb6c053616d22a18"
    }
]
```
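Notice that the error entry in the sample result carries no "id". As far as I understand it, .bulkDocs() reports its results in the same order as the documents that were passed in, so one way to figure out which document failed is to pair the two arrays by index. Here is a small sketch of that idea; `pairBulkResults` is a hypothetical helper name, not part of PouchDB.

```javascript
// Sketch only: match each bulkDocs() result to the document at the same index.
// ASSUMPTION: results come back in the same order as the docs that were sent.
function pairBulkResults( docs, results ) {

    var outcome = {
        saved: [],
        failed: []
    };

    results.forEach(
        function( result, i ) {

            if ( result.error ) {

                // Error objects may not include an id, so keep the input doc.
                outcome.failed.push({
                    doc: docs[ i ],
                    status: result.status,
                    name: result.name,
                    message: result.message
                });

            } else {

                outcome.saved.push({
                    id: result.id,
                    rev: result.rev
                });

            }

        }
    );

    return( outcome );

}
```

With this, the `failed` collection holds the original documents, which is handy if you want to log them or try the update again.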

While .bulkDocs() is great for saving documents, how you read the documents into memory in the first place doesn't really matter. You can get documents individually, read them from a secondary index using a .query() call, or read them from the primary index using an .allDocs() call. Once the documents are in memory, you just have to update them and then save them back into the database.
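One reason the read path doesn't matter much is that .allDocs() and .query() both resolve with a "rows" array and, when include_docs is true, each row carries its document on row.doc. A tiny helper can normalize either result into a plain array of documents. This is just a sketch; `rowsToDocs` is a hypothetical name, and it skips rows that have no document (for example, a row for a key that wasn't found).

```javascript
// Sketch only: turn an allDocs() or query() result into an array of documents.
// ASSUMPTION: the call was made with { include_docs: true }.
function rowsToDocs( result ) {

    return(
        result.rows
            .map(
                function( row ) {
                    return( row.doc );
                }
            )
            // Drop rows that did not come back with a document.
            .filter(
                function( doc ) {
                    return( !! doc );
                }
            )
    );

}
```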

To explore this concept, I created a small demo in which our PouchDB database contains Apples and Pears. I'd like to increase the price of all Apples by 10%. And, to do this, I'm going to read the Apple-related documents using the .allDocs() method, increase each document's price by 10%, and then save the documents back into the database using the .bulkDocs() method.

```html
<!doctype html>
<html>
<head>
    <meta charset="utf-8" />

    <title>
        Updating Multiple Documents With PouchDB
    </title>
</head>
<body>

    <h1>
        Updating Multiple Documents With PouchDB
    </h1>

    <p>
        <em>Look at console - things being logged, yo!</em>
    </p>

    <script type="text/javascript" src="../../vendor/pouchdb/6.0.7/pouchdb-6.0.7.min.js"></script>
    <script type="text/javascript">

        getPristineDatabase( window, "db" ).then(
            function() {

                // To experiment with bulk updates, we need to have documents to
                // experiment on. Let's create some food products with prices that
                // we'll update based on the "type", which is being built into the
                // _id schema (type:).
                var promise = db.bulkDocs([
                    {
                        _id: "apple:fuji",
                        name: "Fuji",
                        price: 1.05
                    },
                    {
                        _id: "apple:applecrisp",
                        name: "Apple Crisp",
                        price: 1.33
                    },
                    {
                        _id: "pear:bosc",
                        name: "Bosc",
                        price: 1.95
                    },
                    {
                        _id: "apple:goldendelicious",
                        name: "Golden Delicious",
                        price: 1.27
                    },
                    {
                        _id: "pear:bartlett",
                        name: "Bartlett",
                        price: 1.02
                    }
                ]);

                return( promise );

            }
        )
        .then(
            function() {

                // Now that we've inserted the documents, let's fetch all the Apples
                // and output them so we can see the prices before we do a bulk update.
                var promise = db
                    .allDocs({
                        startkey: "apple:",
                        endkey: "apple:\uffff",
                        include_docs: true
                    })
                    .then(
                        function( results ) {

                            // Prepare docs for logging.
                            var docs = results.rows.map(
                                function( row ) {

                                    return( row.doc );

                                }
                            );

                            console.table( docs );

                        }
                    )
                ;

                return( promise );

            }
        )
        .then(
            function() {

                // In PouchDB, there is no "UPDATE" like there is in SQL; each document
                // has to be updated as a single atomic unit. As such, if we want to
                // update all Apples, for example, we have to first fetch all Apple
                // documents, alter their structure, and then .put() them back into the
                // database. This way, PouchDB can compare _rev values and handle any
                // conflicts.
                // To experiment with this, let's try increasing the price of each Apple
                // by 10-percent. To do this, we have to fetch all Apples. Since the
                // product type is built into the _id schema, we can use the bulk-fetch
                // method to gather them all (into memory) in one shot.
                var promise = db
                    .allDocs({
                        startkey: "apple:",
                        endkey: "apple:\uffff",
                        include_docs: true
                    })
                    .then(
                        function( results ) {

                            // Once we have all of the Apples read into memory, we can
                            // loop over the collection and alter the price, in memory.
                            // Since we're going to have to PUT these documents back into
                            // the database, we might as well transform the results into
                            // the collection of updated documents we have to persist.
                            var docsToUpdate = results.rows.map(
                                function( row ) {

                                    var doc = row.doc;

                                    // Apply the 10% price increase.
                                    // --
                                    // CAUTION: Using the "+" to coerce the rounded number
                                    // String back to a numeric data type for simple demo.
                                    doc.price = +( doc.price * 1.1 ).toFixed( 2 );

                                    return( doc );

                                }
                            );

                            // To look at how conflicts are handled, let's delete the
                            // revision for the first document. Since we can't update an
                            // existing document without its current revision, we know
                            // that the first document will hit a conflict.
                            delete( docsToUpdate[ 0 ]._rev );

                            // To PUT all documents in one operation (NOT A TRANSACTION),
                            // we can use the bulkDocs() method.
                            return( db.bulkDocs( docsToUpdate ) );

                        }
                    )
                    .then(
                        function( results ) {

                            // We know that we're going to get a conflict with the first
                            // document (since we deleted its revision value). With a bulk
                            // operation, however, the result won't fail (ie, reject the
                            // Promise) simply because one of the documents failed. As
                            // such, we have to examine the results to see if any of them
                            // indicate that an error occurred.
                            var errors = results.filter(
                                function( result ) {

                                    // NOTE: On success objects, { ok: true } and on error
                                    // objects, { error: true }.
                                    return( result.error === true );

                                }
                            );

                            // We should have at least ONE error (revision conflict).
                            if ( errors.length ) {

                                console.group( "Bulk update applied to %s docs with %s error(s).", results.length, errors.length );

                                errors.forEach(
                                    function( error, i ) {

                                        console.log( "%s : %s", i, error.message );

                                    }
                                );

                                console.groupEnd();

                            }

                        }
                    )
                ;

                return( promise );

            }
        )
        .then(
            function() {

                // Now that we've [tried] to apply a 10% price increase to all Apples,
                // let's re-fetch the Apple documents to see how they've changed.
                var promise = db
                    .allDocs({
                        startkey: "apple:",
                        endkey: "apple:\uffff",
                        include_docs: true
                    })
                    .then(
                        function( results ) {

                            // Prepare docs for logging.
                            var docs = results.rows.map(
                                function( row ) {

                                    return( row.doc );

                                }
                            );

                            console.table( docs );

                        }
                    )
                ;

                return( promise );

            }
        )
        .catch(
            function( error ) {

                console.warn( "An error occurred:" );
                console.error( error );

            }
        );


        // --------------------------------------------------------------------------- //
        // --------------------------------------------------------------------------- //


        // I ensure that a new database is created and stored in the given scope.
        function getPristineDatabase( scope, handle ) {

            var dbName = "javascript-demos-pouchdb-playground";

            var promise = new PouchDB( dbName )
                .destroy()
                .then(
                    function() {

                        // Store new, pristine database into the given scope.
                        return( scope[ handle ] = new PouchDB( dbName ) );

                    }
                )
            ;

            return( promise );

        }

    </script>

</body>
</html>
```

In this code, I start and end by logging the Apple documents to the console so that we can see how they have changed. But, the real meat of the demo is the actual update:

```javascript
.then(
    function( results ) {

        var docsToUpdate = results.rows.map(
            function( row ) {

                var doc = row.doc;

                // Apply the 10% price increase.
                doc.price = +( doc.price * 1.1 ).toFixed( 2 );

                return( doc );

            }
        );

        return( db.bulkDocs( docsToUpdate ) );

    }
)
```

When we read the documents into memory, they already contain _id and _rev properties. This is exactly what we need to persist a document. So, rather than try to do something clever with document cloning, we simply update the documents returned by the .allDocs() call and then pass those documents right back into the .bulkDocs() call. Not only does this make our lives easier, it also means that we're working with the most recent revision of each document during the update.
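If mutating the fetched documents in place feels uncomfortable, a shallow copy works just as well, as long as the copy keeps the _id and _rev properties. The sketch below also sidesteps the String coercion in the demo by doing the percentage math in whole cents. `withPriceIncrease` is a hypothetical helper, and the cents-based rounding is my own substitution for the demo's .toFixed() approach, not something PouchDB requires.

```javascript
// Sketch only: return a copy of the document with its price raised by the
// given percentage. The copy keeps _id and _rev, so it can be passed straight
// to bulkDocs().
function withPriceIncrease( doc, percent ) {

    // Work in whole cents to avoid the String round-trip of .toFixed().
    var cents = Math.round( doc.price * 100 );
    var increasedCents = Math.round( cents * ( 100 + percent ) / 100 );

    return(
        Object.assign( {}, doc, {
            price: ( increasedCents / 100 )
        })
    );

}
```

In the demo, this would replace the body of the .map() callback with something like `return( withPriceIncrease( row.doc, 10 ) )`.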

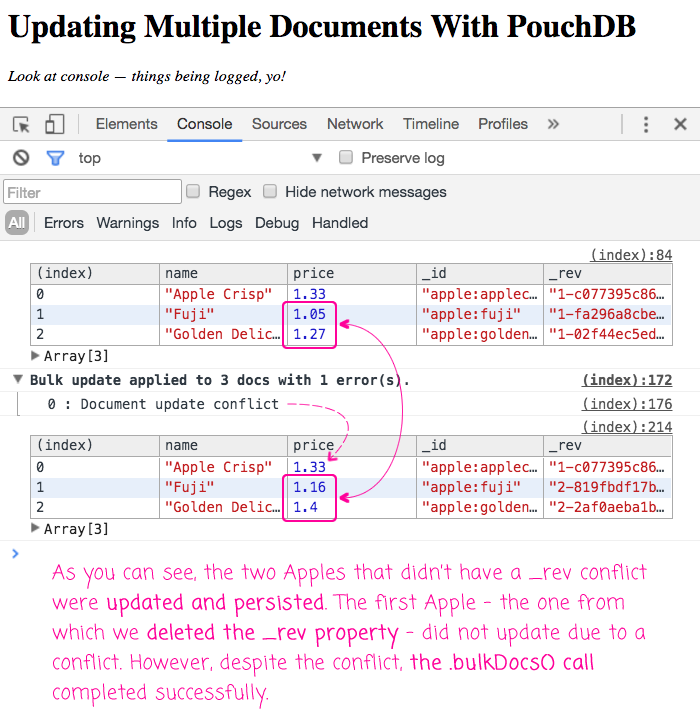

And, when we run the above code, we get the following page output:

As you can see, as expected, only two of the three documents updated successfully; and yet, the Promise for the .bulkDocs() API call did not get rejected. This is because the .bulkDocs() API call reports on the individual success and error of each targeted document.
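Since a conflict only fails the one document, a natural follow-up is to re-read just the conflicted documents and try again. Here is a rough sketch of that idea, assuming `db` is a PouchDB instance and that results line up by index with the documents that were sent. `bulkUpdateWithRetry`, `maxAttempts`, and the shape of the return value are all hypothetical; it uses the `keys` option of .allDocs() to fetch the latest revision of each pending document.

```javascript
// Sketch only: apply transform() to the given ids, retrying conflicts with a
// freshly-read revision. Not a transaction; some docs may still fail.
// ASSUMPTION: "db" is a PouchDB instance; results match docs by index.
async function bulkUpdateWithRetry( db, ids, transform, maxAttempts ) {

    var pendingIds = ids.slice();
    var otherErrors = [];

    for ( var attempt = 1 ; ( pendingIds.length && attempt <= maxAttempts ) ; attempt++ ) {

        // Re-read the pending docs so that we get their latest _rev values.
        var fetched = await db.allDocs({
            keys: pendingIds,
            include_docs: true
        });

        var docs = fetched.rows
            .filter( function( row ) { return( !! row.doc ); } )
            .map( function( row ) { return( transform( row.doc ) ); } )
        ;

        var results = await db.bulkDocs( docs );
        var nextIds = [];

        results.forEach(
            function( result, i ) {

                if ( result.status === 409 ) {

                    nextIds.push( docs[ i ]._id );

                } else if ( result.error ) {

                    otherErrors.push({ id: docs[ i ]._id, result: result });

                }

            }
        );

        pendingIds = nextIds;

    }

    // Anything left in "conflicted" ran out of attempts.
    return({
        conflicted: pendingIds,
        otherErrors: otherErrors
    });

}
```

Because each retry applies the transform to a freshly-read document, a non-idempotent change like a 10% price increase only lands once per document.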

Coming from a SQL background, updating multiple documents in a PouchDB database feels rather complex. Or rather, I should say, "tedious". After all, the underlying concept is fairly simple - read, update, save. It's just that it involves several steps - several steps that I believe could be encapsulated inside a PouchDB Plugin, which is what I want to look at in my next post.


Reader Comments

@All,

The concept of a bulk-update seemed like it would be perfect for a PouchDB plugin. As such, I did a follow-up post on my attempt to create an .updateMany() PouchDB plugin:

www.bennadel.com/blog/3199-creating-a-pouchdb-plugin-for-bulk-document-updates.htm

... it can be used in conjunction with .allDocs() or with .query(), based on how it's invoked.