Using An Ordered Struct As A Fixed-Size Cache In ColdFusion

In ColdFusion, an ordered struct (or linked struct) is a struct in which the order of the key iteration matches the order of key insertion. Using ordered structs can be somewhat of a contentious topic because the difference between an ordered struct and a regular struct is almost invisible. But, an ordered struct can be hugely useful. For example, ordered structs are essential for MongoDB queries and can greatly simplify HMAC generation. Another use-case for ordered structs is maintaining a very simple, fixed-size cache in ColdFusion.

In the companion app for my feature flags book, I'm caching feature flag configuration data in memory. This is an optimization to get around (comparatively) slow file system access. I expect my companion app to get relatively low traffic; so, keeping things in memory isn't a problem. But, as a prudent safe-guard for server stability, I'm only allowing a limited number of configurations to be cache at one time.

This limit is being enforced with a fixed-size cache that is implemented as an ordered struct. Since an ordered struct reports its keys in the same order in which they were inserted, I can easily see which entries are newer and which entries are older without having to maintain a separate set of metadata about the cache itself.

Here's an implementation of such a fixed-size cache. This ColdFusion component has a private method, pruneKeys(), which leverages this key-order behavior in order to remove older entries as the cache size grows beyond its size limit:

component

output = false

hint = "I provide a very simple, fixed-size key-value cache."

{

/**

* I initialize the fixed-lock of the given size.

*/

public void function init( required numeric maxSize ) {

variables.maxSize = arguments.maxSize;

// Note: Using an ORDERED struct to represent the internal cache. This is

// important since we will leverage the predictability of the .keyArray() method

// to manage the FIFO (First In, First Out) characteristics of the pruning.

variables.store = [:];

// Since the internal cache has size constraints, we need to apply locking around

// access so that we can apply those size constraints across threads.

variables.lockName = "FixedCacheLock:#createUuid()#";

// Since this is all in-memory manipulation, a timeout of (1) should be sufficient

// for both type of locks.

variables.readLockSettings = {

name: lockName,

type: "readonly",

timeout: 1

};

variables.writeLockSettings = {

name: lockName,

type: "exclusive",

timeout: 1

}

}

// ---

// PUBLIC METHODS.

// ---

/**

* I get the value cached at the given key; or null if the value isn't cached.

*/

public any function get( required string key ) {

lock attributeCollection = readLockSettings {

if ( store.keyExists( key ) ) {

return store[ key ];

}

}

}

/**

* I get a SHALLOW copy of the internal store.

*/

public struct function getAll() {

lock attributeCollection = readLockSettings {

return store.copy();

}

}

/**

* I cache the given value at the given key. The value is returned.

*/

public any function set(

required string key,

required any value

) {

lock attributeCollection = writeLockSettings {

store[ key ] = value;

// Note: Since we're using an exclusive lock around the mutation of the store,

// we know that AT MOST we're only ever 1-entry over the size limit.

pruneKeys();

}

return value;

}

// ---

// PRIVATE METHODS.

// ---

/**

* I ensure that the cache is kept within the size constraints.

*/

private void function pruneKeys() {

if ( store.size() > maxSize ) {

// Since we're using an ORDERED STRUCT to manage the internal store, we know

// that the array of keys will be returned in the order in which values were

// added to the cache. As such, the FIRST key in the array will represent the

// oldest entry in the cache. And, since we're single-threading the SETTING of

// new keys, we know that we only have to remove first key in order to keep

// the cache at the right size.

store.delete( store.keyArray().first() );

}

}

}

As you can see, in the pruneKeys() method, the ordered struct allows us to manage the size of the internal cache with a single line of code:

store.delete( store.keyArray().first() );

Since the ordered struct reports its keys in the same order in which they were inserted, we know that the .first() key is always the oldest key in the set. Which means, when the cache has exceeded its max size, we can delete the first key in order to keep the cache within its size constraints.

To see this fixed-size behavior in action, let's created a fixed cache of size 10 and add 100 entries:

<cfscript>

// Only the last 10-keys will be kept in the cache.

fixedCache = new FixedCache( 10 );

for ( i = 1 ; i <= 100 ; i++ ) {

fixedCache.set( "Key-#i#", "Value-#i#" );

}

// We should only see 10 entries in the shallow-copy.

dump( fixedCache.getAll() );

</cfscript>

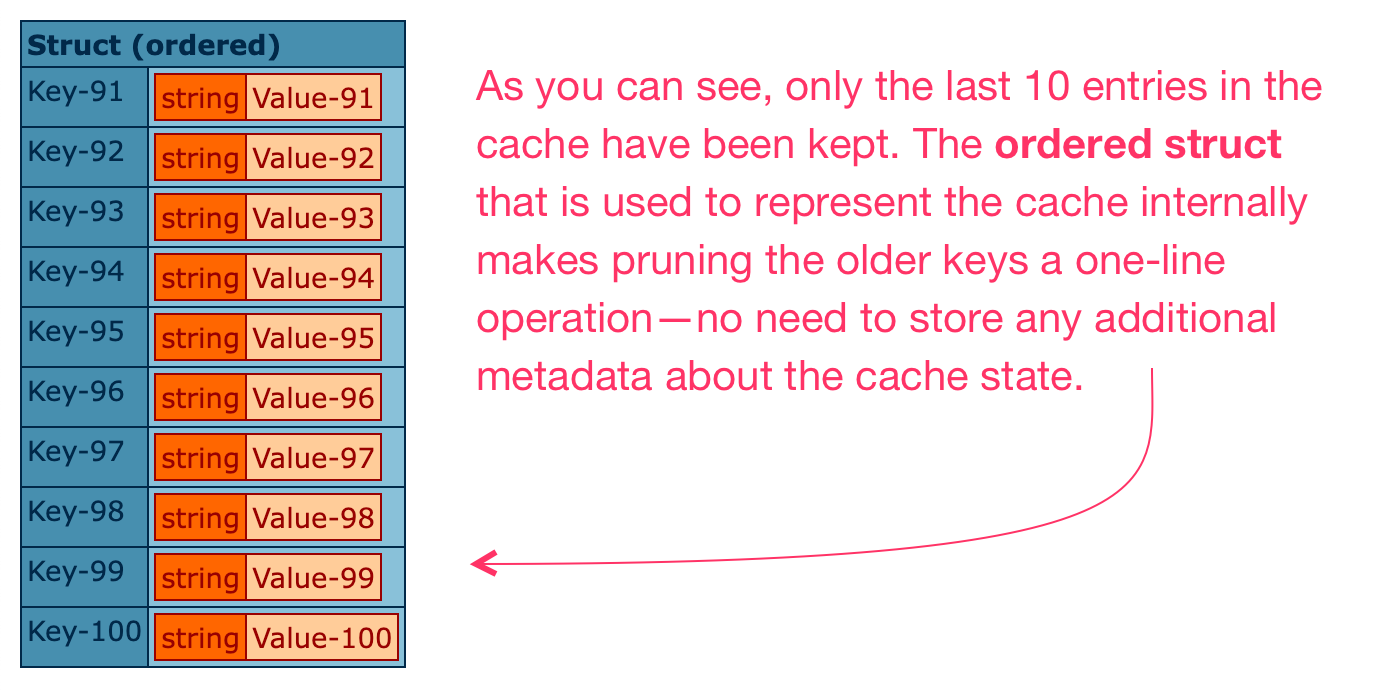

And, when we run this ColdFusion code, we get the following output:

Of the 100 key-values pairs added to the cache, only the last 10 inserted values are still present in the cache state.

This is, of course, a very simple implementation of a cache. It doesn't, for example, take into account how often a key is accessed. Nor does it disconnect the inserted value from the calling context (by duplicating / deep cloning the value). But that's kind of the point—the simplicity of the implementation is commensurate with the simplicity of the requirements. And, the use of an ordered struct allows us to reduce the number of moving parts in the implementation while still adhering to the requirements.

Don't underestimate the power of ordered structs in ColdFusion. Thar be gold in them there hills!

Want to use code from this post? Check out the license.

Reader Comments

there's a hidden new feature in Lucee 6.1

structNew( "max:50" ), but don't let anyone know I told you ok, just between us ok?https://github.com/lucee/Lucee/commit/aab00654330bb18d015056b08b92a125bda5ed86

Ha ha, I'll keep it just between us 😉

What would you say is the advantage of the struct style vs. an array of 10 items like:

@Will,

The biggest difference is when having to check to see if a value is already cached. In the

.get()method, you'd have to change the relatively simple call,store.keyExists(key), with something that iterates over the array to see if the key exists.Makes sense. I mean, I've done my share of in-object caching with structs, to be sure. I've never limited by quantity that way, but I see the idea. With code at work, we use Cachebox within Coldbox. I don't think it has a limited-quantity ruleset. Maybe they should add it!

It's definitely a niche-case. I think most cache eviction rules revolve around access rates (keeping popular items in the cache and expunging rarely-used items).

The only use case I have for ordered structs is purely aesthetic..I like when my structs are ordered (for me) when I dump and inspect them. Haha! But it's great to see another, more code-worthy use case. However, I do like the idea of a cache that retains items based on usage while (potentially) factoring in how computationally intensive they might be.

@Chris,

Ha ha, I'm 100% with you there - if I'm outputting to JSON or using a dump, I'll often use an ordered struct just so my output feels more human-friendly :D Ain't nothing wrong with that!

Post A Comment — ❤️ I'd Love To Hear From You! ❤️

Post a Comment →