Using The LaunchDarkly REST API To Update Rule Configuration In Lucee CFML 5.3.7.47

I am not bashful about the fact that I love using LaunchDarkly for our feature flags at InVision. But, the vast majority of my experience revolves around their Java SDK which implements the rules engine consumer and the server-sent event streaming. I actually have very little experience with the LaunchDarkly REST API, which allows you to programmatically update the rules engine without using the LaunchDarkly dashboard. At work, I need to build a simple toggle for our Customer Facing Team (CFT) that will allow them to add or remove an enterprise subdomain from a targeting rule within a given feature flag. As such, I needed to figure out how to update rule configurations using the LaunchDarkly REST API in Lucee CFML 5.3.7.47.

Most of my work consists of reading from and writing to my own database. This requires some abstractions; however, since it is so tightly coupled to our application architecture, we can bake a lot of assumptions into how we wire components together.

Building a remote API client, on the other hand, requires a slightly different set of skills because it necessarily needs to be more flexible. Not only does a remote API client need to solve a given set of problems now, it must be able to evolve over time as new types of problems pop up in the application.

To be honest, I'm not too good at architecting this kind of client - it's not something I do very often. As such, I thought this LaunchDarkly REST API requirement presented a lovely context in which to practice this skill.

My first step in this process was just to get something working. The LaunchDarkly REST API is kind of complicated; and, it uses a concept that I've never seen before: JSON patching. Furthermore, it uses two different types of JSON patching: "standard" and "semantic". The standard patching requires you to explicitly tell the API which data structures and values you want to change. The semantic patching allows you to be a little more high-level, telling the API what you want to do and allowing the API to figure out some of the lower-level details for you.
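To make the difference concrete, here's a minimal CFML sketch of the two request bodies for the same change (adding acme to a subdomain clause). The environment key (production), the 0 indices in the standard patch path, and the rule and clause IDs are placeholders, not values from this post. The standard body follows the RFC 6902 JSON Patch format, in which a trailing - path segment appends to an array; the semantic body is the shape the Gateway in this post sends along with the Content-Type: application/json; domain-model=launchdarkly.semanticpatch header.

```cfml
<cfscript>
	// Placeholder values, for illustration only.
	environmentKey = "production";
	subdomain = "acme";

	// "Standard" JSON Patch (RFC 6902): an array of low-level operations. We have to
	// know the exact path to the value we want to change. The rule and clause
	// indices (0) are ASSUMPTIONS about the current state of the flag.
	standardPatch = [
		{
			op: "add",
			path: "/environments/#environmentKey#/rules/0/clauses/0/values/-",
			value: subdomain
		}
	];

	// "Semantic" patch: we describe the intent and let the API work out the details.
	// The rule and clause IDs are placeholders.
	semanticPatch = {
		environmentKey: environmentKey,
		instructions: [
			{
				kind: "addValuesToClause",
				ruleId: "rule-1",
				clauseId: "clause-1",
				values: [ subdomain ]
			}
		]
	};

	writeOutput( serializeJson( standardPatch ) & "<br />" );
	writeOutput( serializeJson( semanticPatch ) );
</cfscript>
```

The standard patch only works if rule 0 and clause 0 really are the subdomain rule and clause, which is exactly the kind of lower-level detail the semantic patch lets the API figure out.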

Once I got something janky working, I started to pull it apart and refactor it into layers that have more focused sets of responsibilities. Here's the layering that I finally came to:

- REST HTTP Client - handles the low-level CFHttp tag implementation, complete with retry logic, status code evaluation, and response parsing.
- REST Gateway - handles the higher-level semantic JSON patching concepts, including authentication and environment selection.
- REST Service - handles the application-specific abstractions for adding and removing subdomains to and from feature flag targeting, respectively.

To be clear, I don't know if this is the right separation of concerns. But, it feels like I get to remove "noise" from each layer (pushing it down into the layer below it), which I think is probably a good sign.

As I mentioned at the top, the core case for this exploration is to be able to take a company subdomain (e.g., acme in acme.invisionapp.com) and use it as targeting for a given feature flag. This case already bakes in a number of assumptions about how our application and feature flags work:

- There is a custom property called subdomain.
- There is a rule that targets this property.
- The feature flag only has two variations: true and false.
- The rule only has a single clause, which targets subdomains.

None of these assumptions are universally true in the greater landscape of LaunchDarkly. This is why it is so enjoyable to simply use their administrative dashboard (which is really well done). It's also why making abstraction layers is so complicated: I am trying to find the right balance of flexibility and simplicity (along with a healthy dose of application-specific assumptions).
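Since LaunchDarkly doesn't enforce any of these assumptions, one option is to check them before mutating a flag. Here's a hypothetical guard, checkSubdomainAssumptions(), that is not part of the code in this post. It is sketched against a hand-built struct that mimics the relevant parts of a flag response (variations, plus environments.{key}.rules[].clauses[]); the IDs and the production environment key are placeholders:

```cfml
<cfscript>
	/**
	* HYPOTHETICAL guard (not part of this post's code): I check our application's
	* subdomain-targeting assumptions against a flag struct and return an array of
	* problems (empty when every assumption holds).
	*/
	array function checkSubdomainAssumptions(
		required struct featureFlag,
		required string environmentKey
		) {

		var problems = [];

		// Assumption: exactly two variations, true and false.
		var variationValues = [];

		for ( var variation in featureFlag.variations ) {
			variationValues.append( lcase( variation.value ) );
		}

		variationValues.sort( "text" );

		if ( variationValues.toList() != "false,true" ) {
			problems.append( "Flag must have exactly two variations: true and false." );
		}

		// Assumption: any rule that targets subdomains has a single clause.
		for ( var rule in featureFlag.environments[ environmentKey ].rules ) {
			var attributes = [];

			for ( var clause in rule.clauses ) {
				attributes.append( clause.attribute );
			}

			if ( attributes.findNoCase( "subdomain" ) && ( attributes.len() != 1 ) ) {
				problems.append( "Rule #rule._id# targets subdomain but has #attributes.len()# clauses." );
			}
		}

		return( problems );
	}

	// Hand-built sample shaped like part of a flag response (placeholder IDs).
	sampleFlag = {
		variations: [
			{ _id: "var-true", value: true },
			{ _id: "var-false", value: false }
		],
		environments: {
			production: {
				rules: [
					{
						_id: "rule-1",
						clauses: [
							{ _id: "clause-1", attribute: "subdomain", op: "in", values: [ "acme" ] }
						]
					}
				]
			}
		}
	};

	writeOutput( checkSubdomainAssumptions( sampleFlag, "production" ).len() );
</cfscript>
```

A Service could call something like this right after fetching the flag and throw if the returned array is not empty, rather than sending instructions to a flag that doesn't fit the model.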

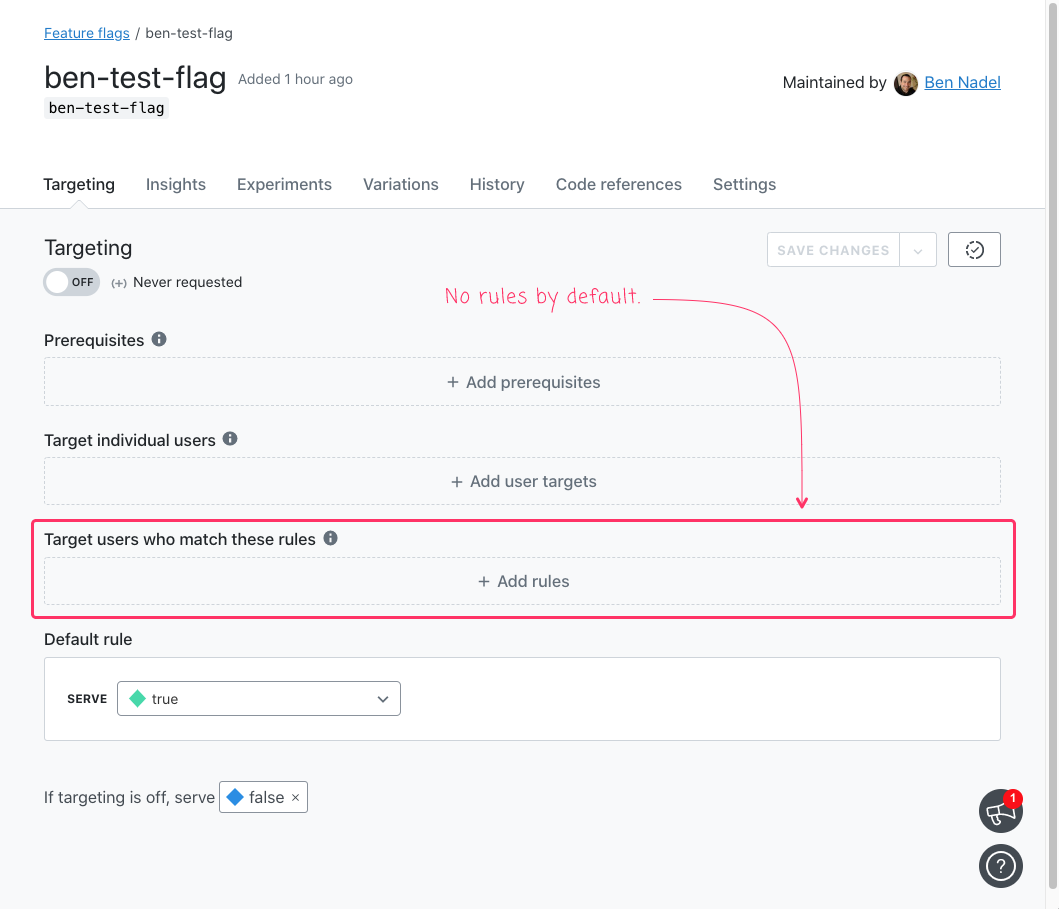

With that said, let's work from the outside (highest level abstractions) in (lowest level abstractions). First, I created a boolean variant feature flag, ben-test-flag, with which to test. By default, it has no targeting whatsoever:

As you can see, by default, there are no rules.

As our first step in this implementation, we're going to create our layers of abstraction and wire them together:

```cfml
<cfscript>

	// I handle low-level HTTP requests.
	launchDarklyRestHttpClient = new LaunchDarklyRestHttpClient();

	// I handle low-level semantic operations.
	launchDarklyRestGateway = new LaunchDarklyRestGateway(
		accessToken = server.system.environment.LAUNCH_DARKLY_REST_ACCESS_TOKEN,
		launchDarklyRestHttpClient = launchDarklyRestHttpClient
	);

	// I provide high-level abstractions over feature flag mutations.
	launchDarklyService = new LaunchDarklyService(
		projectKey = server.system.environment.LAUNCH_DARKLY_REST_PROJECT_KEY,
		environmentKey = server.system.environment.LAUNCH_DARKLY_REST_ENVIRONMENT_KEY,
		launchDarklyRestGateway = launchDarklyRestGateway
	);

</cfscript>
```

As you can see, we have our low-level HTTP layer, our high-level Gateway layer, and our application-specific Service layer. This is in a file called config.cfm, which we'll include in our subsequent steps. Our demo code will only ever touch the LaunchDarklyService ColdFusion component directly - the HTTP and Gateway layers are hidden away.

Now that we have our Service ColdFusion component, let's create a rule and add the subdomain acme to it as a recipient of the true value:

```cfml
<cfscript>

	// Create the components and wire them together.
	include template = "./config.cfm";

	launchDarklyService.addSubdomainTargeting(
		key = "ben-test-flag",
		subdomain = "acme"
	);

</cfscript>
```

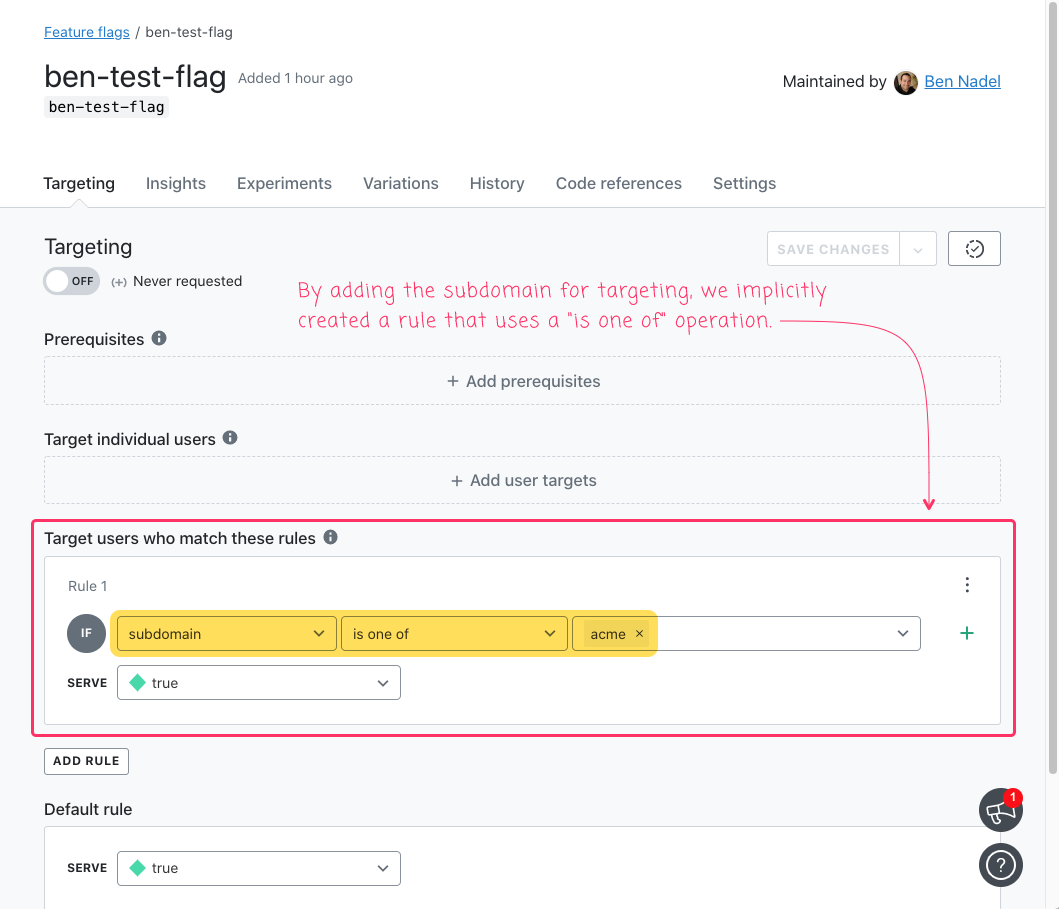

Here, we're passing in the key of the feature flag that we want to update and the enterprise subdomain (acme) that we want to target. And, after we run this ColdFusion code, our LaunchDarkly dashboard looks like this:

As you can see, our ColdFusion service layer implicitly created a rule for us and then added the acme subdomain to it.
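Under the hood, that first call ended up in the Gateway's addRule() method. Here's a sketch that builds the same semantic patch body on its own, so you can inspect what gets sent in the PATCH request. The production environment key and the variation ID are placeholders (in the real flow, the ID comes from the flag's true variation):

```cfml
<cfscript>
	// Placeholder: in the real flow, this comes from variationsIndex[ "true" ]._id.
	trueVariationId = "var-true";

	// Mirrors the body that LaunchDarklyRestGateway.addRule() serializes. The
	// "production" environment key is a placeholder.
	addRuleBody = serializeJson({
		environmentKey: "production",
		instructions: [
			{
				kind: "addRule",
				clauses: [
					{
						attribute: "subdomain",
						op: "in",
						values: [ "acme" ]
					}
				],
				variationId: trueVariationId
			}
		]
	});

	writeOutput( addRuleBody );
</cfscript>
```

Note that the instruction identifies the variation to serve by ID rather than by value, which is why the Service needs a way to look up the "true" variation at all.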

Let's try adding another subdomain. This time, we're going to add cyberdyne:

```cfml
<cfscript>

	// Create the components and wire them together.
	include template = "./config.cfm";

	launchDarklyService.addSubdomainTargeting(
		key = "ben-test-flag",
		subdomain = "cyberdyne"
	);

</cfscript>
```

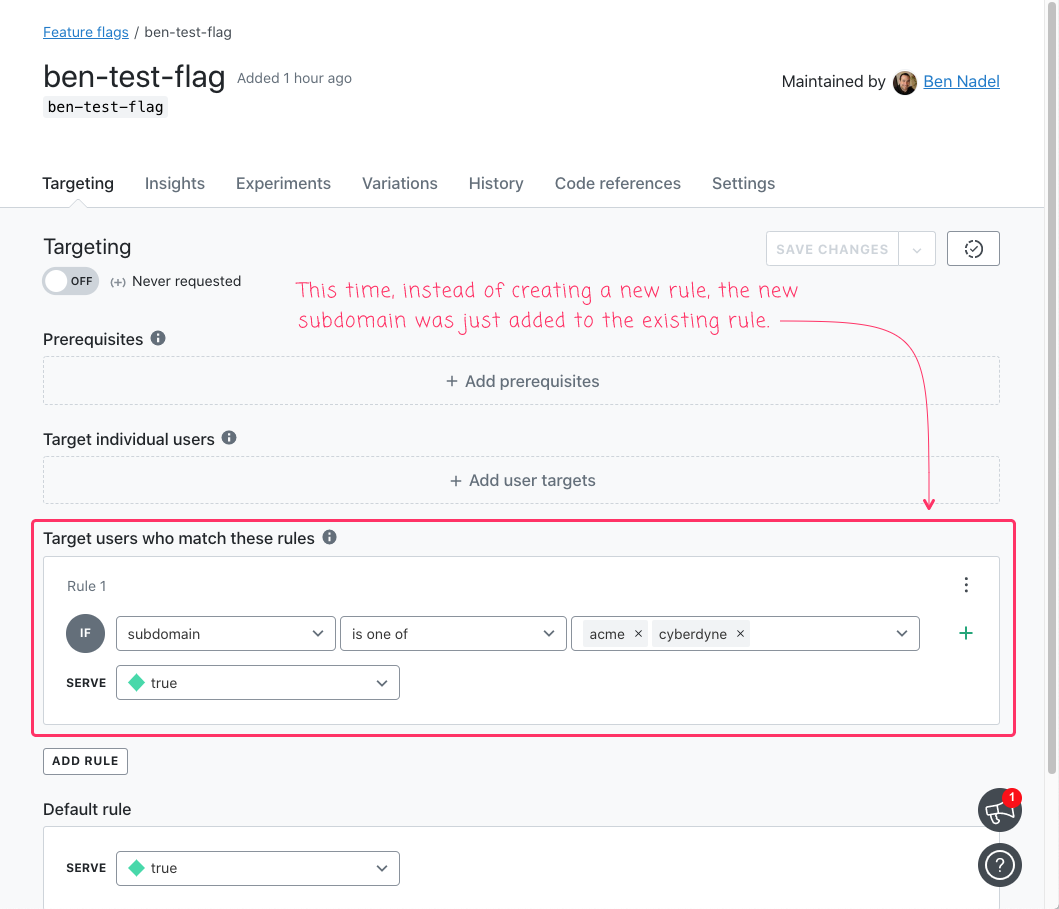

As you can see, it's the same exact code, only we're adding a different subdomain value. And, after we run this ColdFusion code, our LaunchDarkly dashboard looks like this:

As you can see, when we went to add the second subdomain for targeting, our ColdFusion service layer didn't create another rule. Instead, it added cyberdyne to the existing rule.
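The decision that kept the Service from creating a second rule boils down to a single index lookup. Here's a hypothetical, HTTP-free sketch of that branch from addSubdomainTargeting(): given indexes shaped like the ones the Service builds, planAddSubdomain() returns the semantic patch instruction that would be sent. The function name and the IDs are placeholders for illustration:

```cfml
<cfscript>
	/**
	* HYPOTHETICAL pure function (not part of the Service): I return the semantic
	* patch instruction that addSubdomainTargeting() would send, without any HTTP.
	* The index arguments are assumed to be shaped like the Service's indexes.
	*/
	struct function planAddSubdomain(
		required struct rulesIndex,
		required struct variationsIndex,
		required string subdomain
		) {

		// A "subdomain is one of" rule already exists: append to its single clause.
		if ( rulesIndex.keyExists( "subdomain.in" ) ) {
			var rule = rulesIndex[ "subdomain.in" ];

			return( {
				kind: "addValuesToClause",
				ruleId: rule._id,
				clauseId: rule.clauses.first()._id,
				values: [ subdomain ]
			} );
		}

		// No such rule yet: create one that serves the "true" variation.
		return( {
			kind: "addRule",
			clauses: [
				{ attribute: "subdomain", op: "in", values: [ subdomain ] }
			],
			variationId: variationsIndex[ "true" ]._id
		} );
	}

	// Placeholder indexes for illustration.
	variationsIndex = {};
	variationsIndex[ "true" ] = { _id: "var-true" };
	variationsIndex[ "false" ] = { _id: "var-false" };

	rulesIndex = {};
	rulesIndex[ "subdomain.in" ] = { _id: "rule-1", clauses: [ { _id: "clause-1" } ] };

	// First request (no rule yet) vs. second request (rule exists).
	writeOutput( planAddSubdomain( {}, variationsIndex, "acme" ).kind & "<br />" );
	writeOutput( planAddSubdomain( rulesIndex, variationsIndex, "cyberdyne" ).kind );
</cfscript>
```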

As a final, high-level test, let's remove acme from the targeting:

```cfml
<cfscript>

	// Create the components and wire them together.
	include template = "./config.cfm";

	launchDarklyService.removeSubdomainTargeting(
		key = "ben-test-flag",
		subdomain = "acme"
	);

</cfscript>
```

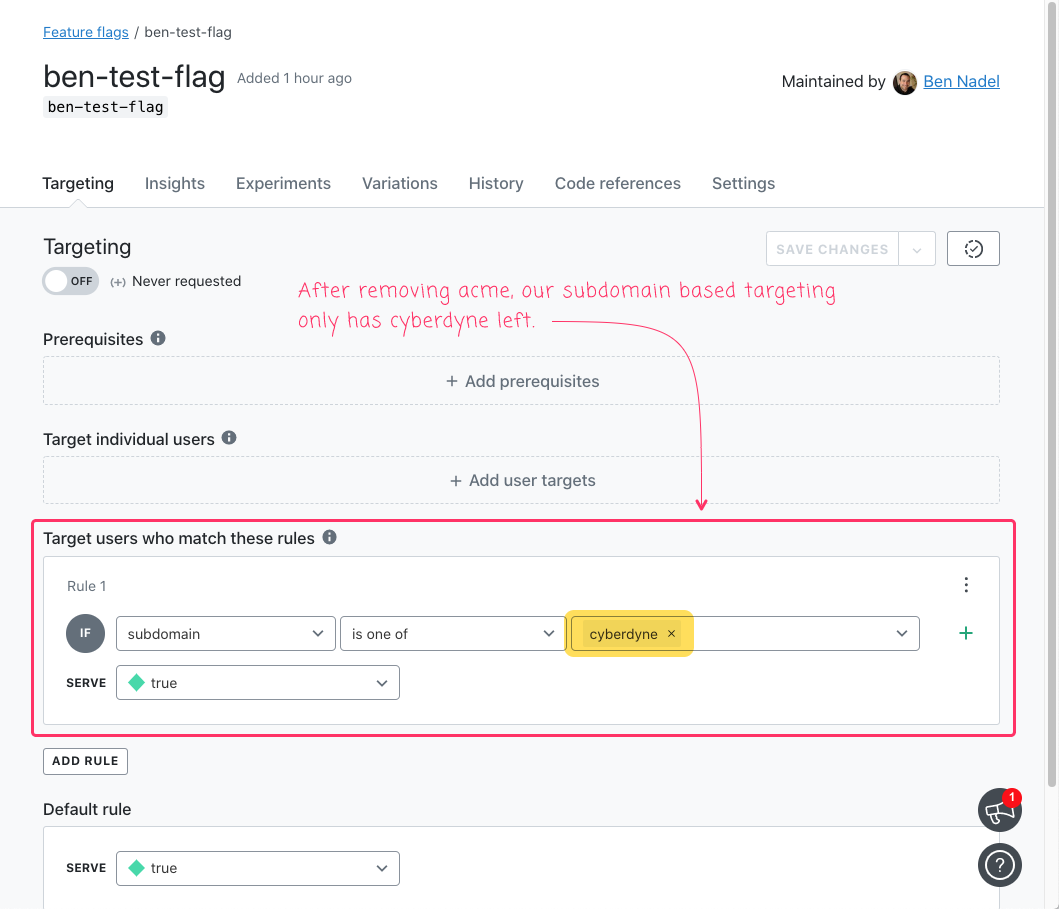

And, after we run this ColdFusion code, our LaunchDarkly dashboard looks like this:

As you can see, our ColdFusion service layer was able to remove acme, leaving cyberdyne as the only actively targeted subdomain.

Now that we see what is happening in this exploration, let's start digging down into the layers of abstraction so we can see how everything is wired together. At the top, we have our application-specific ColdFusion component, LaunchDarklyService.cfc. This Service layer is allowed to bake in the most assumptions about how it will be used because it is being created specifically for our application.

This ColdFusion component works by fetching the given feature flag and then checking to see if it has a rule specifically for targeting subdomains. If it doesn't, it creates it. Then, it adds the subdomain to the rule:

```cfml
component
	output = false
	hint = "I provide high-level abstractions over LaunchDarkly's HTTP REST API."
	{

	/**
	* NOTE: In our application architecture, we lock the runtime down to a specific
	* Project and Environment. As such, these values will be used for all subsequent
	* method calls and cannot be changed on a per-request basis.
	*/
	public void function init(
		required string projectKey,
		required string environmentKey,
		required any launchDarklyRestGateway
		) {

		variables.projectKey = arguments.projectKey;
		variables.environmentKey = arguments.environmentKey;
		variables.gateway = arguments.launchDarklyRestGateway;

	}

	// ---
	// PUBLIC METHODS.
	// ---

	/**
	* I add the given subdomain to the "true" targeting for the given feature flag.
	*/
	public void function addSubdomainTargeting(
		required string key,
		required string subdomain
		) {

		var feature = gateway.getFeatureFlag(
			projectKey = projectKey,
			environmentKey = environmentKey,
			key = key
		);

		// The feature flag data structure is large and complex. And, we'll need to
		// reference parts of it by ID. As such, let's build-up some indexes that make
		// parts of it easier to reference.
		var rulesIndex = buildRulesIndex( feature );
		// CAUTION: For the purposes of our application, we're going to assume that this
		// feature flag only has two boolean variations: True and False.
		var variationsIndex = buildVariationsIndex( feature );

		// If we already have a rule that represents a "subdomain is one of" targeting,
		// we can add the given subdomain to the existing rule and clause.
		if ( rulesIndex.keyExists( "subdomain.in" ) ) {

			// CAUTION: For the purposes of our application, we are going to assume that
			// all subdomain targeting rules have a single clause.
			var rule = rulesIndex[ "subdomain.in" ];
			var clause = rule.clauses.first();

			gateway.addValuesToClause(
				projectKey = projectKey,
				environmentKey = environmentKey,
				key = key,
				ruleId = rule._id,
				clauseId = clause._id,
				values = [ subdomain ]
			);

		// If there is no existing rule that represents "subdomain is one of" targeting,
		// we have to create it (using the given value as the initial value).
		} else {

			gateway.addRule(
				projectKey = projectKey,
				environmentKey = environmentKey,
				key = key,
				attribute = "subdomain",
				op = "in",
				values = [ subdomain ],
				variationId = variationsIndex[ "true" ]._id
			);

		}

	}


	/**
	* I remove the given subdomain from the "true" targeting for the given feature flag.
	*/
	public void function removeSubdomainTargeting(
		required string key,
		required string subdomain
		) {

		var feature = gateway.getFeatureFlag(
			projectKey = projectKey,
			environmentKey = environmentKey,
			key = key
		);

		// The feature flag data structure is large and complex. And, we'll need to
		// reference parts of it by ID. As such, let's build-up some indexes that make
		// parts of it easier to reference.
		var rulesIndex = buildRulesIndex( feature );
		// CAUTION: For the purposes of our application, we're going to assume that this
		// feature flag only has two boolean variations: True and False.
		var variationsIndex = buildVariationsIndex( feature );

		// If there is no existing rule that represents "subdomain is one of" targeting,
		// then there's no place to remove the given subdomain. As such, we can just
		// ignore this request.
		if ( ! rulesIndex.keyExists( "subdomain.in" ) ) {

			return;

		}

		// CAUTION: For the purposes of our application, we are going to assume that all
		// subdomain targeting rules have a single clause.
		var rule = rulesIndex[ "subdomain.in" ];
		var clause = rule.clauses.first();

		gateway.removeValuesFromClause(
			projectKey = projectKey,
			environmentKey = environmentKey,
			key = key,
			ruleId = rule._id,
			clauseId = clause._id,
			values = [ subdomain ]
		);

	}

	// ---
	// PRIVATE METHODS.
	// ---

	/**
	* I create an index that maps rule-operations to rules.
	*
	* NOTE: The underlying feature flag data structure can return rules across all of the
	* environments. However, we're only going to concern ourselves with the single
	* environment associated with this runtime.
	*/
	private struct function buildRulesIndex( required struct featureFlag ) {

		var rulesIndex = {};

		for ( var rule in featureFlag.environments[ environmentKey ].rules ) {

			var keyParts = [];

			for ( var clause in rule.clauses ) {

				keyParts.append( clause.attribute );
				keyParts.append( clause.op );

			}

			rulesIndex[ keyParts.toList( "." ).lcase() ] = rule;

		}

		return( rulesIndex );

	}


	/**
	* I create an index that maps variation values to variations.
	*/
	private struct function buildVariationsIndex( required struct featureFlag ) {

		var variationsIndex = {};

		for ( var variation in featureFlag.variations ) {

			variationsIndex[ lcase( variation.value ) ] = variation;

		}

		return( variationsIndex );

	}

}
```
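To see what the two private index helpers produce, here's a standalone sketch that runs the same logic over a hand-built struct shaped like the relevant parts of a flag response. The IDs and the production environment key are placeholders, not real API output:

```cfml
<cfscript>
	// Hand-built sample shaped like part of a flag response (placeholder IDs).
	sampleFlag = {
		variations: [
			{ _id: "var-true", value: true },
			{ _id: "var-false", value: false }
		],
		environments: {
			production: {
				rules: [
					{
						_id: "rule-1",
						clauses: [
							{ _id: "clause-1", attribute: "subdomain", op: "in", values: [ "acme" ] }
						]
					}
				]
			}
		}
	};

	// Same key logic as buildRulesIndex(): "attribute.op" for each clause, joined
	// with dots and lowercased.
	rulesIndex = {};

	for ( rule in sampleFlag.environments.production.rules ) {
		keyParts = [];

		for ( clause in rule.clauses ) {
			keyParts.append( clause.attribute );
			keyParts.append( clause.op );
		}

		rulesIndex[ keyParts.toList( "." ).lcase() ] = rule;
	}

	// Same logic as buildVariationsIndex(): lowercased value => variation.
	variationsIndex = {};

	for ( variation in sampleFlag.variations ) {
		variationsIndex[ lcase( variation.value ) ] = variation;
	}

	writeOutput( rulesIndex[ "subdomain.in" ]._id & " / " & variationsIndex[ "true" ]._id );
</cfscript>
```

With the indexes in place, "is there already a subdomain rule?" becomes a keyExists() check and "which ID is the true variation?" becomes a single key lookup, instead of loops over the raw arrays.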

Since the feature flag data structure is rather complex (which is, again, why the LaunchDarkly dashboard is such a joy to use), we have to create some indexes that allow us to reference parts of the data structure using human-friendly values. These values are then piped into the next layer down - the Gateway layer - which exposes the semantic JSON patching:

```cfml
component
	output = false
	hint = "I provide low-level semantic abstractions over LaunchDarkly's HTTP REST API."
	{

	/**
	* I initialize the LaunchDarkly REST API with the given access token.
	*/
	public void function init(
		required string accessToken,
		required any launchDarklyRestHttpClient
		) {

		variables.accessToken = arguments.accessToken;
		variables.httpClient = arguments.launchDarklyRestHttpClient;
		variables.baseApiUrl = "https://app.launchdarkly.com/api/v2";

		// All requests to the HTTP REST API will need to include the authorization.
		variables.authorizationHeader = {
			name: "Authorization",
			value: accessToken
		};

		// The HTTP REST API supports two different types of "JSON patches" determined by
		// the existence of a special Content-Type header. This "semantic patch" allows
		// us to use "commands" to update the underlying JSON regardless(ish) of the
		// current state of the JSON document.
		variables.semanticPatchHeader = {
			name: "Content-Type",
			value: "application/json; domain-model=launchdarkly.semanticpatch"
		};

	}

	// ---
	// PUBLIC METHODS.
	// ---

	/**
	* I apply the semantic JSON patch operation for "addRule". Returns the updated
	* feature flag struct.
	*/
	public struct function addRule(
		required string projectKey,
		required string environmentKey,
		required string key,
		required string attribute,
		required string op,
		required array values,
		required string variationId
		) {

		return(
			httpClient.makeHttpRequestWithRetry(
				requestMethod = "patch",
				requestUrl = "#baseApiUrl#/flags/#projectKey#/#key#",
				headerParams = [
					authorizationHeader,
					semanticPatchHeader
				],
				body = serializeJson({
					environmentKey: environmentKey,
					instructions: [
						{
							kind: "addRule",
							clauses: [
								{
									attribute: attribute,
									op: op,
									values: values
								}
							],
							variationId: variationId
						}
					]
				})
			)
		);

	}


	/**
	* I apply the semantic JSON patch operation for "addValuesToClause". Returns the
	* updated feature flag struct.
	*/
	public struct function addValuesToClause(
		required string projectKey,
		required string environmentKey,
		required string key,
		required string ruleId,
		required string clauseId,
		required array values
		) {

		return(
			httpClient.makeHttpRequestWithRetry(
				requestMethod = "patch",
				requestUrl = "#baseApiUrl#/flags/#projectKey#/#key#",
				headerParams = [
					authorizationHeader,
					semanticPatchHeader
				],
				body = serializeJson({
					environmentKey: environmentKey,
					instructions: [
						{
							kind: "addValuesToClause",
							ruleId: ruleId,
							clauseId: clauseId,
							values: values
						}
					]
				})
			)
		);

	}


	/**
	* I return the feature flag data for the given key.
	*/
	public struct function getFeatureFlag(
		required string projectKey,
		required string environmentKey,
		required string key
		) {

		return(
			httpClient.makeHttpRequestWithRetry(
				requestMethod = "get",
				requestUrl = "#baseApiUrl#/flags/#projectKey#/#key#",
				headerParams = [
					authorizationHeader
				],
				urlParams = [
					{
						name: "env",
						value: environmentKey
					}
				]
			)
		);

	}


	/**
	* I apply the semantic JSON patch operation for "removeValuesFromClause". Returns the
	* updated feature flag struct.
	*/
	public struct function removeValuesFromClause(
		required string projectKey,
		required string environmentKey,
		required string key,
		required string ruleId,
		required string clauseId,
		required array values
		) {

		return(
			httpClient.makeHttpRequestWithRetry(
				requestMethod = "patch",
				requestUrl = "#baseApiUrl#/flags/#projectKey#/#key#",
				headerParams = [
					authorizationHeader,
					semanticPatchHeader
				],
				body = serializeJson({
					environmentKey: environmentKey,
					instructions: [
						{
							kind: "removeValuesFromClause",
							ruleId: ruleId,
							clauseId: clauseId,
							values: values
						}
					]
				})
			)
		);

	}

}
```
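The three PATCH methods in the Gateway differ only in their instruction struct. As one possible refactoring (a sketch, not the code I'm actually using), a hypothetical buildSemanticPatchRequest() helper could assemble the shared request arguments. The project key, access token, and IDs below are placeholders:

```cfml
<cfscript>
	/**
	* HYPOTHETICAL helper (not part of LaunchDarklyRestGateway): I build the request
	* arguments shared by every semantic patch so that each public Gateway method
	* only has to supply its own instruction struct.
	*/
	struct function buildSemanticPatchRequest(
		required string baseApiUrl,
		required string accessToken,
		required string projectKey,
		required string environmentKey,
		required string key,
		required struct instruction
		) {

		return( {
			requestMethod: "patch",
			requestUrl: "#baseApiUrl#/flags/#projectKey#/#key#",
			headerParams: [
				{ name: "Authorization", value: accessToken },
				{ name: "Content-Type", value: "application/json; domain-model=launchdarkly.semanticpatch" }
			],
			body: serializeJson({
				environmentKey: environmentKey,
				instructions: [ instruction ]
			})
		} );
	}

	// Placeholder project, token, and IDs for illustration.
	patchRequest = buildSemanticPatchRequest(
		baseApiUrl = "https://app.launchdarkly.com/api/v2",
		accessToken = "API_ACCESS_TOKEN",
		projectKey = "my-project",
		environmentKey = "production",
		key = "ben-test-flag",
		instruction = {
			kind: "removeValuesFromClause",
			ruleId: "rule-1",
			clauseId: "clause-1",
			values: [ "acme" ]
		}
	);

	writeOutput( patchRequest.requestUrl );
</cfscript>
```

Because the struct keys match the arguments of makeHttpRequestWithRetry(), each Gateway method could pass the result straight through with argumentCollection. The trade-off is one more level of indirection in exchange for not repeating the URL and headers three times.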

As you can see, this layer is really just responsible for configuring the data structures and authentication headers that then get passed down into the lowest layer, the HTTP layer:

```cfml
component
	output = false
	hint = "I provide low-level HTTP methods for calling the LaunchDarkly HTTP REST API."
	{

	/**
	* I make an HTTP request with the given configuration, returning the parsed file
	* content on success or throwing an error on failure.
	*/
	public any function makeHttpRequest(
		required string requestMethod,
		required string requestUrl,
		array headerParams = [],
		array urlParams = [],
		any body = nullValue(),
		numeric timeout = 5
		) {

		http
			result = "local.httpResponse"
			method = requestMethod
			url = requestUrl
			getAsBinary = "yes"
			charset = "utf-8"
			timeout = timeout
			{

			for ( var headerParam in headerParams ) {

				httpParam
					type = "header"
					name = headerParam.name
					value = headerParam.value
				;

			}

			for ( var urlParam in urlParams ) {

				httpParam
					type = "url"
					name = urlParam.name
					value = urlParam.value
				;

			}

			if ( arguments.keyExists( "body" ) ) {

				httpParam
					type = "body"
					value = body
				;

			}

		}

		var fileContent = getFileContentAsString( httpResponse.fileContent );

		if ( isErrorStatusCode( httpResponse.statusCode ) ) {

			throwErrorResponseError(
				requestUrl = requestUrl,
				statusCode = httpResponse.statusCode,
				fileContent = fileContent
			);

		}

		if ( ! fileContent.len() ) {

			return( "" );

		}

		try {

			return( deserializeJson( fileContent ) );

		} catch ( any error ) {

			throwJsonParseError(
				requestUrl = requestUrl,
				statusCode = httpResponse.statusCode,
				fileContent = fileContent,
				error = error
			);

		}

	}


	/**
	* I make an HTTP request with the given configuration, returning the parsed file
	* content on success or throwing an error on failure. Failed requests will be retried
	* a number of times if they fail with a status code that is considered safe to retry.
	*/
	public any function makeHttpRequestWithRetry(
		required string requestMethod,
		required string requestUrl,
		array headerParams = [],
		array urlParams = [],
		any body = nullValue(),
		numeric timeout = 5
		) {

		// Rather than relying on the maths to do exponential back-off calculations, this
		// collection provides an explicit set of back-off values (in milliseconds). This
		// collection also doubles as the number of attempts that we should execute
		// against the underlying HTTP API.
		// --
		// NOTE: Some randomness will be applied to these values at execution time.
		var backoffDurations = [
			100,
			200,
			400,
			800,
			1600,
			3200,
			0 // Indicates end of retry attempts.
		];

		for ( var backoffDuration in backoffDurations ) {

			try {

				return( makeHttpRequest( argumentCollection = arguments ) );

			} catch ( "LaunchDarklyRestError.ErrorStatusCode" error ) {

				// Extract the HTTP status code from the error type - it is the last
				// value in the dot-delimited error type list.
				var statusCode = val( error.type.listLast( "." ) );

				if ( backoffDuration && isRetriableStatusCode( statusCode ) ) {

					sleep( applyJitter( backoffDuration ) );

				} else {

					rethrow;

				}

			}

		}

	}

	// ---
	// PRIVATE METHODS.
	// ---

	/**
	* I apply a 20% jitter to a given back-off value in order to ensure some kind of
	* randomness to the collection of requests against the target. This is a small effort
	* to prevent the thundering herd problem for the target.
	*/
	private numeric function applyJitter( required numeric value ) {

		// Create a jitter of +/- 20%.
		var jitter = ( randRange( 80, 120 ) / 100 );

		return( fix( value * jitter ) );

	}


	/**
	* I return the given fileContent value as a string.
	*
	* NOTE: Even though we always ask ColdFusion to return a Binary value in the HTTP
	* response object, the Type is only guaranteed if the request comes back properly.
	* If something goes terribly wrong (such as a "Connection Failure"), the fileContent
	* will still be returned as a simple string.
	*/
	private string function getFileContentAsString( required any fileContent ) {

		if ( isBinary( fileContent ) ) {

			return( charsetEncode( fileContent, "utf-8" ) );

		} else {

			return( fileContent );

		}

	}


	/**
	* I determine if the given HTTP status code is an error response. Anything outside
	* the 2xx range is going to be considered an error response.
	*/
	private boolean function isErrorStatusCode( required string statusCode ) {

		return( ! isSuccessStatusCode( statusCode ) );

	}


	/**
	* I determine if the given HTTP status code is considered safe to retry.
	*/
	private boolean function isRetriableStatusCode( required string statusCode ) {

		return(
			( statusCode == 0 ) || // Connection Failure.
			( statusCode == 408 ) || // Request Timeout.
			( statusCode == 500 ) || // Server error.
			( statusCode == 502 ) || // Bad Gateway.
			( statusCode == 503 ) || // Service Unavailable.
			( statusCode == 504 ) // Gateway Timeout.
		);

	}


	/**
	* I determine if the given HTTP status code is a success response. Anything in the
	* 2xx range is going to be considered a success response.
	*/
	private boolean function isSuccessStatusCode( required string statusCode ) {

		// Anchor the pattern so that only a leading 2xx code (e.g., "200 OK") matches.
		return( !! statusCode.reFind( "^2\d\d" ) );

	}


	/**
	* I throw an error for when the HTTP response comes back with a non-2xx status code.
	*/
	private void function throwErrorResponseError(
		required string requestUrl,
		required string statusCode,
		required string fileContent
		) {

		var statusCodeNumber = statusCode.findNoCase( "Connection Failure" )
			? 0
			: val( statusCode )
		;

		throw(
			type = "LaunchDarklyRestError.ErrorStatusCode.#statusCodeNumber#",
			message = "LaunchDarkly HTTP REST API returned a non-2xx status code.",
			extendedInfo = serializeJson( arguments )
		);

	}


	/**
	* I throw an error for when the HTTP response payload could not be parsed as JSON.
	*/
	private void function throwJsonParseError(
		required string requestUrl,
		required string statusCode,
		required string fileContent,
		required struct error
		) {

		throw(
			type = "LaunchDarklyRestError.JsonParse",
			message = "LaunchDarkly HTTP REST API returned payload that could not be parsed.",
			extendedInfo = serializeJson( arguments )
		);

	}

}
```
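To get a sense of how long a request can block when every attempt fails with a retriable status code, here's a standalone sketch that copies applyJitter() and the back-off schedule from makeHttpRequestWithRetry(). Because the trailing 0 ends the retries, the schedule allows seven attempts and at most six sleeps. The worst case (every sleep jittered up by the full 20%) is computed with integer math so that floating-point rounding can't skew the total:

```cfml
<cfscript>
	// Copied from LaunchDarklyRestHttpClient.applyJitter(): scale the value by a
	// random factor between 0.80 and 1.20, then truncate to whole milliseconds.
	numeric function applyJitter( required numeric value ) {
		var jitter = ( randRange( 80, 120 ) / 100 );
		return( fix( value * jitter ) );
	}

	// The explicit back-off schedule from makeHttpRequestWithRetry(). The trailing 0
	// ends the retries: seven attempts in total, with at most six sleeps between them.
	backoffDurations = [ 100, 200, 400, 800, 1600, 3200, 0 ];

	// Worst case: every sleep is jittered up by the full 20%. Integer math
	// ( x * 120 / 100 ) keeps the sum exact for these values.
	maxTotalSleep = 0;

	for ( duration in backoffDurations ) {
		maxTotalSleep += fix( duration * 120 / 100 );
	}

	writeOutput( "Worst-case total sleep: #maxTotalSleep#ms<br />" );

	// One randomized schedule, as the retry loop might experience it.
	writeOutput(
		serializeJson(
			backoffDurations.map(
				function( duration ) {
					return( applyJitter( duration ) );
				}
			)
		)
	);
</cfscript>
```

So a request that keeps failing with a retriable status code sleeps for at most 7,560 milliseconds in total before the error is rethrown. That figure excludes the time spent in the requests themselves, each of which can take up to the timeout (5 seconds by default).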

Wow - there's a lot of work that goes into adding and removing some values from property-based targeting. This goes to show you how very nice the LaunchDarkly administrative dashboard is; that is to say, how much complexity it hides away from you (as long as you are OK to interact with it manually).

This was a fun exploration of the LaunchDarkly REST API in ColdFusion. I feel like the layers / separation of concerns that I came up with mostly make sense. Though, of course, a developer wouldn't want to wire everything together by hand every time. Instead, I'd likely want to provide a high-level factory-type function that does the instantiation and dependency-injection behind the scenes.
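A minimal sketch of such a factory might look like the following. It assumes the three components from this post live in the same directory as the calling template and that the same environment variables as config.cfm are set; the function name createLaunchDarklyService() is hypothetical:

```cfml
<cfscript>
	/**
	* HYPOTHETICAL factory: I instantiate and wire the three layers together so that
	* calling code only ever deals with the Service. The environment variable names
	* are the same ones used in config.cfm.
	*/
	any function createLaunchDarklyService() {
		var env = server.system.environment;

		// Lowest layer: raw HTTP, retries, and response parsing.
		var httpClient = new LaunchDarklyRestHttpClient();

		// Middle layer: authentication and semantic JSON patching.
		var gateway = new LaunchDarklyRestGateway(
			accessToken = env.LAUNCH_DARKLY_REST_ACCESS_TOKEN,
			launchDarklyRestHttpClient = httpClient
		);

		// Top layer: application-specific subdomain targeting.
		return(
			new LaunchDarklyService(
				projectKey = env.LAUNCH_DARKLY_REST_PROJECT_KEY,
				environmentKey = env.LAUNCH_DARKLY_REST_ENVIRONMENT_KEY,
				launchDarklyRestGateway = gateway
			)
		);
	}

	launchDarklyService = createLaunchDarklyService();
</cfscript>
```

Since the HTTP client and Gateway never escape the function, calling code can't accidentally depend on them, which keeps the lower layers free to change.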
