Proxying Amazon AWS S3 Pre-Signed-URL Uploads Using Netlify Functions

A couple of months ago, I looked at proxying Amazon S3 pre-signed URL uploads using Lucee CFML 5.3.6.61. This was a topic of interest because InVision has enterprise customers that block all direct access to Amazon AWS (for security purposes); and, the only way we can upload their files to S3 is by "hiding" the AWS URLs behind a proxy. Of course, proxying an upload through our own servers is sub-optimal because it increases the load that those servers have to handle. So, what if we could proxy the upload through something that scales on its own, like an AWS Lambda Function? We can't expose AWS URLs directly (as they are being blocked by some of our customers); but, Netlify Functions run on AWS Lambda while being served from Netlify's own domain. So, maybe we can use Netlify to proxy Amazon AWS S3 pre-signed URLs.

To re-explore this concept, I went into the area of my ColdFusion application that produces Amazon AWS pre-signed URLs; and, instead of returning those URLs directly to the browser, I intercept the response and augment the URLs to point to my Netlify Lambda Function:

```cfml
<cfscript>

	// ... truncated code ...

	rc.apiResponse.data = getPreSignedUrls();

	// Intercept the response that contains our Amazon AWS S3 pre-signed URLs and
	// proxy them through a Netlify Lambda Function end-point.
	if ( uploadProxyService.isFeatureEnabled() ) {

		for ( var entry in rc.apiResponse.data ) {

			entry.signedurlOriginal = entry.signedurl;

			// Overwrite the pre-signed URL - the UploadProxyService is going to HEX-
			// encode the URL so that we don't have to worry about URL-encoding special
			// characters.
			entry.signedurl = ( "https://bennadel-s3-proxy.netlify.app/.netlify/functions/upload-to-s3?remoteUrl=" & uploadProxyService.encodeRemoteUrl( entry.signedurl ) );

		}

	}

	// ... truncated code ...

</cfscript>
```

As you can see, we're taking the pre-signed URL, signedurl, and replacing it with a URL that proxies through a small Netlify site, bennadel-s3-proxy.netlify.app. This Netlify site has a single Lambda Function, upload-to-s3, that uses the Axios library to take whatever it is sent and PUT that data up to the remoteUrl.
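The CFML doesn't show how uploadProxyService.encodeRemoteUrl() works. Since the Netlify Function decodes the value with Buffer.from( value, "hex" ), a JavaScript equivalent probably looks something like the following sketch. It assumes the service hex-encodes the UTF-8 bytes of the URL; encodeRemoteUrl(), decodeRemoteUrl(), and toProxyUrl() are illustrative names, not code from the application.

```javascript
const { Buffer } = require("buffer");

// The Netlify end-point from the CFML code above.
const PROXY_ENDPOINT =
	"https://bennadel-s3-proxy.netlify.app/.netlify/functions/upload-to-s3";

// Hypothetical: hex-encode the UTF-8 bytes of the pre-signed URL. Hex output only
// contains [0-9a-f], so it needs no percent-encoding inside a query string.
function encodeRemoteUrl(signedUrl) {
	return Buffer.from(signedUrl, "utf8").toString("hex");
}

// Hypothetical mirror of the decoding step performed by the Netlify Function.
function decodeRemoteUrl(hexValue) {
	return Buffer.from(hexValue, "hex").toString("utf8");
}

// Hypothetical: build the proxied URL that is returned to the browser.
function toProxyUrl(signedUrl) {
	return `${PROXY_ENDPOINT}?remoteUrl=${encodeRemoteUrl(signedUrl)}`;
}
```

The trade-off of hex is size: every byte of the original URL becomes two characters, so the proxied URL is roughly twice as long as the pre-signed one.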

When performing this Axios pass-through, I want to be sure to:

- Pass-through the correct content-type.
- Pass-through the binary data (the main goal).
- Pass-back the correct status-code.
- Pass-back any HTTP headers returned from Amazon AWS.
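The two "pass-back" items on that list boil down to a small mapping from the S3 response to the Netlify Function's return value. One wrinkle worth handling: Node (and therefore Axios on the server) reports response header names in lowercase, while hand-written CORS headers are usually capitalized, so a plain object spread can end up with both "access-control-allow-origin" and "Access-Control-Allow-Origin" keys. Here's a minimal sketch, using hypothetical lowercaseKeys() and buildPassBackResponse() helpers, that lowercases both header maps before merging so that the CORS values reliably win:

```javascript
// Hypothetical helper: copy a header map with every header name lowercased.
// HTTP header names are case-insensitive, so this makes collisions explicit.
function lowercaseKeys(headers) {
	const result = {};
	for (const [name, value] of Object.entries(headers || {})) {
		result[name.toLowerCase()] = value;
	}
	return result;
}

// Hypothetical helper: build the Netlify Function response from the upstream
// (S3) status code and headers, applying the CORS headers last.
function buildPassBackResponse(upstreamStatus, upstreamHeaders, corsHeaders, body = "") {
	return {
		// Pass-back the exact status code that S3 returned (e.g. 200 or 403).
		statusCode: upstreamStatus,
		// Spread S3's headers first so the CORS headers override any duplicates.
		headers: {
			...lowercaseKeys(upstreamHeaders),
			...lowercaseKeys(corsHeaders)
		},
		// Netlify expects the response body to be a string.
		body: (typeof body === "string") ? body : JSON.stringify(body)
	};
}
```

The same helper could also be fed error.response.status and error.response.headers in a catch block, so that an S3 rejection (such as the 403 returned for an invalid or expired signature) reaches the browser as S3's own status code rather than a generic 500.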

Now, I actually tried to do this a few months ago. But, at the time, Netlify Functions couldn't handle the content-type, application/octet-stream. Fortunately, the Netlify team quickly took steps to fix this and just recently announced an update in which application/octet-stream content is now properly encoded. This finally makes it possible to use Netlify Lambda Functions as an S3 proxy.
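Depending on how Netlify delivers it, the request body may show up as a Buffer, as a Base64-encoded string (flagged by event.isBase64Encoded), or as plain text; binary data has to be turned back into raw bytes before it is forwarded to S3. Here's a minimal sketch of that normalization step, pulled out into a hypothetical eventBodyToBuffer() helper so that it can be exercised without deploying anything:

```javascript
const { Buffer } = require("buffer");

// Hypothetical helper: normalize a Netlify Function event body into a Buffer
// that can be handed to Axios as the PUT payload.
function eventBodyToBuffer(event) {
	// Already raw bytes - pass them through untouched.
	if (Buffer.isBuffer(event.body)) {
		return event.body;
	}
	// Binary payloads arrive as Base64 text (isBase64Encoded === true); anything
	// else is treated as UTF-8 text. A missing body becomes an empty Buffer.
	return Buffer.from(event.body || "", event.isBase64Encoded ? "base64" : "utf8");
}

// Usage: round-trip a few arbitrary (non-UTF-8) bytes through a Base64 event.
const original = Buffer.from([0x00, 0xff, 0x10, 0x80]);
const sampleEvent = { body: original.toString("base64"), isBase64Encoded: true };
console.log(eventBodyToBuffer(sampleEvent).equals(original)); // true
```

Decoding those same bytes as UTF-8 instead would silently replace the invalid sequences, which is why a binary upload only survives the trip when the Base64 flag is honored.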

Here's the Netlify Function that I created. Other than dealing with the CORS (Cross-Origin Resource Sharing) complexities, all it really does is make an Axios call that passes the data along to the pre-signed URL, and then echo the Axios response.

CAUTION: I've barely ever used Axios; and, this is only like the second time I've used a Netlify Function. So, please take the following as an experiment and not as an exhaustive look at how something like this should be implemented.

```javascript
// Using the dotenv package allows us to have local-versions of our ENV variables in a
// .env file while still using different build-time ENV variables in production.
require( "dotenv" ).config();

// Require core node modules.
var Buffer = require( "buffer" ).Buffer;

// Require vendor modules.
var axios = require( "axios" ).default;

// ----------------------------------------------------------------------------------- //
// ----------------------------------------------------------------------------------- //

// I am the Netlify Function handler.
// --
// NOTE: The function is ASYNC allowing us to AWAIT asynchronous methods within.
exports.handler = async function handler( event, context ) {

	var corsHeaders = {
		"Access-Control-Allow-Origin" : process.env.NETLIFY_ACCESS_CONTROL_ALLOW_ORIGIN,
		"Access-Control-Allow-Headers": "Content-Type, Origin",
		"Access-Control-Allow-Methods": "OPTIONS, PUT"
	};

	// In the case of a CORS preflight check, just return the security headers early.
	if ( event.httpMethod === "OPTIONS" ) {

		return({
			statusCode: 200,
			headers: corsHeaders,
			body: JSON.stringify( corsHeaders )
		});

	}

	try {

		// Set up the PROXY configuration for our Axios request.
		var proxyHeaders = {
			"User-Agent": ( event.headers[ "user-agent" ] || "netlify-functions" ),
			"Content-Type": ( event.headers[ "content-type" ] || "application/octet-stream" )
		};

		// The remote-url is getting passed to this lambda function as a HEX-encoded
		// value so that we don't have to worry about URL-encoding special characters.
		var proxyUrl = Buffer
			.from( event.queryStringParameters.remoteUrl, "hex" )
			.toString( "utf8" )
		;

		// Netlify can pass the request body through as a String or a Buffer. As such, we
		// have to look at the instance-type to figure out how to generate a Buffer for
		// the subsequent Axios request.
		// --
		// NOTE: This behavior was recently changed in Netlify to make this possible.
		// Previously, this only worked for "image" content-types, but would break for
		// any payload that was of type "application/octet-stream".
		// --
		// Read More: https://community.netlify.com/t/changed-behavior-in-function-body-encoding/19000
		var proxyBody = ( event.body instanceof Buffer )
			? event.body
			: Buffer.from( ( event.body || "" ), ( event.isBase64Encoded ? "base64" : "utf8" ) )
		;

		var proxyResponse = await axios({
			method: "put",
			url: proxyUrl,
			headers: proxyHeaders,
			data: proxyBody
		});

		console.log({
			successData: proxyResponse.data,
			successStatus: proxyResponse.status,
			successStatusText: proxyResponse.statusText,
			successHeaders: proxyResponse.headers
		});

		return({
			statusCode: proxyResponse.status,
			headers: {
				...proxyResponse.headers,
				...corsHeaders
			},
			body: proxyResponse.data
		});

	} catch ( error ) {

		// NOTE: error.response only exists when S3 actually responded (with a non-2xx
		// status code). Network and configuration errors have no response object.
		console.error({
			errorMessage: error.message,
			errorData: ( error.response && error.response.data ),
			errorStatus: ( error.response && error.response.status ),
			errorHeaders: ( error.response && error.response.headers )
		});

		return({
			statusCode: 500,
			headers: {
				"Content-Type": "text/plain",
				...corsHeaders
			},
			body: "Server Error"
		});

	}

};
```

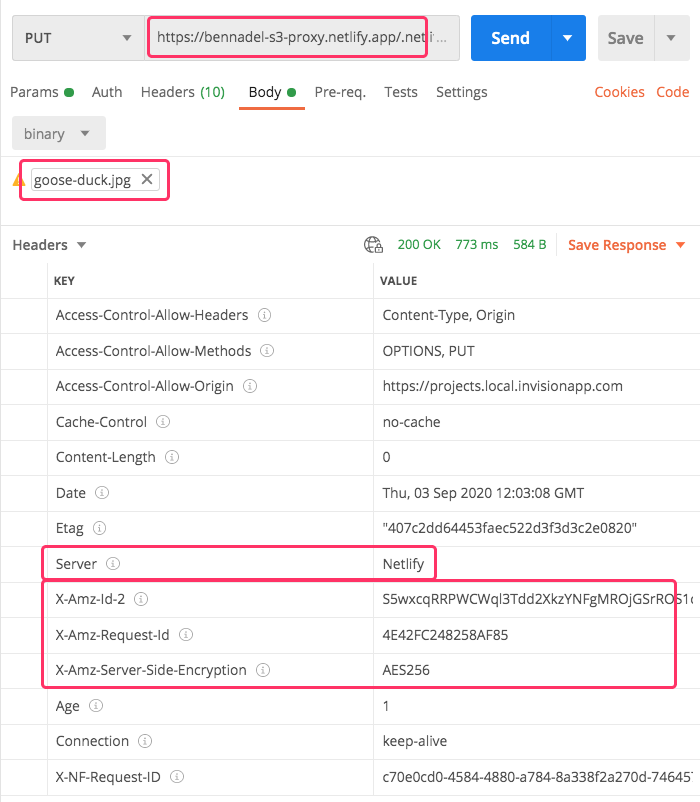

Now, if we open up Postman and try to hit this Netlify Lambda Function using one of the proxied URLs, we get the following output:

As you can see, we're getting a 200 OK response and we see that the server is Netlify; but, we can also see that the Amazon AWS headers have been included because our Netlify Function is just echoing whatever it receives from S3.
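If you'd rather script that check than click through Postman, the same PUT can be sent from Node. This is a sketch under a few assumptions: Node 18+ for the built-in fetch(), a proxied URL produced by the CFML code above, and a hypothetical uploadViaProxy() helper whose fetch implementation can be swapped out for testing:

```javascript
// Hypothetical helper: PUT a payload to a proxied pre-signed URL and report the
// parts of the response worth checking. Assumes Node 18+ (global fetch).
async function uploadViaProxy(proxyUrl, data, contentType = "application/octet-stream", fetchImpl = globalThis.fetch) {
	const response = await fetchImpl(proxyUrl, {
		method: "PUT",
		headers: { "Content-Type": contentType },
		body: data
	});

	return {
		status: response.status,
		// The "server" header should identify Netlify ...
		server: response.headers.get("server"),
		// ... while S3-specific headers, such as the ETag, are echoed by the proxy.
		etag: response.headers.get("etag")
	};
}
```

Keep in mind that if the pre-signed URL was generated for a specific content-type, the PUT has to send that same Content-Type value, or S3 will reject the signature.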

The benefit of being able to proxy pre-signed URLs through a Netlify Function is that it will scale independently of the ColdFusion application that is generating the Amazon AWS pre-signed URLs. This is exactly the point of so-called "microservices" - that we should be able to independently scale the features that have unique scaling needs!

Want to use code from this post? Check out the license.

Reader Comments

I'm not sure it would work in this case, but don't forget that if you need to proxy URLs, you do not need to write a function on Netlify, you can do it with their redirects support:

https://www.raymondcamden.com/2020/06/10/testing-netlifys-proxy-support-for-api-hiding

@Ray,

OOOH RIGHT! I totally forgot that this feature even exists. I'll have to try it out because I'm really not adding any value by having to touch the request - so, it's possible that the blind proxy would be just what the doctor ordered.

Outstanding suggestion!