Proxying Amazon AWS S3 Pre-Signed-URL Uploads Using CFHTTP And Lucee CFML 5.3.6.61

At InVision, when a user goes to upload a screen for an interactive prototype, we have the browser upload the file directly to S3 using a pre-signed URL. We've been using this technique for years and it's worked perfectly well. However, we now have an Enterprise client whose network is blocking all calls to Amazon AWS. As such, they are unable to use pre-signed URLs for their file uploads. To try and get around this issue, I wanted to see what it would take to proxy uploads to Amazon AWS S3 through our application using the CFHTTP tag and Lucee CFML 5.3.6.61.

ASIDE: This isn't the first time that I've looked at proxying files through ColdFusion. A few years ago, I created a fun little project called "Sticky CDN", which was a content-delivery network (CDN) designed for your local development environment. It was intended to cache remote files on the local file-system. My current article uses the foundational concepts from that CDN project.

To explore this concept, the first thing that I did was go into the ColdFusion code that generates the S3 pre-signed (query-string authenticated) URL, intercept the response payload, and then redirect the URLs through my local development server. In the following truncated snippet, the "data" payload contains a collection of pre-signed URLs that the browser will use when uploading the files directly to S3:

<cfscript>

	// ... truncated output ...

	rc.apiResponse.data = getPreSignedUrls();

	// Intercept the response that contains our Amazon AWS S3 pre-signed URLs and
	// proxy them through our Lucee CFML proxy page.
	for ( var entry in rc.apiResponse.data ) {

		// In order to side-step any possible issues with URL-encoding, let's take
		// the remote URL and just hex-encode it.
		var hexEncodedUrl = binaryEncode( charsetDecode( entry.signedurl, "utf-8" ), "hex" );

		// Overwrite the pre-signed URL - mwaaa ha ha ha ha ha ha!
		entry.signedurl = ( "https://projects.local.invisionapp.com/scribble/s3-proxy/index.cfm?remoteUrl=" & hexEncodedUrl );

	}

	// ... truncated output ...

</cfscript>
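The hex trick works because hex output only ever contains the characters 0-9 and a-f, so a pre-signed URL (with its own percent-encoded signature and &-delimited parameters) can ride inside a single query-string value without any escaping. Here is a minimal sketch of the same round trip in TypeScript rather than CFML; PROXY_ENDPOINT is a hypothetical placeholder, and emitting lower-case hex is an arbitrary choice:

```typescript
// Hypothetical proxy endpoint; the post points at a local development URL instead.
const PROXY_ENDPOINT = "https://proxy.example.com/s3-proxy/index.cfm";

// UTF-8 encode the string, then render each byte as two lower-case hex digits.
// The result contains only [0-9a-f], so it never needs URL-encoding.
function hexEncode(input: string): string {
  const bytes = new TextEncoder().encode(input);
  return Array.from(bytes, (byte) => byte.toString(16).padStart(2, "0")).join("");
}

// Reverse of hexEncode(): parse each pair of hex digits into a byte, then
// decode the bytes as UTF-8. Malformed input is rejected rather than guessed at.
function hexDecode(hexInput: string): string {
  if (hexInput.length % 2 !== 0 || /[^0-9a-fA-F]/.test(hexInput)) {
    throw new Error("remoteUrl is not a valid hex string");
  }
  const bytes = new Uint8Array(hexInput.length / 2);
  for (let i = 0; i < bytes.length; i++) {
    bytes[i] = parseInt(hexInput.slice(i * 2, i * 2 + 2), 16);
  }
  return new TextDecoder("utf-8").decode(bytes);
}

// Point a pre-signed URL at the proxy, carrying the original URL as hex.
function toProxyUrl(signedUrl: string, proxyEndpoint: string = PROXY_ENDPOINT): string {
  return `${proxyEndpoint}?remoteUrl=${hexEncode(signedUrl)}`;
}
```

On the receiving side, hexDecode() plays the same role as the CFML hexDecode() helper in the proxy code: it accepts upper- or lower-case digits and fails loudly on malformed input instead of producing a garbled URL.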

As you can see, I'm redirecting the uploads to my experimental "s3-proxy" folder locally. And, I'm passing in the original pre-signed URL, hex-encoded, as the remoteUrl. Now, in the "s3-proxy" folder, all I'm going to do is take the incoming file upload (binary) and pass it on to the given remoteUrl.

When performing this pass-through, I want to be sure to:

- Pass-through the correct content-type.
- Pass-through the binary data (the main goal).
- Pass-back the correct status-code.
- Pass-back any HTTP headers returned from Amazon AWS.
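The two "pass-through" rules can be expressed as a small pure function. This is a TypeScript sketch, not the post's CFML: the IncomingUpload and OutgoingUpload shapes are hypothetical, and header names are assumed to arrive lower-cased (as Node.js delivers them). The fallback values mirror the ones used in the CFML proxy code. Forwarding the browser's Content-Type matters because, if the pre-signed URL was generated with a Content-Type, S3 can reject an upload whose header does not match.

```typescript
// Hypothetical shape of the incoming browser request, roughly what
// getHttpRequestData() exposes in CFML: method, lower-cased headers, raw body.
interface IncomingUpload {
  method: string;
  headers: Record<string, string | undefined>;
  body: Uint8Array;
}

// Hypothetical shape of the request forwarded on to S3.
interface OutgoingUpload {
  method: string;
  headers: Record<string, string>;
  body: Uint8Array;
}

// Build the request to forward: same method, same raw bytes, and the
// Content-Type / User-Agent headers with the same fallbacks as the CFML code.
function buildForwardRequest(incoming: IncomingUpload): OutgoingUpload {
  return {
    method: incoming.method,
    headers: {
      "Content-Type": incoming.headers["content-type"] ?? "application/octet-stream",
      "User-Agent": incoming.headers["user-agent"] ?? "Lucee CFML S3-Proxy Agent",
    },
    // Pass the bytes through untouched; converting them to text could corrupt them.
    body: incoming.body,
  };
}
```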

When the browser uploads the file to my ColdFusion proxy, it is going to do so by sending the file as the request body. As such, I can access the incoming file using the getHttpRequestData() function:

<cfscript>

	// For this exploration, we are expecting the Remote URL to come in as a HEX-encoded
	// value (so that we don't have to worry about any possible URL-encoding issues).
	param name="url.remoteUrl" type="string";

	// ------------------------------------------------------------------------------- //
	// ------------------------------------------------------------------------------- //

	// The browser is going to "PUT" the file to the Lucee CFML server as the BODY of the
	// incoming request. As such, the "content" within our HTTP Request Data should be a
	// binary payload.
	requestData = getHttpRequestData();
	proxyHeaders = requestData.headers;
	proxyBinary = requestData.content;

	// Proxy the PUT of the file to Amazon AWS S3.
	http
		result = "s3Response"
		method = requestData.method
		url = hexDecode( url.remoteUrl )
		getAsBinary = "yes"
		timeout = 10
		{

		httpparam
			type = "header"
			name = "Content-Type"
			value = ( proxyHeaders[ "content-type" ] ?: "application/octet-stream" )
		;
		httpparam
			type = "header"
			name = "User-Agent"
			value = ( proxyHeaders[ "user-agent" ] ?: "Lucee CFML S3-Proxy Agent" )
		;
		httpparam
			type = "body"
			value = proxyBinary
		;

	}

	// Proxy the Amazon AWS S3 response status-code.
	header
		statusCode = s3Response.status_code
		statusText = s3Response.status_text
	;

	// Proxy the Amazon AWS S3 response HTTP headers.
	loop
		struct = flattenHeaders( s3Response.responseHeader )
		index = "key"
		item = "value"
		{

		// Skip over any status-code-related headers as these have already been set using
		// the CFHeader tag above.
		if ( ( key == "status_code" ) || ( key == "explanation" ) ) {
			continue;
		}

		header
			name = key
			value = value
		;

	}

	// Proxy the Amazon AWS S3 response content.
	// --
	// NOTE: If the connection to S3 failed (such as with a timeout or an SSL error), the
	// response will not be a binary object, even though we requested one. As such, we
	// have to normalize the content to a binary value.
	content
		type = s3Response.mimeType
		variable = ensureBinaryContent( s3Response.fileContent )
	;

	// ------------------------------------------------------------------------------- //
	// ------------------------------------------------------------------------------- //

	/**
	* I ensure that the given value is a Binary value.
	*
	* @contentInput I am the value being inspected, and coerced to Binary as needed.
	*/
	public binary function ensureBinaryContent( required any contentInput ) {

		if ( isBinary( contentInput ) ) {
			return( contentInput );
		}

		// If the content isn't binary, it's probably something like "Connection Failure"
		// or "Could not determine mime-type". In that case, we're just going to convert
		// the String to a byte-array.
		return( stringToBinary( contentInput ) );

	}

	/**
	* I ensure that the HTTP headers Struct is a collection of SIMPLE VALUES. HTTP
	* headers don't have to be simple - each header value can be a Struct of simple
	* values. In such a case, the value will be normalized into a comma-delimited list
	* of simple values.
	*
	* Read More: https://www.bennadel.com/blog/2668-normalizing-http-header-values-using-cfhttp-and-coldfusion.htm
	*
	* @responseHeaders I am the HTTP headers being flattened / normalized.
	*/
	public struct function flattenHeaders( required struct responseHeaders ) {

		var flattenedHeaders = responseHeaders.map(
			( key, value ) => {

				if ( isSimpleValue( value ) ) {
					return( value );
				} else {
					return( structValueList( value ) );
				}

			}
		);

		return( flattenedHeaders );

	}

	/**
	* I convert the given hex-encoded value back into a UTF-8 string.
	*
	* @hexInput I am the hex-encoded value being decoded.
	*/
	public string function hexDecode( required string hexInput ) {

		return( charsetEncode( binaryDecode( hexInput, "hex" ), "utf-8" ) );

	}

	/**
	* I convert the given string to a byte-array.
	*
	* @input I am the string value being converted to binary.
	*/
	public binary function stringToBinary( required string input ) {

		return( charsetDecode( input, "utf-8" ) );

	}

	/**
	* I return a comma-delimited list of values in the given struct.
	*
	* @valueMap I am the struct being inspected.
	*/
	public string function structValueList( required struct valueMap ) {

		var values = [];

		for ( var key in valueMap ) {
			values.append( valueMap[ key ] );
		}

		return( values.toList( ", " ) );

	}

</cfscript>

As you can see, there's not too much going on here. I'm just using the CFHTTP tag to replay the original pre-signed URL request, forwarding the same HTTP method, Content-Type, and body. Then, I'm taking the response from Amazon AWS S3 and I'm doing my best to return the HTTP headers and response content that S3 gives me.
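The ensureBinaryContent() fallback is what keeps the proxy from choking on a failed connection: on success CFHTTP hands back bytes, but on failure the content can be a plain string. The same idea sketched in TypeScript, where the ResponseContent type is an assumption standing in for CFHTTP's fileContent:

```typescript
// Assumed stand-in for CFHTTP's fileContent: raw bytes normally, but a plain
// string (such as an error message) when the connection itself failed.
type ResponseContent = Uint8Array | string;

// Bytes pass through untouched; strings are converted to their UTF-8 bytes,
// so the proxy can always write a binary response body.
function ensureBinaryContent(content: ResponseContent): Uint8Array {
  if (content instanceof Uint8Array) {
    return content;
  }
  return new TextEncoder().encode(content);
}
```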

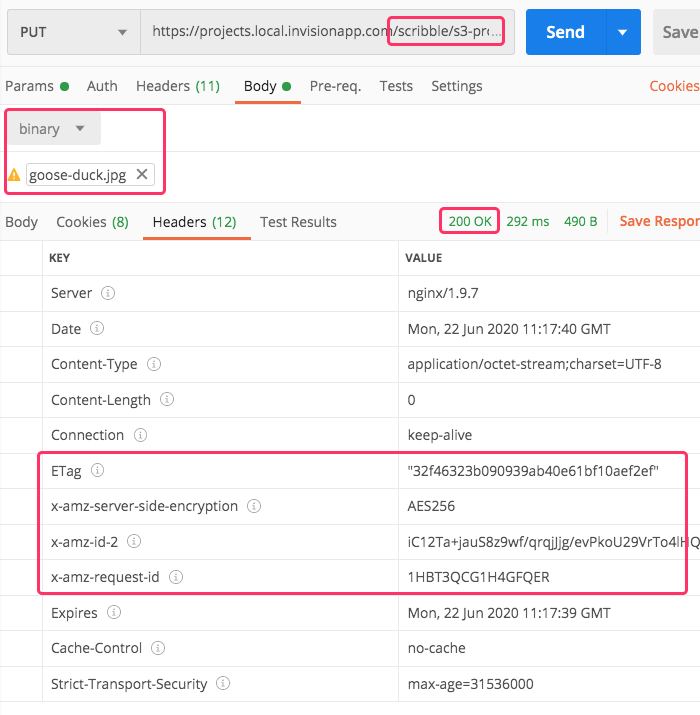

Now, if I open up Postman and try to upload a picture of my dogo through my local development proxy in Lucee CFML, I get the following output:

As you can see, I'm getting a 200 OK response. And, if we look at the HTTP Headers returned to Postman, you can see that I've successfully echoed the AWS Headers returned from the S3 PUT operation.
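Echoing those headers depends on two steps in the CFML code: flattenHeaders() turns any multi-value header into a comma-delimited string, and the loop skips the status-related keys. A TypeScript sketch that folds both into one function, assuming repeated headers arrive as an array of strings (the CFML version sees a struct) and assuming the same two status keys are the only ones to skip:

```typescript
// A header value is either a single string or, when a header repeats,
// a list of strings.
type HeaderValue = string | string[];

// Keys that describe the status line rather than real HTTP headers; they are
// skipped because the status is set separately.
const STATUS_KEYS = new Set(["status_code", "explanation"]);

// Flatten every header to one comma-delimited string and drop the
// status-related keys, leaving headers that are safe to echo back.
function flattenHeaders(responseHeaders: Record<string, HeaderValue>): Record<string, string> {
  const flattened: Record<string, string> = {};
  for (const [key, value] of Object.entries(responseHeaders)) {
    if (STATUS_KEYS.has(key.toLowerCase())) {
      continue;
    }
    flattened[key] = Array.isArray(value) ? value.join(", ") : value;
  }
  return flattened;
}
```

The lower-casing in the key check stands in for CFML's case-insensitive string comparison.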

Ultimately, we'd love for the browser to upload directly to S3. Doing so removes load from our servers; and, removes our application as a potential point-of-failure in the upload mechanics. As such, it's unfortunate to have to proxy an upload to S3. That said, it's pretty cool that the CFHTTP tag and Lucee CFML make implementing a proxy fairly painless.

Epilogue on Security

If I were to deploy this to a production server, I'd want to lock the proxy end-point down in some way. Right now, it just generically proxies any HTTP request; which doesn't necessarily open us up to a "security threat" per se but, could allow people to send load / traffic through our servers, which we obviously don't want (and which could even have legal implications).

Locking it down could be as simple as ensuring that the pre-signed URL, in the url.remoteUrl parameter, points to the customer's S3 bucket.
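A minimal sketch of that check in TypeScript, meant to run against the decoded remoteUrl before anything is forwarded. It assumes virtual-hosted-style pre-signed URLs (bucket.s3.amazonaws.com or bucket.s3.region.amazonaws.com) and a hypothetical bucket name; path-style URLs and dash-style regional hosts would need their own rules. Pinning the host this way also keeps the endpoint from being pointed at arbitrary hosts, including internal addresses.

```typescript
// Hypothetical bucket name; a real deployment would read this from configuration.
const ALLOWED_BUCKET = "my-upload-bucket";

// Parse an absolute URL, returning null instead of throwing on bad input.
function parseUrl(value: string): URL | null {
  try {
    return new URL(value);
  } catch {
    return null;
  }
}

// Allow only HTTPS, virtual-hosted-style URLs for the expected bucket:
//   <bucket>.s3.amazonaws.com  or  <bucket>.s3.<region>.amazonaws.com
function isAllowedRemoteUrl(remoteUrl: string, bucket: string = ALLOWED_BUCKET): boolean {
  const parsed = parseUrl(remoteUrl);
  if (parsed === null || parsed.protocol !== "https:") {
    return false;
  }
  // Reject embedded credentials and non-default ports outright.
  if (parsed.username !== "" || parsed.password !== "" || parsed.port !== "") {
    return false;
  }
  const host = parsed.hostname.toLowerCase();
  if (host === `${bucket}.s3.amazonaws.com`) {
    return true;
  }
  const prefix = `${bucket}.s3.`;
  const suffix = ".amazonaws.com";
  if (!host.startsWith(prefix) || !host.endsWith(suffix)) {
    return false;
  }
  // Whatever sits between the prefix and suffix must be a single region label.
  const region = host.slice(prefix.length, host.length - suffix.length);
  return /^[a-z0-9-]+$/.test(region);
}
```

In the CFML proxy, the equivalent check would sit right after hexDecode( url.remoteUrl ), returning an error status instead of making the CFHTTP call when it fails.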

Epilogue on Netlify Lambda Functions

My original idea for this was to implement the proxy using Netlify's Lambda Functions feature. This way, the uploads would still be off our servers and would be fronted by Netlify's global CDN. But, it seems that Netlify functions don't currently allow for binary data. It would have been pretty cool, though!

I was able to get it to work fairly easily using Axios in the development server for Netlify Lambda Functions (which happily provides binary payloads as a Buffer in Node.js). But, once deployed to Netlify's edge-network, said payloads started coming through as corrupted String values.
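The post does not say where the bytes were mangled. One common way this happens (an assumption here, not a diagnosis of Netlify) is a binary body being decoded as UTF-8 text somewhere along the way: byte sequences that are not valid UTF-8 are replaced with U+FFFD, and the original bytes are gone. This TypeScript sketch shows that loss, and why base64, which maps every byte onto plain ASCII, survives a string-only hop:

```typescript
// Simulate a hop that treats a binary body as UTF-8 text: decode the bytes
// into a string, then encode that string back into bytes.
function roundTripAsUtf8Text(bytes: Uint8Array): Uint8Array {
  const text = new TextDecoder("utf-8").decode(bytes);
  return new TextEncoder().encode(text);
}

// Base64 maps every byte onto plain ASCII, so it survives string-only transports.
function toBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary);
}

// Reverse of toBase64(): decode to a "binary string", then copy each char code into a byte.
function fromBase64(encoded: string): Uint8Array {
  const binary = atob(encoded);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return bytes;
}

// Byte-for-byte comparison of two buffers.
function sameBytes(a: Uint8Array, b: Uint8Array): boolean {
  return a.length === b.length && a.every((byte, i) => byte === b[i]);
}
```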


Reader Comments

@All,

One thing I ran into here was that if the connection fails, the status_code is reported as 0. Well, it seems that - at least in Chrome - if you send back a status code of 0, the request just hangs. As such, I've been using this:

This appears to allow the browser-request to at least complete.
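A guard of that kind might look like this TypeScript sketch. Substituting 502 Bad Gateway for a 0 status is an assumption, not necessarily what the comment's code did; any real 5xx status would let the browser's request finish with an error instead of hanging:

```typescript
// Status to report when the upstream request never produced an HTTP response.
// Assumption: 502 Bad Gateway; any valid 5xx code would serve the same purpose.
const UPSTREAM_FAILURE_STATUS = 502;

// A status of 0 means the connection to S3 failed. Replace it (and anything
// else outside the valid 100-599 range) with a real error code.
function normalizeStatusCode(upstreamStatus: number): number {
  if (!Number.isInteger(upstreamStatus) || upstreamStatus < 100 || upstreamStatus > 599) {
    return UPSTREAM_FAILURE_STATUS;
  }
  return upstreamStatus;
}
```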

@All,

A quick follow-up on this. As I mentioned in this post, I had originally tried to do this with Netlify Functions; but, at the time, Netlify functions didn't properly handle application/octet-stream content. Well, a few weeks ago, they fixed this issue, which means that the timing was right to experiment with using Netlify: www.bennadel.com/blog/3887-proxying-amazon-aws-s3-pre-signed-url-uploads-using-netlify-functions.htm

It's the same basic idea; only, I'm using a Netlify Lambda Function + Axios to perform the proxy rather than Lucee CFML and CFHttp. Everything else is roughly equivalent.