Creating asyncAll(), asyncSettled(), And asyncRace() Functions Using runAsync() And Parallel Iteration In Lucee 5.3.2.77

The other day, I was delighted to discover that Lucee 5.3.2.77 supports asynchronous iteration of collections, including "each", "map", and "filter" operations. Then, in the comments to that post, Charlie Arehart mentioned that Lucee also supports the runAsync() function, which allows individual pieces of logic to be executed in an asynchronous thread (much like a Promise in JavaScript). As a fun learning experiment, I wanted to see if I could use these two pieces of functionality to create the higher-order asynchronous control-flow methods, asyncAll(), asyncSettled(), and asyncRace() in Lucee 5.3.2.77 CFML.

The concepts of all, settled, and race are taken directly from the world of Promises. More specifically, they deal with how a collection of Promises, as a "unit of work", should resolve or reject based on the resolution of each individual Promise. In this thought experiment, I'm going to try and reproduce those behaviors using the Future object that Lucee returns from the runAsync() function.

asyncAll() modeled on Promise.all()

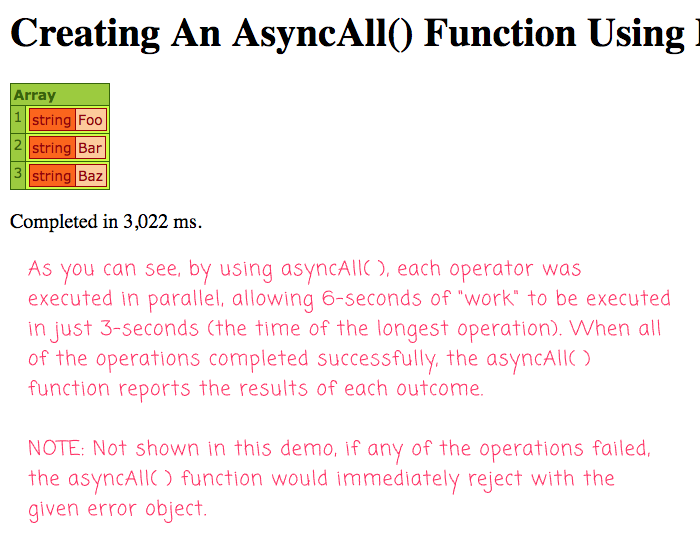

In the world of Promises, the Promise.all() method takes an array of Promise objects and either resolves with an array of all the resultant values or rejects with the error of the first Promise that fails. Recreating this behavior in ColdFusion (Lucee) is as simple as wrapping the parallel iteration functionality inside a Future:

<cfscript>

    startedAt = getTickCount();

    // The asyncAll() function executes all of the given functions in parallel and
    // returns a Future that resolves to an array in which each array index holds the
    // results of the operator that resided at the same array index of the input.
    // --
    // CAUTION: If any of the operators throws an error, that error immediately resolves
    // the future while the rest of the operators continue to execute in the background.
    future = asyncAll([
        function() {
            sleep( 3000 );
            return( "Foo" );
        },
        function() {
            sleep( 1000 );
            return( "Bar" );
        },
        function() {
            sleep( 2000 );
            return( "Baz" );
        }
    ]);

    writeOutput( "<h1> Creating An AsyncAll() Function Using Parallel Threads </h1>" );
    writeDump( future.get() );
    writeOutput( "<p> Completed in #numberFormat( getTickCount() - startedAt )# ms. </p> ");

    // ------------------------------------------------------------------------------- //
    // ------------------------------------------------------------------------------- //

    /**
    * I take an array of operators, execute them asynchronously in parallel, and return a
    * future that resolves to an array of ordered results (which maps to the order of the
    * operators).
    *
    * @operators I am the collection of Functions being executed in parallel.
    * @output false
    */
    function asyncAll( required array operators ) {

        var future = runAsync(
            function() {

                // Internally to the asynchronous runAsync() callback, we can spawn
                // further threads to run our individual operators in parallel. Using
                // Lucee's parallel iteration, this becomes quite easy!
                // --
                // NOTE: If any of the operators throws an error, the error will
                // immediately bubble up and become the result of the parent Future.
                var results = operators.map(
                    function( operator ) {
                        return( operator() );
                    },
                    true // Iterate using parallel threads.
                );

                return( results );

            }
        );

        return( future );

    }

</cfscript>

The native expression of array.map() essentially implements the Promise.all() behavior right out of the box. Meaning, it gathers all of the results of the asynchronous iterations; or, it throws on the first iteration that fails. As such, all we have to do is take that behavior and wrap it inside a runAsync() call so that the outcome is captured in a Future.
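For comparison, here is a minimal TypeScript sketch of the Promise.all() behavior that asyncAll() mirrors: results keep the order of the inputs, and a single failure rejects the aggregate. The `delay()`, `failAfter()`, `demoAllSuccess()`, and `demoAllFailure()` names are hypothetical helpers introduced only for this illustration, and the timings are scaled down from the CFML demo.

```typescript
// Hypothetical helper: resolve with `value` after `ms` milliseconds.
function delay(ms: number, value: string): Promise<string> {
    return new Promise<string>((resolve) => setTimeout(() => resolve(value), ms));
}

// Hypothetical helper: reject with an Error after `ms` milliseconds.
function failAfter(ms: number, message: string): Promise<string> {
    return new Promise<string>((_resolve, reject) =>
        setTimeout(() => reject(new Error(message)), ms)
    );
}

// Results follow the order of the inputs, not the order of completion.
function demoAllSuccess(): Promise<string[]> {
    return Promise.all([delay(30, "Foo"), delay(10, "Bar"), delay(20, "Baz")]);
}

// The first failure rejects the aggregate; the slower work is not cancelled.
function demoAllFailure(): Promise<string> {
    return Promise.all([delay(30, "Foo"), failAfter(10, "Boom")]).then(
        () => "resolved",
        (error) => (error as Error).message
    );
}
```

As with the CFML version, the aggregate only reports the first failure; the remaining operations keep running in the background.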

If we run this Lucee CFML page in the browser, we get the following output:

asyncSettled() modeled on Promise.allSettled()

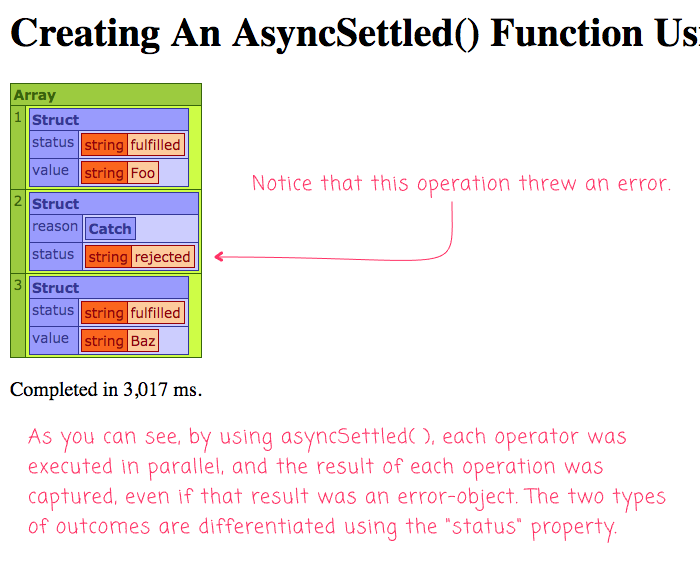

In the world of Promises, the Promise.allSettled() method takes an array of Promise objects and resolves with the non-pending outcome of each individual Promise. This includes Promises that both resolve and reject. In order to differentiate the two types of outcome, each individual result wraps the outcome and contains a status property.

A successful outcome looks like:

- status: "fulfilled"
- value: the resolved value.

And, a rejected outcome looks like:

- status: "rejected"
- reason: the rejection object.
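The two result shapes above can be written down as a small TypeScript union, together with a wrapper that captures an operator's outcome instead of letting its error escape. This is only an illustrative sketch: `Settled`, `settle()`, and `settleAll()` are hypothetical names, not part of Lucee or of the code in this post.

```typescript
// Hypothetical type describing the two outcome shapes.
type Settled =
    | { status: "fulfilled"; value: unknown }
    | { status: "rejected"; reason: unknown };

// Run one operator and report how it ended. Starting from Promise.resolve()
// means a synchronous throw inside the operator is captured as well.
function settle(operator: () => unknown): Promise<Settled> {
    return Promise.resolve()
        .then(() => operator())
        .then(
            (value): Settled => ({ status: "fulfilled", value }),
            (reason): Settled => ({ status: "rejected", reason })
        );
}

// Settle every operator. The aggregate never rejects, because each
// individual failure has already been converted into a result object.
function settleAll(operators: Array<() => unknown>): Promise<Settled[]> {
    return Promise.all(operators.map((operator) => settle(operator)));
}
```

Because every failure becomes a plain value, the aggregate can reuse the same "gather everything" step as the all-style function.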

Implementing asyncSettled() is just slightly more involved than the asyncAll() function. The only difference is that we wrap each parallel iteration in a try/catch so that we can generate the higher-order result:

<cfscript>

    startedAt = getTickCount();

    // The asyncSettled() function executes all of the given functions in parallel and
    // returns a Future that resolves to an array in which each array index holds the
    // non-pending state of the operator that resided at the same array index of the
    // input.
    future = asyncSettled([
        function() {
            sleep( 3000 );
            return( "Foo" );
        },
        function() {
            sleep( 1000 );
            throw( type = "BoomGoesThe dynamite" );
            return( "Bar" );
        },
        function() {
            sleep( 2000 );
            return( "Baz" );
        }
    ]);

    writeOutput( "<h1> Creating An AsyncSettled() Function Using Parallel Threads </h1>" );
    writeDump( future.get() );
    writeOutput( "<p> Completed in #numberFormat( getTickCount() - startedAt )# ms. </p> ");

    // ------------------------------------------------------------------------------- //
    // ------------------------------------------------------------------------------- //

    /**
    * I take an array of operators, execute them asynchronously in parallel, and return a
    * future that resolves to an array of ordered results in which each index reports the
    * non-pending state of the operator. This will be either a "fulfilled" state with a
    * "value"; or a "rejected" state with a "reason".
    *
    * @operators I am the collection of Functions being executed in parallel.
    * @output false
    */
    function asyncSettled( required array operators ) {

        var future = runAsync(
            function() {

                // Internally to the asynchronous runAsync() callback, we can spawn
                // further threads to run our individual operators in parallel. Using
                // Lucee's parallel iteration, this becomes quite easy!
                var results = operators.map(
                    function( operator ) {

                        try {

                            return({
                                status: "fulfilled",
                                value: operator()
                            });

                        } catch ( any error ) {

                            return({
                                status: "rejected",
                                reason: error
                            });

                        }

                    },
                    true // Iterate using parallel threads.
                );

                return( results );

            }
        );

        return( future );

    }

</cfscript>

As you can see, the asyncSettled() function is basically the asyncAll() function with an intermediary try/catch. And, when we run this Lucee CFML page in the browser, we get the following output:

asyncRace() modeled on Promise.race()

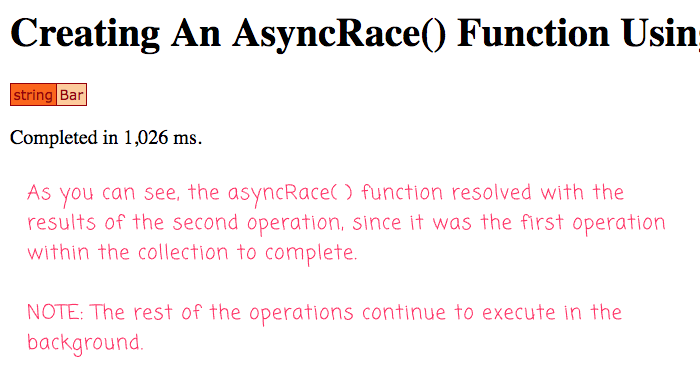

In the world of Promises, the Promise.race() method takes an array of Promise objects and resolves or rejects based on the first outcome within the collection. Unlike the asyncAll() and asyncSettled() functions, which were essentially Future-wrapped calls to Lucee's parallel iteration behavior, creating the asyncRace() function requires a lot more finagling!

While the parallel iteration in Lucee CFML throws errors eagerly, it blocks and waits until all iterations have completed. As such, we can't just use it as the driver since we need to resolve eagerly as well. And, we can't just use a Future, since Lucee CFML doesn't provide a way to programmatically "resolve" or "reject" a pending Future.
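To make the target behavior concrete, here is a minimal TypeScript sketch of race semantics built by hand. Every operator competes to settle one shared Promise, and only the first call to resolve or reject has any effect. This works in JavaScript because a Promise can be settled from the outside through its resolve and reject callbacks, which is the capability a Lucee Future is missing. The `delay()` and `raceAll()` names are hypothetical and exist only for this illustration.

```typescript
// Hypothetical helper: resolve with `value` after `ms` milliseconds.
function delay(ms: number, value: string): Promise<string> {
    return new Promise<string>((resolve) => setTimeout(() => resolve(value), ms));
}

// Start every operator and let the first outcome, success or failure, win.
function raceAll(operators: Array<() => Promise<string>>): Promise<string> {
    return new Promise<string>((resolve, reject) => {
        operators.forEach((operator) => {
            // A Promise settles at most once, so every call after the
            // first one is silently ignored (later errors are swallowed).
            operator().then(resolve, reject);
        });
    });
}
```

The "settles at most once" rule plays the role of a first-writer-wins guard: the losing operators still run to completion, but their results and errors are discarded.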

To implement asyncRace(), we have to use a more deeply-nested combination of runAsync() calls, parallel iteration, and one of Java's Atomic Data Types. Essentially, each asynchronous operation is going to be run inside its own runAsync() callback; and, each operation is going to compete for access to an AtomicReference. Then, a higher-order Future is going to block and wait until the AtomicReference has been set by one of the operations:

<cfscript>

    startedAt = getTickCount();

    // The asyncRace() function executes all of the given functions in parallel and
    // returns a Future that resolves with the results of the first function that
    // completes its execution. The rest of the functions continue to run in the
    // background.
    // --
    // CAUTION: Any errors that are thrown after the first resolution are swallowed.
    future = asyncRace([
        function() {
            sleep( 3000 );
            return( "Foo" );
        },
        function() {
            sleep( 1000 );
            return( "Bar" );
        },
        function() {
            sleep( 2000 );
            return( "Baz" );
        }
    ]);

    writeOutput( "<h1> Creating An AsyncRace() Function Using Parallel Threads </h1>" );
    writeDump( future.get() );
    writeOutput( "<p> Completed in #numberFormat( getTickCount() - startedAt )# ms. </p> ");

    // ------------------------------------------------------------------------------- //
    // ------------------------------------------------------------------------------- //

    /**
    * I take an array of operators, execute them asynchronously in parallel, and return a
    * future that resolves with the results of the first operator that completes. The
    * rest of the operators continue to execute in the background.
    *
    * @operators I am the collection of Functions being executed in parallel.
    * @output false
    */
    function asyncRace( required array operators ) {

        var future = runAsync(
            function() {

                // Internally to the asynchronous runAsync() callback, we're going to use
                // Lucee's native parallel iteration. However, the parallel iteration -
                // as a logical unit - is, itself, a blocking operation. As such, we have
                // to spawn a nested, asynchronous thread in which to run the iteration.
                // Then, from within that nested thread iteration, we're going to reach
                // back into this thread in order to set an ATOMIC REFERENCE. The Atomic
                // reference will contain the Future of the first operator to complete.
                var atomicFuture = createObject( "java", "java.util.concurrent.atomic.AtomicReference" )
                    .init( nullValue() )
                ;

                // Run parallel iteration inside a nested thread so it doesn't block and
                // prevent us from being able to inspect the ATOMIC REFERENCE.
                runAsync(
                    function() {

                        operators.each(
                            function( operator ) {

                                // Using YET ANOTHER ASYNC THREAD, execute the Operator.
                                // The return value of this call is the Future that we
                                // will store in our ATOMIC REFERENCE.
                                // --
                                // NOTE: We are using a Future here, instead of a vanilla
                                // value, so that if the operator throws an error, it will
                                // be captured and reported in the Future.
                                var operatorFuture = runAsync( operator );

                                try {

                                    // Force the operator's Future to block and resolve.
                                    operatorFuture.get();

                                    // If we got this far, the operator Future resolved
                                    // successfully. As such, let's try to store the
                                    // Future into the ATOMIC REFERENCE. Using the
                                    // .compareAndSet() method, we can ensure that only
                                    // the first operator to resolve will "win".
                                    atomicFuture.compareAndSet( nullValue(), operatorFuture );

                                } catch ( any error ) {

                                    // If the operator threw an error, the error will be
                                    // encapsulated within the operator's Future. As
                                    // such, we can "report" the error by simply
                                    // reporting the future. Using the .compareAndSet()
                                    // method, we can ensure that only the first operator
                                    // to resolve will "win" -- all other errors will be
                                    // swallowed by the race-workflow.
                                    atomicFuture.compareAndSet( nullValue(), operatorFuture );

                                }

                            },
                            true // Iterate using parallel threads.
                        );

                    }
                ); // End inner runAsync().

                // At this point, our parallel iteration is, itself, running inside an
                // asynchronous thread. Let's continuously check the ATOMIC REFERENCE
                // until one of the operators completes and stores its Future in the
                // reference value.
                do {
                    var result = atomicFuture.get();
                } while ( isNull( result ) );

                // Once we have our ATOMIC REFERENCE value, let's block and resolve it.
                // Since we are currently inside another runAsync() callback, the results
                // of this Future will get unwrapped (using .get()) and become the
                // results of the parent Future.
                return( result.get() );

            }
        ); // End runAsync().

        return( future );

    }

</cfscript>

As you can see, this implementation is quite a bit more complicated. Each individual operator is used to generate a Future. Then, when the first operator completes - either in success or in error - its Future is bubbled-up to the AtomicReference, which is, in turn, used to resolve the top-level runAsync() callback.

When we run this Lucee CFML page in the browser, we get the following output:

This is some exciting stuff! Using the runAsync() function, in combination with Lucee's parallel iteration (using asynchronous threads), we can really start to build some complex, higher-order functionality. Taking a cue from the JavaScript Promise data-type, we can recreate "all", "settled", and "race" behaviors using Lucee's Future data-type. This allows us to more easily code asynchronous control-flow into the traditionally-blocking world of ColdFusion.

Epilogue on a theoretical asyncAny() function

In the world of Promises, there is both Promise.race() and Promise.any(). While these two methods are similar, they differ in how they handle errors. Like Promise.race(), the Promise.any() method will eagerly resolve with the first successful Promise. However, unlike Promise.race(), Promise.any() doesn't eagerly reject with the first error. Instead, it gathers up all of the errors and then rejects the higher-order Promise with an array of the aggregated errors.
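The any-style behavior can be sketched by hand in TypeScript as follows. This is a simplified illustration, not the native implementation: the native Promise.any() rejects with an AggregateError that carries the individual errors, whereas this sketch rejects with a plain array to keep the idea visible. The `anyOf()` name is hypothetical.

```typescript
// Resolve with the first success; reject only after every operator has
// failed, passing along all of the collected errors in input order.
function anyOf(operators: Array<() => Promise<string>>): Promise<string> {
    return new Promise<string>((resolve, reject) => {
        const errors: unknown[] = [];
        let pending = operators.length;

        // With nothing to wait for, there can be no success.
        if (pending === 0) {
            reject(errors);
            return;
        }

        operators.forEach((operator, index) => {
            operator().then(resolve, (error) => {
                // Record the failure and keep waiting for a success.
                errors[index] = error;
                pending -= 1;
                if (pending === 0) {
                    reject(errors);
                }
            });
        });
    });
}
```

The key difference from the race-style sketch is that a failure does not settle the outer Promise unless it is the last outcome to arrive.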

In Lucee ColdFusion, this is not currently possible to implement because there is no way to reject a Future without an actual error object. And, since we can't "throw" an array of error objects, there's no real way to implement the correct behavior of the higher-order Future.


Reader Comments

Loving the async explorations. I noticed you haven't recorded any videos in a while. Are you done with that? Too much overhead? I personally enjoyed the video walk throughs.

@Chris,

Good question. I have historically done the videos for the JavaScript / CSS stuff, not as much for the back-end stuff. But, that's really just laziness :D I should start doing them for all posts. Because, I agree, it tells a different story and provides different insights (and modes of consumption).

@Ben

That would be great! I don't always have the time to "digest" the words which requires more cognitive load. But I generally always have 5m to get the idea through video. But if we're comparing laziness, I definitely lose because generating all this great content is absolutely far less lazy than any laziness I've displayed in consuming it. Ha. I mean, I'm complaining about having to read? What!? So lame and lazy of me. I appreciate you regardless of the form your content takes. I was just curious :)

Finally got back to "read" this. Couple thoughts...

I wonder why asyncSettled doesn't return status: "resolved" rather than status: "fulfilled"?

You blew my mind with the asyncRace() example! Wow, Ben. Just wow!

@Chris,

Ha ha, yeah, the asyncRace() one is kind of mind-bending. I mean, it has 3 nested runAsync() calls and usage of parallel iteration. So, basically, 4-levels of asynchronous control-flow. I think that one took me two consecutive mornings to get working :D It was a very iterative, trial-and-error process.