CSV To CTE Transformer In Angular 18

When generating reports at work, I make heavy use of common table expressions (CTE) as well as the VALUES / ROW construct to create derived tables in MySQL 8. Thanks to the awesome power of SublimeText multi-cursor functionality, going from CSV (comma separated values) to CTE only takes a minute or two; but, it's a repetitive task that I'm keen to create a utility for (see my blog's utilities section). As such, I wanted to create a rough draft of this utility using Angular 18.

Run this demo in my JavaScript Demos project on GitHub.

View this code in my JavaScript Demos project on GitHub.

To get a high-level sense of what I'm talking about here, I want to take a CSV text payload like this:

ID,EMAIL

1,ben@example.com

2,laura@example.com

3,smith@example.com

And generate a MySQL query that looks like this:

WITH

derived (

id,

email

) AS (

VALUES

ROW ( 1, 'ben@example.com' ),

ROW ( 2, 'laura@example.com' ),

ROW ( 3, 'smith@example.com' )

)

SELECT

d.id,

d.email

FROM

derived d

;

I can then take this base SQL statement and manually add additional INNER JOIN clauses for on-the-fly reporting.

When converting a CSV value to a CTE like the one above, there are three steps involved:

Parse the CSV text into a two-dimensional rows array.

Map the CSV fields onto SQL query columns.

Render the common table expression using the desired mappings.

To get this done, the first thing I did was create a simple CsvParser class (see code at end of post). It's not the most flexible CSV parser; but, it should get the job done for the type of data that I'm usually working with (ie, clean, well formatted data being generated by spreadsheet exports).

Once I had my CSV parser, I was able to build the primary CSV-to-CTE experience. Since every CSV payload is different, I had to include a way to customize the mappings between the two constructs. For this, I'm using Angular's powerful template-based forms module. Template-based forms keep both the logic and the markup simple while also providing for completely dynamic form fields.

In this case, I have to generate several form fields for each field within the CSV payload. Each CSV field needs both a custom name and a flag indicating whether or not to include it in the generated SQL query. I'm representing this data as an array of FieldSetting objects:

type FieldSettings = FieldSetting[];

interface FieldSetting {

fieldIndex: number;

columnName: string;

exampleValue: string;

includeInCte: boolean;

}

The magic of Angular's NgModel directive is that it can bind to object properties (such as those in the FieldSetting instance). As such, I can use the @for control flow to output a dynamic set of form inputs and then bind them to the underlying view-model. It's almost too easy!

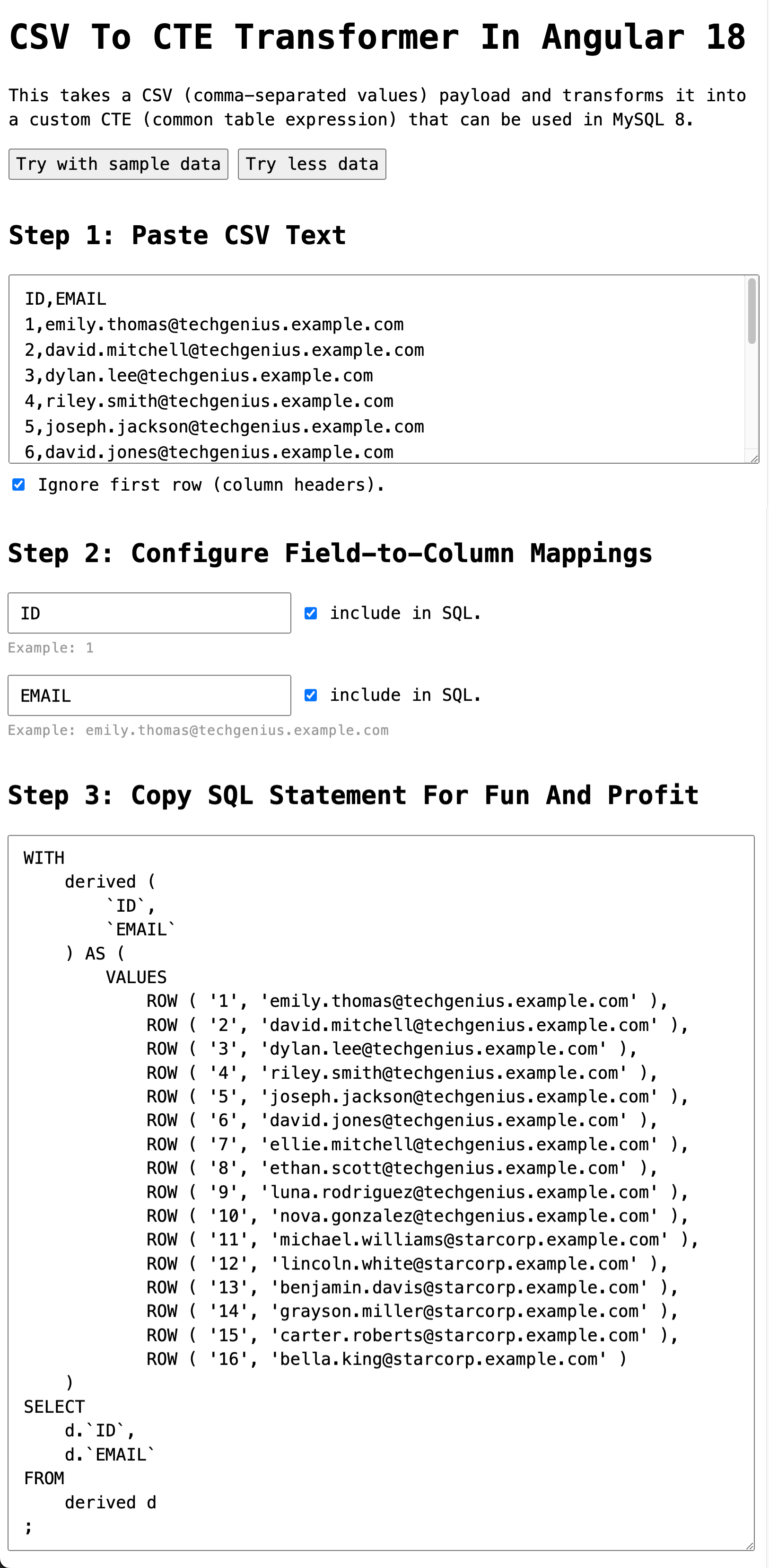

Here's the user interface for my transformer. It's the CSV textarea (input) followed by the dynamic list of field mappings followed by the CTE textarea (output).

<p>

This takes a CSV (comma-separated values) payload and transforms it into a custom CTE

(common table expression) that can be used in MySQL 8.

</p>

<p class="flex-row">

<button

(click)="applySampleTemplate( sampleTemplate1 )"

class="sample">

Try with sample data

</button>

<button

(click)="applySampleTemplate( sampleTemplate2 )"

class="sample">

Try less data

</button>

</p>

<form>

<h2>

Step 1: Paste CSV Text

</h2>

<p class="csv">

<textarea

name="csvInput"

[(ngModel)]="csvInput"

(ngModelChange)="processInput()"

class="csv__input"

></textarea>

<label class="csv__header flex-row">

<input

type="checkbox"

name="ignoreFirstRow"

[(ngModel)]="ignoreFirstRow"

(ngModelChange)="processInput()"

/>

Ignore first row (column headers).

</label>

</p>

<h2>

Step 2: Configure Field-to-Column Mappings

</h2>

@for ( fieldSetting of fieldSettings ; track $index ) {

<p class="field">

<span class="flex-row">

<input

type="text"

name="fieldName{{ $index }}"

[(ngModel)]="fieldSetting.columnName"

(ngModelChange)="renderCte()"

class="field__name"

/>

<label class="flex-row">

<input

type="checkbox"

name="includeField{{ $index }}"

[(ngModel)]="fieldSetting.includeInCte"

(ngModelChange)="renderCte()"

/>

include in SQL.

</label>

</span>

<span class="field__example">

Example: {{ fieldSetting.exampleValue }}

</span>

</p>

}

<h2>

Step 3: Copy SQL Statement For Fun And Profit

</h2>

<p class="cte">

<textarea

name="cteOutput"

[(ngModel)]="cteOutput"

class="cte__output"

></textarea>

</p>

</form>

<!-- Sample CSV data that be loaded into the demo. -->

<template #sampleTemplate1>

... truncated for demo ...

</template>

<template #sampleTemplate2>

... truncated for demo ...

</template>

Just look at how simple NgModel keep the markup. Freaking, Angular, AMIRIGHT?!

At the end of that HTML I have a few <template> elements that provide some sample data. This helped me develop the app; but, it should also make the demo a bit more exciting.

Here's the code-behind for this experience:

// Import vendor modules.

import { Component } from "@angular/core";

import { FormsModule } from "@angular/forms";

import { inject } from "@angular/core";

// Import app modules.

import { CsvParser } from "./csv-parser";

import { CsvRow } from "./csv-parser";

import { CsvRows } from "./csv-parser";

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

type FieldSettings = FieldSetting[];

interface FieldSetting {

fieldIndex: number;

columnName: string;

exampleValue: string;

includeInCte: boolean;

}

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

@Component({

selector: "app-root",

standalone: true,

imports: [

FormsModule

],

styleUrl: "./app.component.less",

templateUrl: "./app.component.html"

})

export class AppComponent {

private csvParser = inject( CsvParser );

public csvInput = "";

public csvRows: CsvRows = [];

public ignoreFirstRow = false;

public fieldSettings: FieldSettings = [];

public cteOutput = "";

// ---

// PUBLIC METHODS.

// ---

/**

* I apply and process the given sample data template reference.

*/

public applySampleTemplate( sampleTemplate: HTMLTemplateElement ) {

this.csvInput = sampleTemplate.content.textContent!.trim();

this.ignoreFirstRow = true;

this.processInput();

}

/**

* I process the CSV input and generate the CTE output.

*/

public processInput() {

this.csvRows = this.csvParser.parse( this.csvInput );

this.fieldSettings = [];

this.cteOutput = "";

// If we have no rows, there's nothing else to process.

if ( ! this.csvRows.length ) {

return;

}

// If we only have one row and we want to ignore it, there's nothing else to

// process.

if ( this.ignoreFirstRow && ( this.csvRows.length === 1 ) ) {

return;

}

// If we ignore the first row, it means that the first row is the field headers.

// We can therefore use the result of the splice() operation to default the names

// of the fields in the SQL statement.

var splicedRow: CsvRow = [];

if ( this.ignoreFirstRow ) {

splicedRow = this.csvRows.splice( 0, 1 )

.at( 0 )!

.map( ( value ) => value.replace( /\s+/g, "" ) )

;

}

// We're going to assume that the row structures are uniform and use the first row

// as a representation of the overall row structure. This will be used to define

// the default field names (giving precedence to the spliced row above).

this.fieldSettings = this.csvRows[ 0 ].map(

( fieldValue, i ) => {

return {

fieldIndex: i,

columnName: ( splicedRow[ i ] || `f${ i + 1 }` ),

exampleValue: fieldValue,

includeInCte: true

};

}

);

this.renderCte();

}

/**

* I render the CTE output based on the current view-model.

*/

public renderCte() {

// Filter down to the fields we want to include in the CTE rendering.

var settings = this.fieldSettings.filter(

( fieldSetting ) => {

return fieldSetting.includeInCte;

}

);

// If none of the field are to be included, there's nothing left to process.

if ( ! settings.length ) {

this.cteOutput = "";

return;

}

this.cteOutput = `

WITH

derived (

${ derivedColumns( settings ) }

) AS (

VALUES

${ derivedRows( settings, this.csvRows ) }

)

SELECT

${ selectColumns( settings ) }

FROM

derived d

;

`;

// Our string template approach added a bunch of extra indentation on each line.

// Let's remove that indentation to create a properly formatted SQL statement.

this.cteOutput = this.cteOutput

.trim()

.replace( /^\t{3}/gm, "" )

;

// Function-local helper methods.

function derivedColumns( settings: FieldSettings ) {

return settings

.map(

( setting ) => {

return `\`${ setting.columnName }\``;

}

)

.join( ",\n " )

;

}

function derivedRows( settings: FieldSettings, rows: CsvRows ) {

return rows

.map(

( row ) => {

return `ROW ( ${ derivedRow( settings, row ) } )`;

}

)

.join( ",\n " )

;

}

function derivedRow( settings: FieldSettings, row: CsvRow ) {

return settings

.map(

( setting ) => {

return `'${ row[ setting.fieldIndex ].replace( /'/g, "''" ) }'`;

}

)

.join( ", " )

;

}

function selectColumns( settings: FieldSettings ) {

return settings

.map(

( setting ) => {

return `d.\`${ setting.columnName }\``;

}

)

.join( ",\n " )

;

}

}

}

If I now run this Angular code and populate the CSV input, I get the following output:

As you can see, the CSV payload has become a CTE SQL query.

As a final note, here's the CSV parser that I created for the demo:

// Import vendor modules.

import { Injectable } from "@angular/core";

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

export type CsvRows = CsvRow[];

export type CsvRow = string[];

// This is the native match that exec() will return.

type ExecMatch = RegExpExecArray | null;

// This is the translated match that we use internally.

type ParserMatch = FieldMatch | null;

// This is the translated match interface.

interface FieldMatch {

value: string;

isQuotedValue: boolean;

isNakedvalue: boolean;

delimiter: string;

isFieldDelimiter: boolean;

isRowDelimiter: boolean;

isEndOfInput: boolean;

}

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

@Injectable({

providedIn: "root"

})

export class CsvParser {

/**

* I parse the given input into a CSV rows result.

*/

public parse( input: string ) : CsvRows {

// Note: This is a judgement call, but stripping off any trailing newlines at the

// end of the input seems to result in a more meaningful match. Otherwise you can

// end up with some empty row at the end depending on the algorithm.

input = input.replace( /[\r\n]+$/, "" );

if ( ! input ) {

return [];

}

var pattern = this.createPattern();

var match: ParserMatch;

var row: CsvRow = [];

var rows: CsvRows = [row];

while ( match = this.transformMatch( pattern.exec( input ) ) ) {

row.push( match.value );

// Because our pattern can match an empty field followed by the end-of-input,

// there's a zero-length match at the end of the input sequence that never

// moves the lastIndex of the exec() function forward. As such, we have to

// explicitly break out of the loop once we've reached the end.

if ( match.isEndOfInput ) {

break;

}

if ( match.isRowDelimiter ) {

row = [];

rows.push( row );

}

}

return rows;

}

// ---

// PRIVATE METHODS.

// ---

/**

* I create the RegExp instance for matching fields and delimiters.

*/

private createPattern() : RegExp {

// Note: The String.raw tag will return the embedded escape sequences (\r\n) as

// string literals instead of interpreting them as escape sequences.

var source = String.raw`

(?:

"([^"]*(?:""[^"]*)*)" # Quoted field value.

|

([^",\r\n]*) # Naked field value.

)

(,|\r\n?|\n|$) # Field delimiter.

`;

// The native RegExp doesn't support the "verbose" flag. As such, let's strip out

// any comments and whitespace in the current source.

source = source

.replace( /# [^\r\n]*/g, " " )

.replace( /\s+/g, "" )

;

return new RegExp( source, "gy" );

}

/**

* I transform the native exec() match into a local parser match.

*/

private transformMatch( match: ExecMatch ) : ParserMatch {

if ( ! match ) {

return null;

}

var value = ( match[ 1 ] || match[ 2 ] || "" );

var isQuotedValue = ( match[ 1 ] !== undefined );

var isNakedvalue = ! isQuotedValue;

var delimiter = match[ 3 ];

var isFieldDelimiter = ( delimiter === "," );

var isRowDelimiter = ! isFieldDelimiter;

var isEndOfInput = ! delimiter;

// If the field value is quoted, canonicalize any embedded quotes.

if ( isQuotedValue ) {

value = value.replace( /""/g, '"' );

}

return {

value,

isQuotedValue,

isNakedvalue,

delimiter,

isFieldDelimiter,

isRowDelimiter,

isEndOfInput

};

}

}

While I've been using regular expressions for ages, I don't think I've ever used the .exec() method in a TypeScript setting before. It was good to see that Angular's error messages pointed me in the right direction (vis-a-vis typing).

Want to use code from this post? Check out the license.

Reader Comments

Very cool! Now show us React --- GO!

@Chris,

Ha ha, the same, only much harder 🤪 the template-based form stuff is something Angular really just nailed from the starting-gate. I think React is only just now starting to embrace the idea of "uncontrolled form inputs" and two-way data binding (they've always allowed for uncontrolled inputs; but, I think I heard that they are starting to realize that it just makes form interactions so much easier ... but, I could be talking out my behind).

It would be curious to see this in React - I wonder if I can just paste it into Chat GPT.

Great stuff. Ben.

Now I am guessing this:

Is a new Angular 16+ feature that allows us to inject stuff without using a constructor. This is a really great little feature.

Oooo. Ooo. And I also see you are using a box of bananas 📦 🍌

Don't you just love the flexibility of bi-directional data flow that React cannot match! ☺️

And a few questions about the MySQL stuff.

What exactly is a derived table?

I don't think I have ever used one of these?

Are they like a temporary table?

I presume the

Row()function is similar to a normal tableINSERT?Is this a MySQL 8 feature?

OK. I am beginning to think that this is just a plain old sub query.

For one moment, I thought that

derived()was some new funky MySQL 8 method.I've just done a Google on it and all I can find for derived is sub query.

It's just that

ROW()method threw me a bit, along with theWithkeyword.Ben.

It would be really great if you could do a mini tutorial based on the MySQL, at the top of this article.

I am trying to get my head around how it all works and what the

ROW()method actually does.I have done some Googling on

ROW()and there isn't much out there.The only thing that I have gleaned so far, is that it compares different sub queries with two or more columns?

@Charles,

Yes, the

inject( CsvParser )stuff is Angular's ability to do dependency-injection without having to do constructor injection. Though, I think that's actually what it is doing under the hood during the compilation of the code. You can only useinject()in "constructor contexts" for this reason, I assume.As far as the SQL stuff, you are correct - the common table expression (CTE) is really just storing a sub-query into a variable. In this case, I'm using the

VALUES/ROWScombination, but you can just as easily use aSELECT:Note that with the

SELECTI don't have to define the column names since that's handled by theSELECTitself. In the example in my blog post, since there's noSELECT, I have to be more explicit about the column names.And yes, there's nothing special about the term

derived- that's just the variable name I used. I could have used:WITH temp ASorWITH users AS- I only usederivedbecause that's what I'm used to using.As for the

ROWstuff, yeah, I think that was introduced in MySQL 8 as well. I did a closer look at that previously if you're curious:www.bennadel.com/blog/4626-using-values-row-to-create-derived-table-from-static-values-in-mysql-8-0-19.htm

It's basically just a way to map values onto columns in the derived table. So, stead of having to use a bunch of

UNION ALLcalls to construct a table:... you can just use

ROW()to define each row. Once you start using it, it's quite a nice developer experience.And YES, the two-data binding is AMAZING! 🎉

@Ben Nadel,

Thanks for this explanation.

So, just to clarify, I presume the reason you didn't use:

Is because you weren't building a derived table from an existing table.

In your example, you were building the derived table from scratch?

Now I was reading that the difference between CTE and a sub query is that CTE can deal with recursion.

Do you have any more detail on the recursion part? I am not entirely sure what recursion is referring to, in this context?

@Charles,

Exactly right. Since I wasn't using a sub-query, but rather constructing actual rows, something somewhere has to define what the column names are called. And, in this particular demo, the user can customize each field name via the Angular view.

As far as recursion, I looked it up one time but I don't think I've ever tried it out. I think the canonical example is usually something like a file/folder tree where there is a hierarchy across the queries. But, I've never actually put something like that into practice at the DB level (normally I would pull more records back and then perform the hierarchy calculations in the application code).

@Ben Nadel,

Cheers for comfirmation.

I managed to find this simple example, which has helped me understand the basics:

@Charles Robertson,

This breaks my brain a little. Thanks for this example! Good stuff

@Chris G,

Yes. I'm not looking forward to a more complex example! ☺️🤯

Yeah, that looks bananas 😜 I don't think I'll ever use something like that in my app DB (feels like something that should be in the app code itself); but, you've inspired me to at least look at it little more closely.

Over on LinkedIn, Gurpreet asked for an example of where I might use this technique. I've been using it a ton lately as we start to send out emails about the shut-down of our system. Let me provide some context:

I have an account representative who needs to communicate with our enterprise clients about when their enterprise contracts are ending. These emails are all being sent out through a specialized workflow which looks at an "email verification" table before sending. Since we have to keep our bounce rate low, we don't want to risk send emails to people whose email address may no longer be valid.

So, when my account rep comes to be and says she needs to send out an email to a given set of enterprises, she'll give me the list of emails that she wants to target. Then, before we do any sending, I'll compare her list to the email validation cache to see which emails haven't been validated (ie, don't have a corresponding record in the DB yet) and which will be skipped due to current validation.

The query for this looks something like this:

Then, I'll take those results and put them in a Google sheet for the account rep to review. For users that don't have any validation record (

c.textCode IS NULL), she'll run those emails through a validation service like NeverBounce or Email List Verify (and we'll update the internal cache with the new results). For companies that have too many skipped users, she'll do some research to see if there are other emails in the company that we can include in the mail-serve.It's these kind of out-of-app, manually-orchestrated queries that are made much easier from the CTE technique.

@Ben Nadel,

That's interesting, but it seems to me that CTEs are used mainly for readability. Because there doesn't seem to be much difference between a sub query & a CTE, unless you use the

RECURSIVEkeyword? Or unless you create a temporary table from scratch, as you have in the example above. 🤔@Kevin is AI - lol

@Chris G,

I honestly couldn't tell, until I read it a second time 🤭

Yeah, I just deleted the comments. I wasn't sure at first, but the second comment (first was on a different post) confirmed.

@Ben Nadel,

I wonder what the point of an AI comment was. Serves no purpose I can imagine 🤔

As a quick aside, I've now added this feature to my utils section:

www.bennadel.com/utils/

@Ben Nadel,

Good stuff! First time I'm seeing this page. Is it a hidden URL, or is it hooked into the site's navigation somewhere? If it is, I couldn't find it.

@Chris,

I link to it in the site footer. I only just added this page a few weeks ago. It was just something I've been thinking about doing for a while. It's mostly for my own usage; but, I figured I'd make it public for anyone else that might find it helpful.

@Ben Nadel,

Ah, I see it now! Thanks for pointing that out... Wasn't sure if I needed to bookmark it to find it again. I appreciate you, all that you do, and all that you share. 🙏

You are very kind, good sir :) If you have any suggestions for utilities, let me know. I'm just keeping notes as I think to myself, "man, I should build something for XYZ."

Post A Comment — ❤️ I'd Love To Hear From You! ❤️

Post a Comment →