Using Range Offsets To Power Weighted Distributions In ColdFusion

A few years ago, I looked at using arrays to power weighted distributions in ColdFusion. Using arrays is nice because they create a great visualization of the distributed values. But, creating and populating an array is a lot of overhead. As such, I wanted to revisit the idea of a weighted distribution of values; but, instead of populating an array, I'm just using calculated offsets.

Consider the following values with the given weighted distribution:

Awith 50% distribution.Bwith 30% distribution.Cwith 20% distribution.

If I were to create an array and populate it with values, the array literal would look like this:

<cfscript>

values = [

"A", "A", "A", ... // repeated 50 times.

"B", "B", "B", ... // repeated 30 times.

"C", "C", "C", ... // repeated 20 times.

];

</cfscript>

Then, randomly choosing indexes between 1 and 100 in the generated values array would, on balance, result in values with the desired weighted distribution.

But, to think about this from an index perspective and not a value perspective, we can consider our weighted distribution as being represented by a range of indices within the total 1...100 range:

Ais indices1...50.Bis indices51...80.Cis indices81...100

Given this perspective, we can select a random value based on the index alone:

- Choose a random value (

n) between1...100. - If

nin range1...50, chooseA. - If

nin range51...80, chooseB. - If

nin range81...100, chooseC.

Of course, the set of options isn't going to be static. As such, we need an algorithm that can handle any number of values with any (integer) weight. So, let's think more abstractly about our index-based approach.

Instead of thinking about each values as represented by an absolute range, we can think about relative ranges. Assuming that we have an iteration variable, i, which is 1, we can redefine the ranges as:

Ais indicesi...(i+50-1).Bis indices(i+50)...(i+50+30-1).Cis indices(i+50+30)...(i+50+30+20-1).

Essentially, the cap-indices of each range can be determined if we know the end of the previous range and the weight of the current range. Which means, all we have to do is iterate over the options, keeping track of the from and to offsets, and then testing the calculated range against against the randomly-selected index.

To demonstrate, I'm going to randomly select 100,000 values from a set of options with a weighted distribution. The index-checking logic is encapsulated in the user defined function (UDF), getWeightedValue(options). You'll see that it uses a for-in loop to iterate over the options; and, for each option, calculates an inclusive from/to range:

<cfscript>

// Each of these values should be randomly selected using the given weight. Meaning,

// on balance, a value with a weight of "20" should be expected to be randomly

// selected 20% of the time.

options = [

{ value: "a", weight: 10 },

{ value: "b", weight: 20 },

{ value: "c", weight: 40 },

{ value: "d", weight: 10 },

{ value: "e", weight: 17 },

{ value: "f", weight: 3 }

];

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

// Note: As we select more values, our actual distribution starts to approach the

// original weighting. If we only have a few selections, the weight can get obscured

// by the randomness.

total = 100000;

tally = { a: 0, b: 0, c: 0, d: 0, e: 0, f: 0 };

selectedValues = [];

// Select many random values and record how often each value shows up.

loop times = total {

value = getWeightedValue( options );

selectedValues.append( value );

tally[ value ]++;

}

// Output the values and their tally.

dump( tally );

echo( "<p>" & selectedValues.toList( " " ) & "</p>" );

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

/**

* I return a random value using a weighted distribution of likelihood of selection.

*/

public string function getWeightedValue( options ) {

var randomIndex = randRange( 1, 100, "sha1prng" );

// Each option is going to be represented as a sub-range within the 1...100 range,

// inclusive. However, since each individual option only knows its own weight -

// not the weight with respect to the sub-range - we need to WALK over the from/to

// indicies and map each option to a sub-range, starting with 1.

var fromInclusive = 1;

var toInclusive = 1;

for ( var option in options ) {

toInclusive = ( fromInclusive + option.weight - 1 );

if (

( randomIndex >= fromInclusive ) &&

( randomIndex <= toInclusive )

) {

return option.value;

}

// If the current option didn't match, move the FROM index just past the

// current TO index in order to prepare for the next loop iteration.

fromInclusive = ( toInclusive + 1 );

}

throw(

type = "UnexpectedWeightedDistribution",

message = "Weights do not total to 100."

);

}

</cfscript>

Now, if we run this Lucee CFML code, we get the following output:

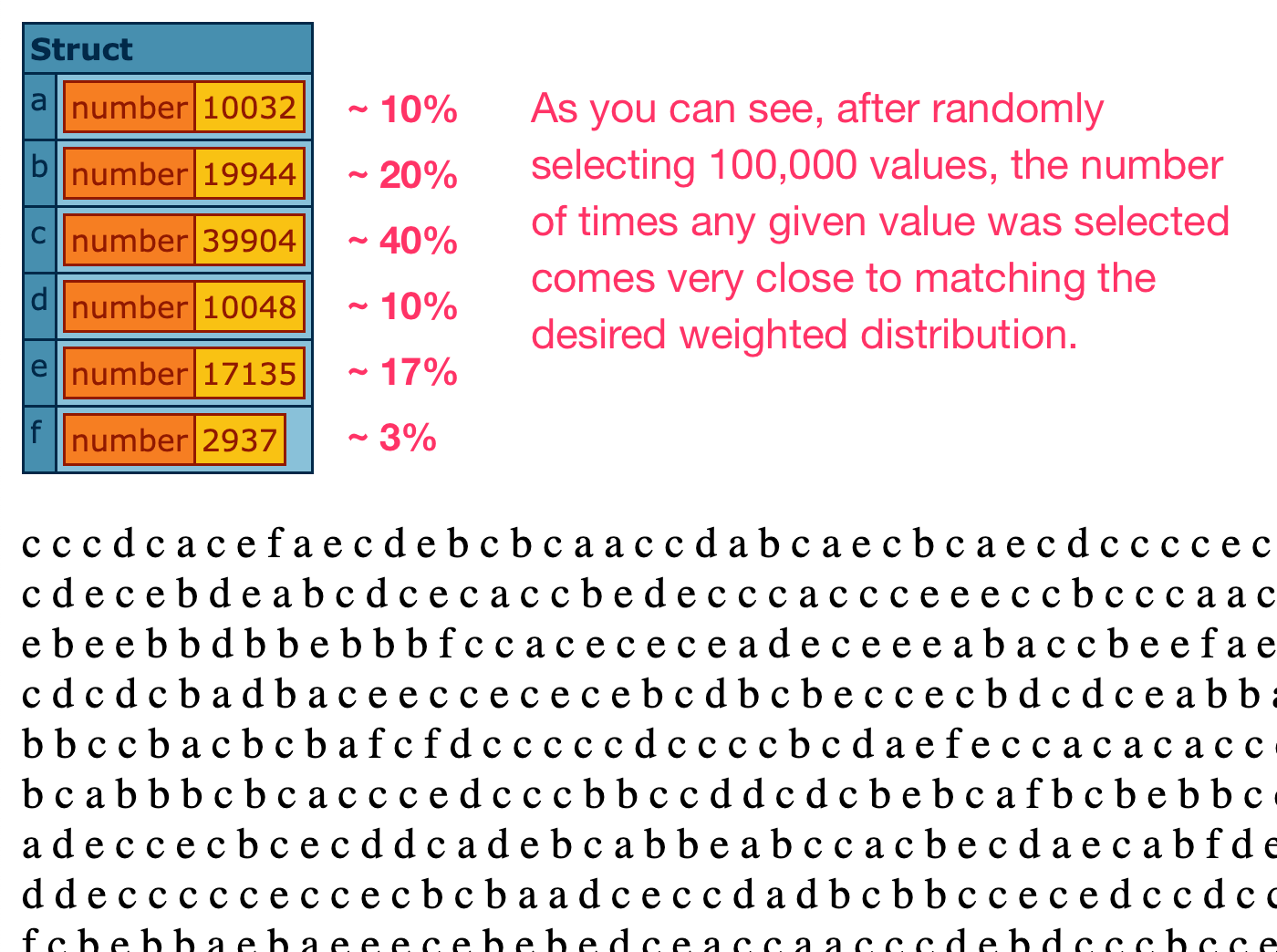

Out of 100,000 random value selections, we ended up with:

Aselected about 10% of the time (10,032).Bselected about 20% of the time (19,944).Cselected about 40% of the time (39,904).Dselected about 10% of the time (10,048).Eselected about 17% of the time (17,135).Fselected about 3% of the time (2,937).

As you can see, we were able to match the desired weighted distribution; and, to do so without having to allocate and populate an array. This should be a much lighter-weight operation.

Using Proportional Weights

In the comments below, Raymond Camden asked a great question. Instead of thinking about the weights as having to sum to a total of 100, can we instead think about the weights as being simply proportional. Meaning, can we define the options as such:

Awith weight6.Bwith weight3.Cwith weight1.

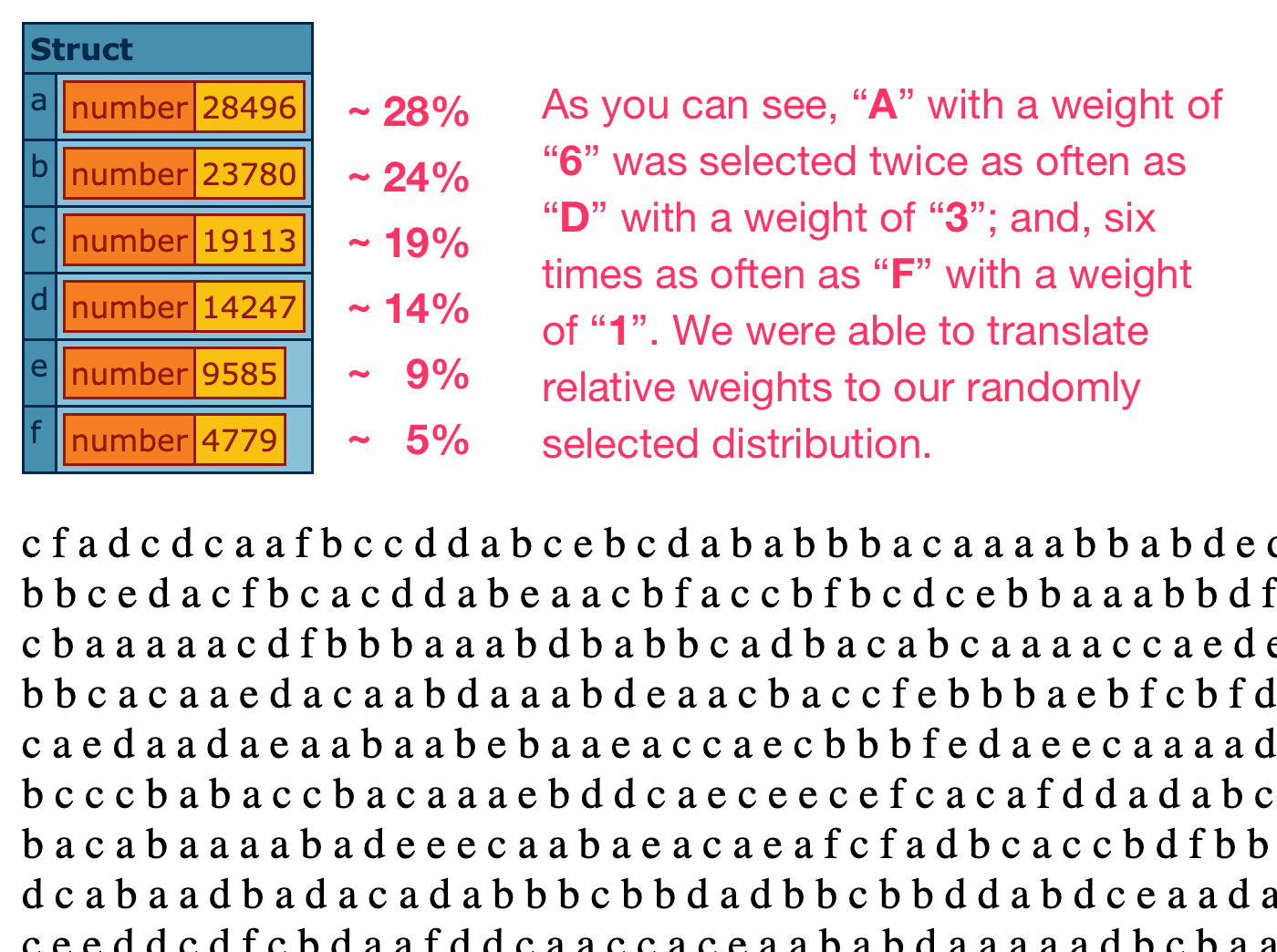

In this case, we aren't trying to define a total but rather a relative distribution of options. In this configuration, A with a weight of 6 should expect to be randomly selected twice as often as B with a weight of 3; and six times as often as C with a weight of 1.

In the code that I outlined above, the only piece of logic that actually requires the weights to total 100 is the line that selects the random index. If we replace the 100 with something akin to SUM(weight), everything else should naturally work.

Here is a modification of the previous demo, this time using proportional weights:

<cfscript>

// Each of these values should be randomly selected using the given PROPORTIONAL

// weight. Meaning, on balance, a value with a weight of "6" should expect to get

// randomly selected TWICE as often as a value with weight "3".

options = [

{ value: "a", weight: 6 }, // 6 / 21 ~ 28%

{ value: "b", weight: 5 }, // 5 / 21 ~ 24%

{ value: "c", weight: 4 }, // 4 / 21 ~ 19%

{ value: "d", weight: 3 }, // 3 / 21 ~ 14%

{ value: "e", weight: 2 }, // 2 / 21 ~ 9%

{ value: "f", weight: 1 } // 1 / 21 ~ 5%

];

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

// Note: As we select more values, our actual distribution starts to approach the

// original weighting. If we only have a few selections, the weights can get obscured

// by the randomness.

total = 100000;

tally = { a: 0, b: 0, c: 0, d: 0, e: 0, f: 0 };

selectedValues = [];

// Select many random values and record how often each value shows up.

loop times = total {

value = getWeightedValue( options );

selectedValues.append( value );

tally[ value ]++;

}

// Output the values and their tally.

dump( tally );

echo( "<p>" & selectedValues.toList( " " ) & "</p>" );

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

/**

* I return a random value using a weighted distribution of likelihood of selection.

*/

public string function getWeightedValue( options ) {

// Instead of assuming that the weights add up to 100, we're going to PLUCK and

// SUM the proportional weights to find our upper-bound index.

var totalWeight = options

.map( ( option ) => option.weight )

.sum()

;

var randomIndex = randRange( 1, totalWeight, "sha1prng" );

// Each option is going to be represented as a sub-range within the 1...TOTAL

// range, inclusive. However, since each individual option only knows its own

// weight - not the weight with respect to the sub-range - we need to WALK over

// the from/to indicies and map each option to a sub-range, starting with 1.

var fromInclusive = 1;

var toInclusive = 1;

for ( var option in options ) {

toInclusive = ( fromInclusive + option.weight - 1 );

if (

( randomIndex >= fromInclusive ) &&

( randomIndex <= toInclusive )

) {

return option.value;

}

// If the current option didn't match, move the FROM index just past the

// current TO index in order to prepare for the next loop iteration.

fromInclusive = ( toInclusive + 1 );

}

}

</cfscript>

We're still selecting 100,000 items and keeping track how often each value is selected. And, when we run this version of the ColdFusion code, we get the following output:

As you can see, it worked quite well—each option was selected with an occurrence that was proportional to the configured weight. And, in fact, A with a weight of 6 was selected twice as often as D with a weight of 3.

Want to use code from this post? Check out the license.

Reader Comments

I swear I did something similar to this a LONG time ago in a little 'ad banner' open source thing I built... Harlan I think I called it. One interesting nit - how would you handle letting a person define their weights and NOT require them to equal 100? So I may want:

And then your code should basically just do the math to make it work with what you showed above.

@Raymond,

Oh, that's a great question! So, looking back at the logic, the only place in which

100is actually coded into the logic is in the line:So, I think all that would actually need to change would be to replace the hard-coded

100with something that sums the weights:Of course, I'm putting the sum in-place to be illustrative; but, one could easily wrap that up in a function.

Yeah, there's no reason this has to sum to 100 -- and, could be a much better UX if it doesn't have to - more flexible, less prone to jank.

Great question!

Inspired by Ray's comment, I update the blog post to include a section on using proportional weights instead of weights that total 100. The demo is almost exactly the same; only, I have to calculate the total weights first:

The rest of the code works exactly the same.

Awesome :)

Post A Comment — ❤️ I'd Love To Hear From You! ❤️

Post a Comment →