Using LATERAL Joins To Get "Top N" Records From Each Group In MySQL 8.0.14

One type of SQL query that has been particularly hard, historically, is getting the "Top N" rows for each group in a MySQL aggregation. When MySQL added LATERAL joins in 8.0.14, however, they opened the door for some simple but rather powerful query techniques. A few months ago, I looked at using LATERAL joins to gather row-specific aggregates. In that post, I used traditional aggregate functions like COUNT() and MAX(). But, as I recently demonstrated, MySQL's JSON aggregates (5.7.22+) can be nested for some pretty exciting outcomes. In this post, I want to look at combining LATERAL joins with JSON aggregates to read the "Top N" rows from a GROUP BY query.

To demonstrate this, in the context of this blog, I'm going to craft a SQL query that gets the oldest 10 members; and, for each member, I want to get the newest 5 comments. Getting the oldest 10 members is simple and has been possible in SQL since the dawn of time:

SELECT

m.id,

m.name

FROM

member m

WHERE

m.id <= 10

In this case, I'm leaning on the fact that my id is an AUTO_INCREMENT column. Which means, the first 10 id values in the table represent the oldest members in the table.

ASIDE: In a simple query like this, I could have done

ORDER BY m.id DSEC LIMIT 10to truly get the oldest 10 members. But, using this technique blows up when you start joining to other tables.

Now, I'm going to add a LATERAL derived join table that will get the most recent 5 comments for each member. Since the LATERAL sub-query is executed for each row of the outer table, it means that our LATERAL SQL can reference columns in the outer row. In this case, we're going to match on m.id:

SELECT

m.id,

m.name

FROM

member m

-- By using a LATERAL JOIN (MySQL 8+), we can create a derived table PER EACH ROW of the

-- outer table. This per-row derived table is made available within the SELECT clause.

INNER JOIN LATERAL

(

-- Since this lateral join derived table is being calculated per row, we can

-- easily grab the "TOP N" corresponding records (using LIMIT) for each row in the

-- outer table.

SELECT

c.id,

c.createdAt

FROM

blog_comment c

WHERE

c.memberID = m.id -- Per-row join condition.

ORDER BY

c.id DESC

LIMIT

5

) AS recent

WHERE

m.id <= 10

As you can see here, the LATERAL join allows us to use a per-row join condition:

WHERE c.memberID = m.id

... which, in turn, allows us to gather unique blog_comment records for each of the member records.

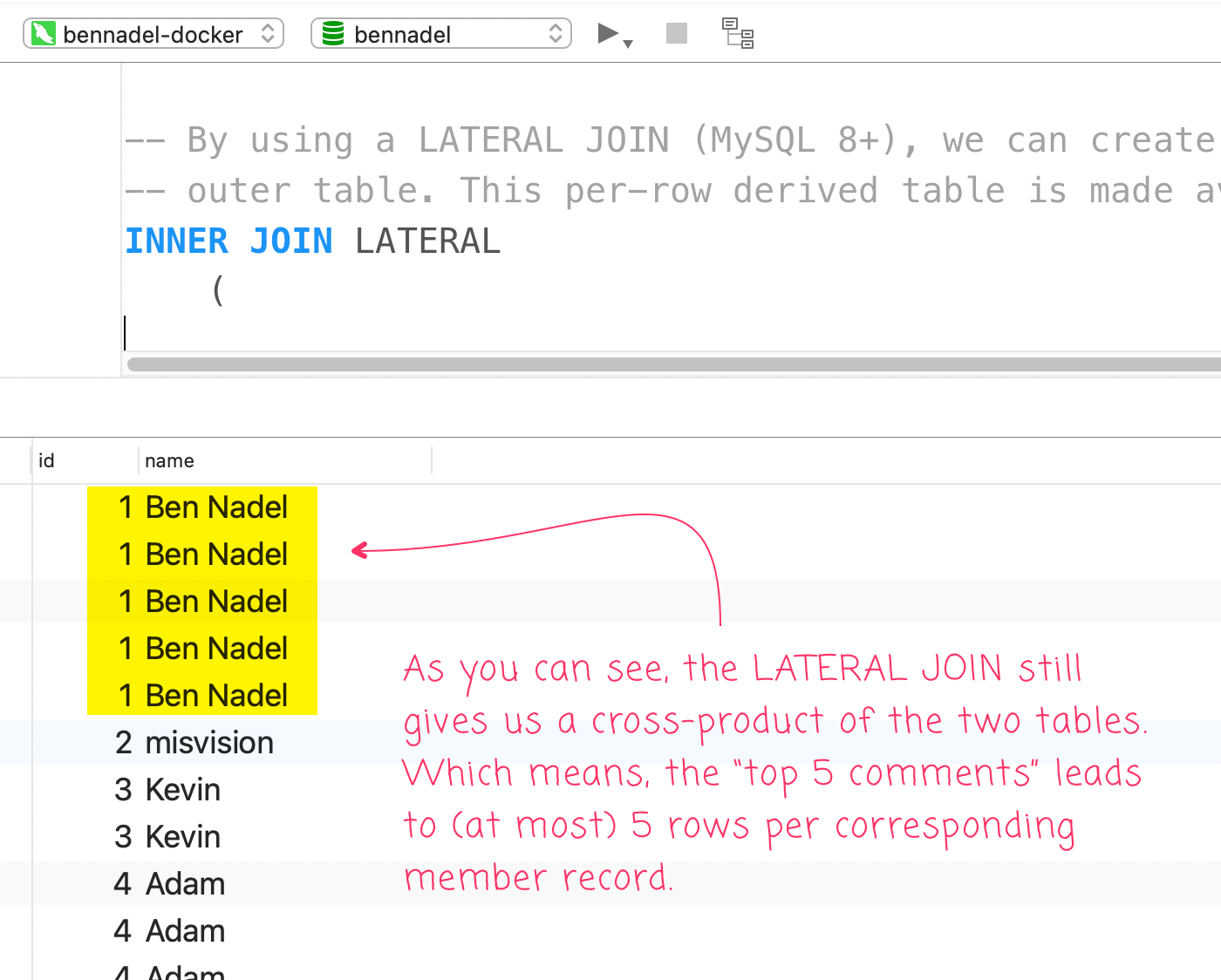

Of course, this INNER JOIN LATERAL still works like any other INNER JOIN which means that the result of this SQL query is a cross product of the two tables. This gives us way more rows that we wanted:

As you can see, we end up with "Ben Nadel" 5 times in this resultset since we get the cross product of each member row and the (at most) 5 comments that each member has left on the blog.

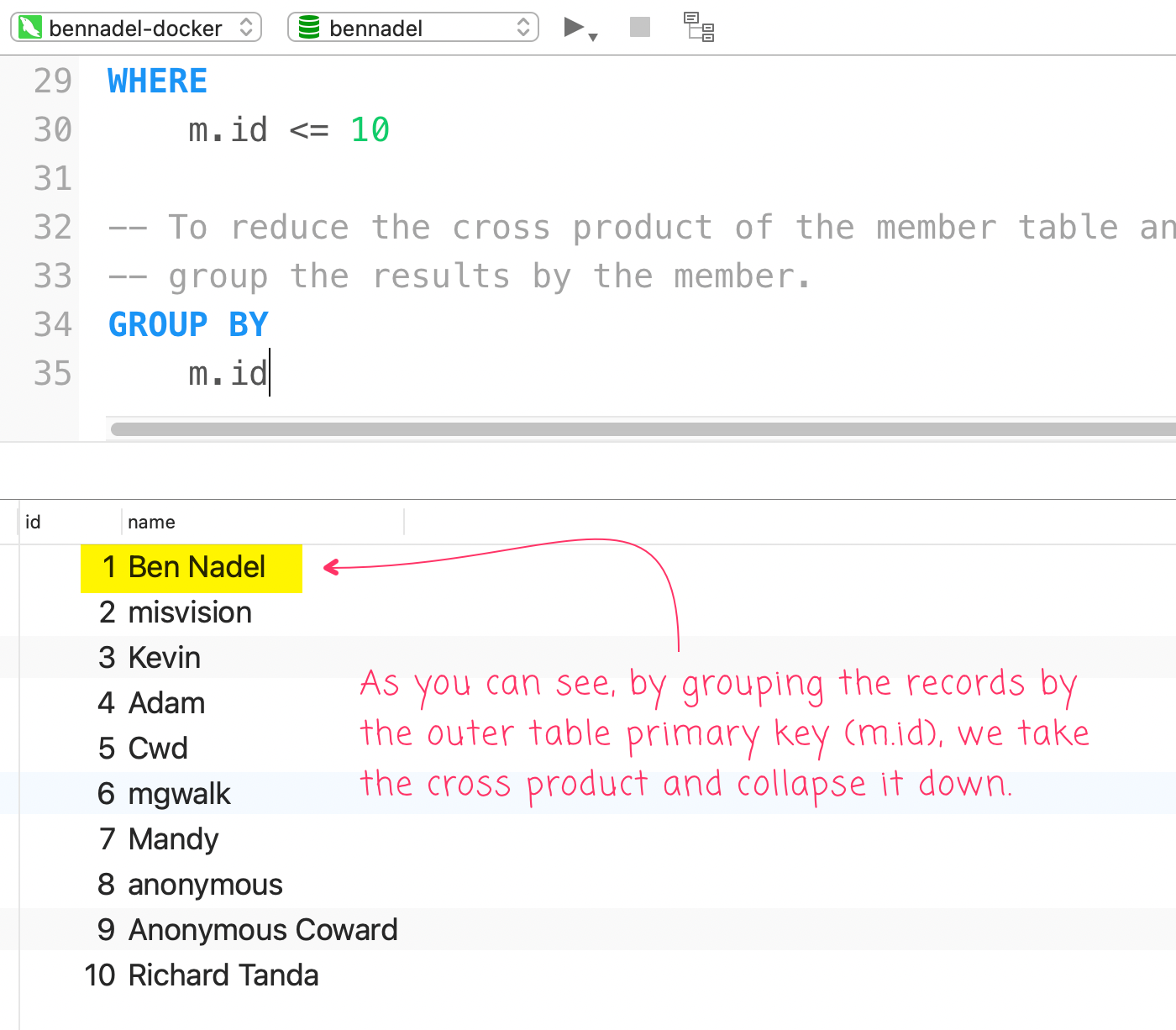

To fix this, we can now GROUP BY the outer row. In some SQL engines, your GROUP BY clause has to contain all the columns that you want to group. However, one of the very nice things in the MySQL-specific syntax is that you only need to include just one of the columns and MySQL figures it out. In this case, we we are going to group by the member id:

SELECT

m.id,

m.name

FROM

member m

-- By using a LATERAL JOIN (MySQL 8+), we can create a derived table PER EACH ROW of the

-- outer table. This per-row derived table is made available within the SELECT clause.

INNER JOIN LATERAL

(

-- Since this lateral join derived table is being calculated per row, we can

-- easily grab the "TOP N" corresponding records (using LIMIT) for each row in the

-- outer table.

SELECT

c.id,

c.createdAt

FROM

blog_comment c

WHERE

c.memberID = m.id -- Per-row join condition.

ORDER BY

c.id DESC

LIMIT

5

) AS recent

WHERE

m.id <= 10

-- To reduce the cross product of the member table and the blog_comment table, we can

-- group the results by the member.

GROUP BY

m.id

Now, when we run this, with the GROUP BY m.id, we get the following MySQL results:

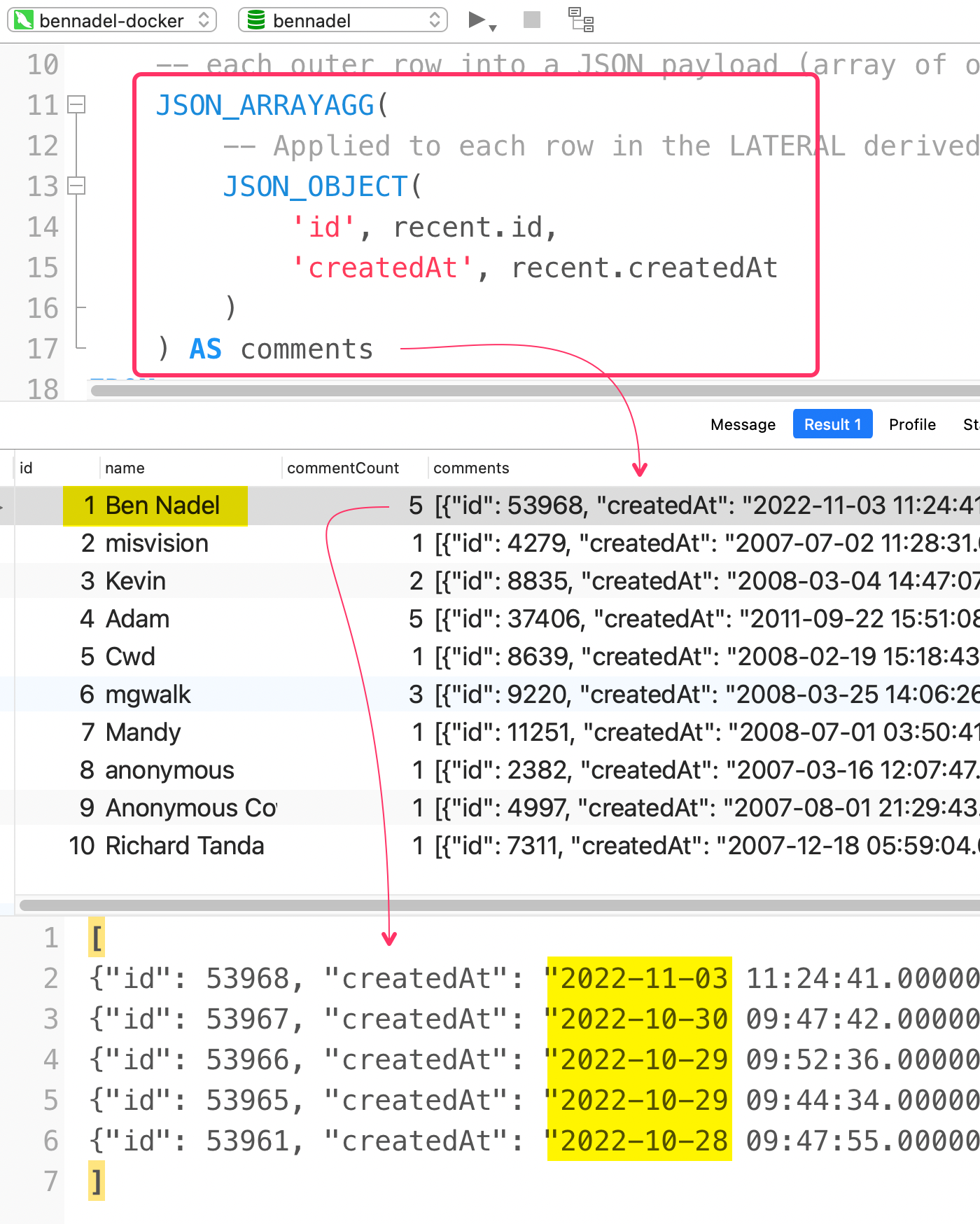

As you can see, we're back down to a single record per member. The "Top N" comments that we generated in our LATERAL join have been collapsed. In order to extract the comment information, we're going to use MySQL's JSON aggregate functions. The JSON_ARRAYAGG() function allows us to compose grouped expressions into a JSON array. And, the JSON_OBJECT() function allows us to aggregate row columns as the expression being composed into the JSON array:

JSON_ARRAYAGG(

-- Applied to each row in the LATERAL derived table.

JSON_OBJECT(

'id', recent.id,

'createdAt', recent.createdAt

)

) AS comments

Here, the JSON_OBJECT() function is being applied to each row in the grouping. Meaning, we're looking at each blog_comment row in the LATERAL derived table and we're constructing a JSON object. Then, the JSON_ARRAYAGG() function takes those JSON objects and composes them into a single array per member record.

The whole query looks like this:

SELECT

m.id,

m.name,

-- Since we performed a GROUP BY on the outer table, our LATER JOIN derived table is

-- now available for aggregation.

COUNT( * ) AS commentCount,

-- Using the JSON functions (MySQL 5.7.22+), we can collapse the "TOP N" rows for

-- each outer row into a JSON payload (array of objects).

JSON_ARRAYAGG(

-- Applied to each row in the LATERAL derived table.

JSON_OBJECT(

'id', recent.id,

'createdAt', recent.createdAt

)

) AS comments

FROM

member m

-- By using a LATERAL JOIN (MySQL 8+), we can create a derived table PER EACH ROW of the

-- outer table. This per-row derived table is made available within the SELECT clause.

INNER JOIN LATERAL

(

-- Since this lateral join derived table is being calculated per row, we can

-- easily grab the "TOP N" corresponding records (using LIMIT) for each row in the

-- outer table.

SELECT

c.id,

c.createdAt

FROM

blog_comment c

WHERE

c.memberID = m.id -- Per row join condition.

ORDER BY

c.id DESC

LIMIT

5

) AS recent

WHERE

m.id <= 10

GROUP BY

m.id

;

And, when we run this in MySQL 8.0.14+, we get the following output:

As you can see, the JSON_ARRAYAGG() and JSON_OBJECT() functions have taken the collapsed / grouped LATERAL join records and exposed them as a JSON payload in the MySQL recordset. This has allowed us to get the "Top N" comments for each member in the group. Of course, in your application code you'll have to deserialize the JSON payload to get the comments as an array; however, that should be a capability natively built into your application runtime.

Isn't SQL just thrilling! Every now and then, I'll hear someone diminish SQL as something that people "have" to use. Forget that! I love SQL. And, I love that the SQL engines are constantly adding new and groovy functionality.

Performance Considerations

You may be looking at this SQL and thinking to yourself, "That can't be fast!" And, it's likely not as fast as some less complex queries. But, if we run an EXPLAIN on the SQL query we can see that it is using the PRIMARY key index for the member table and the IX_by_member index on the blog_comment table. Yes, it has to do some filesort operations as well as something called Rematerialize (which I've never seen before); but, I think you'll find that this is actually quite optimized.

Of course, with all things, you just have to test it in your environment with your particular volume of data and index configurations.

Want to use code from this post? Check out the license.

Reader Comments

This is so cool 😎

@Chris,

SQL got some pretty nifty stuff in it! I really love the idea of the

LATERALjoin; though, I haven't really used it in a production setting yet. Most of the stuff on this blog is pretty straightforward (under the hood).As regards any 'speed' issues...

The alternative to doing it in one query (and the way most devs would approach it) would one db call to get the members and a 10x loop to get the 5 top messages. That's 11 times the number of (admittedly simpler) query parsing/optimisations and 11 lots of network latency. That one query is going to have to optimise pretty badly...

You're right about people diminishing SQL as something that people "have" to use (or treating a db as just a 'dumb bucket' for data). I don't think it's a coincidence that nearly every time I come across a non-performant application, it's sub-optimal db use that's the main culprit.

@Ian,

You touched a nerve with me 🤣 I think it was on the Shop Talk podcast, or maybe it was CodePen Radio - on one of them, they were talking about converting code from Ruby on Rails over to Golang. It was some migration code that they were changing. Anyway, months ago, when this first came up, they were saying how a process that ran in Ruby took like 3-days to complete. And then when they rewrote it in Golang, it took like 15-minutes or something. And, at the time, they used that as evidence to say how much better the language was.

Anyway, months later, in a different conversation, they talked about how after it was all said-and-done, they realize that the big change in the code conversion was a change to the way they were doing the database queries. That is was the DB interactions that were making the Ruby code so slow... not Ruby itself.

Of course, cut to me basically yelling into the void that of course it was the database!! 😂

Post A Comment — ❤️ I'd Love To Hear From You! ❤️

Post a Comment →