Splitting An Array Into Groups In ColdFusion 2021

The other day, I was dealing with a long list of IDs that I needed to use in a SQL query. In fact, the list was so long that the generated SQL statement was exceeding the max packet size property of the MySQL server. As such, I had to split the list of IDs into groups and perform the SQL query in chucks. I was surprised to see that our Utilities.cfc at work didn't already have a user defined function (UDF) for this. As such, I wrote a function that splits an array into groups of a given max-length in ColdFusion.

The logic in this function is fairly simple: I'm using an old-school for-loop to iterate over the given collection is "group size" steps. Then, I'm just slicing-out the desired values. Really, the only complexity here at all is the fact that the Slice function in ColdFusion is NOT SAFE. As such, I have to take care when dealing with the end-bound of the array.

<cfscript>

values = [ "a", "b", "c", "d", "e", "f", "g", "h", "i", "j", "k" ];

writeDump( splitArrayIntoGroups( values, 5 ) );

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

/**

* I split the given collection into separate arrays, each of which has the given max

* length.

*/

public array function splitArrayIntoGroups(

required array collection,

required numeric maxLength

) {

var collectionLength = collection.len();

var groups = [];

for ( var i = 1 ; i <= collectionLength ; i += maxLength ) {

// CAUTION: The slice() method is NOT SAFE, and will throw an error if we go

// out-of-bounds on the given collection. As such, we have to make sure that

// our group-length argument never goes past the last index.

var groupLength = min( maxLength, ( collectionLength - i + 1 ) );

groups.append( collection.slice( i, groupLength ) );

}

return( groups );

}

</cfscript>

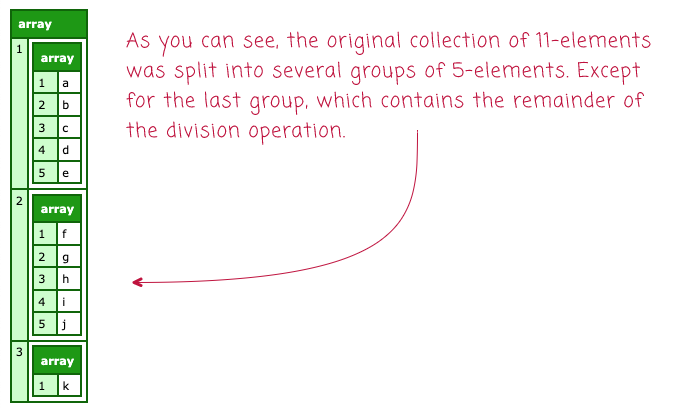

Here, we're splitting the collection of 11 letters into groups of 5. And, when we run this ColdFusion code, we get the following output:

As you can see, the collection of 11-letters was split into 3 groups: 2 of max-length 5 and then 1 that contained the remaining 1-element.

I really wish that the arraySlice() method in ColdFusion was safe. Having to worry about going out-of-bounds is quite a pain. A few years ago, I wrote an arraySliceSafe() function, and then demonstrated how many other Array-functions would become trivial to write.

Anyway, just a fun little weekend code kata. ColdFusion code is easy like a Sunday morning.

Want to use code from this post? Check out the license.

Reader Comments

We've probably all written utility functions like this. Super helpful. The most challenging part (for me) is naming these functions. Yours is named very well. I think I called mine

chunk()LOL 🤣@Chris,

You can't go wrong with "chunk" 😆 Honestly, I use "chunk" and "group" interchangeably in my code all the time.

In this post, I mentioned that I needed this "split array" concept in order to get around some issues that I was running into with a large SQL statement. As a quick follow-up, I wrote about that here:

www.bennadel.com/blog/4238-avoiding-mysql-max-allowed-packet-errors-by-splitting-up-large-aggregation-queries-in-coldfusion.htm

Basically, I was generating a

WHERE IN()clause that was too large for the MySQL server. So, I had to take the list of IDs, break it up into chunks, and then divide-and-conquer the queries.I too have written a chunk function and my first implementation used

slice. On large arrays (100k+ elements), response time would be measured in seconds so I had to go back to the drawing board.@Robert,

Curious to know what kind of a solution you ended-up with? Just a totally different approach altogether, like making just gabbing "first-N" elements instead of splitting the entire array?

While not very pretty, it is fast.

@Robert,

Ah, very cool. I had a previous implementation that took an approach that looked more like yours. Only, instead of calculating offsets internally, it would just build-up another array. It was something like this:

Really, the only difference here is that I'm iterating over the main collection using a

for-inloop and you're doing it using an index loop; but, we're both building up intermediary chunks and then appending them to the result.I love how many ways there are to do stuff like this!

@Ben,

Very clean! I like your implementation. 😀

@All,

A word of caution. It seems that the

arraySlice()function in Lucee has some serious performance overhead and does not play nicely with very large arrays. As the size of the array increases, the performance of thearraySlice()method goes does drastically:www.bennadel.com/blog/4253-arrayslice-has-an-exponential-performance-overhead-in-lucee-cfml-5-3-8-201.htm

By switching from

arraySlice()and over to an algorithm, like the ones that @Bruce and I were discussing, I was able to improve one data-processing algorithm by 500x.Thanks, yet again Ben!

Just wondered how you handle your

utilities.cfc? I have a sprawling one that has been updated over, well, basically my entire career, and is instantiated as a singleton and passed into pretty much all of my other CFCs... but if anyone has a more elegant way, I'm sure it's you.As an aside, I've often run into a similar problem with MSSQL (> 2000 params) and solved with a dynamically created CTE containing all the IDs I need and sub selecting that in the

WHEREclause.Something like:

You have to manually sanitize your ID list first as you can't use

cfqueryparam, but it works well for me.@Gary,

Oh chickens, I love the idea of creating a materialized table with a list of IDs. And, more than that, the fact that you used the comma-delimited nature of the ColdFusion List to provide the

VALUEScollection - well played!Re:

Utilities.cfc, honestly, I used to try to be more clever with it; but, in the end, I've started to remove the complexity and do exactly what you are doing: one gigantor component with a grab-bag full of small functions. Then, I just inject it wherever it is needed. Basically the same as Lodash, but on the server.The only real exception to that is if I see a number of utility methods that are all tightly related and specialized, I may break those out into their own library. Kind of like when I wanted to do a bunch of things with Java RegEx functionality, and I put that it into its on

JREcomponent.But mostly, I just jam them all into

Utilities.cfcand call it a day.Just thought I'd come back and update this with some new information...

Having been using this approach for many years, I've finally run into an issue whereby SQL Server is unable to process such a complex query. In this instance we're passing a list of around 60k IDs, which to be fair, is a bit excessive!

SQL Server returns an error I've never seen before:

The query processor ran out of internal resources and could not produce a query plan. This is a rare event and only expected for extremely complex queries or queries that reference a very large number of tables or partitions. Please simplify the query.Careful out there, folks.