Modernizing My CSV (Comma Separated Value) Parser In Lucee CFML 5.3.7.47

In the past week, I've written a few posts about generating CSV (Comma Separated Value) data in ColdFusion, including some experimentation with "lazy" queries and streaming CSV files in Lucee CFML. Just coincidentally, Adam Cameron asked me about a very old post that I wrote for parsing CSV data in ColdFusion. I had taken that post down due to some questionable content; so, I thought this was a perfect opportunity to rewrite my CSV parser using modern ColdFusion syntax in Lucee CFML 5.3.7.47.

All the CSV parsing that I perform is based on a non-back-tracking Regular Expression (RegEx) that I wrote with the help of Steven Levithan. It uses a technique called "Unrolling the Loop", which allows for "special" substrings (such as escaped quotes) without leading to catastrophic back-tracking and never-ending patterns.

Regular Expressions - especially complex ones - are never easy to read, let alone write. To help maintain some clarity and maintainability, I am breaking out the RegEx pattern generation into its own User Defined Function (UDF) with copious amounts of comments. I'm also using verbose mode to allow for white-space that won't impact the mechanics of the pattern itself:

<cfscript>

/**

* I return a Java Pattern Matcher for the given CSV input and field delimiter.

*

* @input I am the CSV data being parsed.

* @delimiter I am the field delimiter.

*/

private any function getPatternMatcher(

required string input,

required string delimiter

) {

var escapedDelimiter = delimiter.reFind( "\W" )

? "\#delimiter#"

: delimiter

;

var patternParts = [

// VERBOSE FLAGE: So that we can add white-space characters in the various

// parts (for readability) and they won't affect the RegEx pattern mechanics.

"(?x)",

// Make sure that the next match picks up exactly where the last match left

// off. In other words, we only want matches that consume a continuous set of

// characters.

"\G",

// As we match tokens within the CSV input, we're always going to match a

// FIELD value followed by a DELIMITER (or the end of the file).

// --

// NOTE: The "*+" notation is a "Possessive Qualifier", which means that it

// does not allow the pattern matcher to backtrack if it doesn't find a

// match. This forces all patterns to be a continuous set of characters (and

// has a performance benefit as the matcher does not have to remember any

// backtracking positions).

"(?:",

// CAPTURE GROUP 1: A quoted field value.

'"( [^"]*+ (?>""[^"]*+)* )"',

"|",

// CAPTURE GROUP 2: An unquoted field value.

// --

// NOTE: I originally had double-quotes in the following character-set;

// but, I am not sure if I really need it there. Removing it makes the

// matching ever-so-slightly more flexible.

'( [^#escapedDelimiter#\r\n]*+ )',

")",

// CAPTURE GROUP 3: The delimiter.

"(

#escapedDelimiter# |

\r\n? |

\n |

$

)"

];

var patternText = patternParts.toList( "" );

var matcher = createObject( "java", "java.util.regex.Pattern" )

.compile( javaCast( "string", patternText ) )

.matcher( javaCast( "string", input ) )

;

return( matcher );

}

</cfscript>

I know that there are a lot of people in this world that don't like comments; and, who think that by leaving comments I have somehow fundamentally failed to express the intend of my code. Well, I'm pretty sure that those people have never written Regular Expressions!

This Regular Expression pattern works by capturing quoted (qualified) and non-quoted field values using two different groups. As we match the pattern against the input CSV data, we can therefore vary our parsing logic based on which capture group is null / populated.

ASIDE: Normally, I would not include the

javaCast()calls for my interactions with the lower-level Java constructs; Lucee CFML generally doesn't need this as it uses the proper Types under the hood. However, to make this more compatible with the Adobe ColdFusion runtime, I'm including them in this implementation.

To modernize this whole approach, I've wrapped it in a ColdFusion Component that exposes two methods: one that parses raw CSV input; and, on that parses a CSV file:

component

output = false

hint = "I provide methods for parsing CSV (Comma Separated Values) inputs and files."

{

this.COMMA = ",";

this.TAB = chr( 9 );

// If this delimiter is passed-in, the parser will examine a portion of the input and

// try to determine, roughly, which delimiter is being used in the data.

this.GUESS_DELIMITER = "";

// ---

// PUBLIC METHODS.

// ---

/**

* I parse the given CSV input value using the given delimiter. The results are

* returned as a set of nested arrays. The rows are ASSUMED to be delimited by new

* line and / or row return characters.

*

* @input I am the CSV data being parsed.

* @delimiter I am the field delimiter.

*/

public array function parseCsv(

required string input,

string delimiter = this.GUESS_DELIMITER

) {

input = removeTrailingLines( input );

// Short-Circuit: It's not really possible to differentiate an empty input from

// an input that has an empty row. As such, we're just going to make a judgment

// call that an empty input has no records.

if ( ! input.len() ) {

return( [] );

}

if ( delimiter == this.GUESS_DELIMITER ) {

delimiter = getBestDelimiter( input );

}

testDelimiter( delimiter );

var matcher = getPatternMatcher( input, delimiter );

var QUOTED_FIELD_GROUP = javaCast( "int", 1 );

var UNQUOTED_FIELD_GROUP = javaCast( "int", 2 );

var DELIMITER_GROUP = javaCast( "int", 3 );

var ESCAPED_QUOTE = """""";

var UNESCAPTED_QUOTE = """";

var rows = [];

var row = [];

while ( matcher.find() ) {

// Our pattern matches quoted and unquoted fields in different capturing

// groups. As such, the only way we can determine which type of field was

// captured in this match is to see which one of the groups is NULL.

var quotedField = matcher.group( QUOTED_FIELD_GROUP );

// If the quotedField variable exists, it means that we captured a quoted

// field. And, if it's null / undefined, it means that we captured an

// unquoted field.

if ( local.keyExists( "quotedField" ) ) {

row.append( quotedField.replace( ESCAPED_QUOTE, UNESCAPTED_QUOTE, "all" ) );

} else {

row.append( matcher.group( UNQUOTED_FIELD_GROUP ) );

}

var capturedDelimiter = matcher.group( DELIMITER_GROUP );

// If our captured delimiter has a length, it was either a field delimiter

// or a row delimiter.

if ( capturedDelimiter.len() ) {

if ( capturedDelimiter == delimiter ) {

// In the case of a field delimiter, there's nothing to do - the

// matcher will just move onto the next field.

} else {

// In the case of a row delimiter, we need to gather up the current

// row in the results and then start the next row.

rows.append( row );

row = [];

}

// If our captured delimiter has no length, it means that it matched the end

// of the CSV input, which is also the end of the current row. We need to

// gather up the current row in the results; but, we don't need to bother

// starting a new row - there will be no more (meaningful) matches.

} else {

rows.append( row );

// In order to prevent an extra empty row from being appended to the

// results, we have to explicitly break out of the loop.

break;

}

} // END: While.

// The CSV input is expected to be in the format of FIELD followed by DELIMITER.

// However, if the user passed-in a delimiter that does not match the delimiter

// in the actual data, the pattern matcher will never match. In such a case, we

// need to let the user know that this was unexpected.

if ( ! rows.len() ) {

throw(

type = "CsvParser.UnexpectedEmptyResults",

message = "Results should not be empty.",

detail = "This can happen if you use an incompatible delimiter."

);

}

return( rows );

}

/**

* I parse the given CSV files using the given delimiter. The results are returned as

* a set of nested arrays. The rows are ASSUMED to be delimited by new line and / or

* row return characters.

*

* @inputFile I am the CSV file being parsed.

* @delimiter I am the field delimiter.

*/

public array function parseCsvFile(

required string inputFile,

string delimiter = this.GUESS_DELIMITER

) {

return( parseCsv( fileRead( inputFile ), delimiter ) );

}

// ---

// PRIVATE METHODS.

// ---

/**

* I examine the given CSV input and determine which delimiter (Comma or Tab) to use.

* This algorithm is naive and simply looks at which one MAY create more fields.

*

* @input I am the CSV input being examined.

*/

private string function getBestDelimiter( required string input ) {

var prefix = input.left( 1000 );

var commaCount = prefix.listLen( this.COMMA );

var tabCount = prefix.listLen( this.TAB );

if ( commaCount >= tabCount ) {

return( this.COMMA );

} else {

return( this.TAB );

}

}

/**

* I return a Java Pattern Matcher for the given CSV input and field delimiter.

*

* @input I am the CSV data being parsed.

* @delimiter I am the field delimiter.

*/

private any function getPatternMatcher(

required string input,

required string delimiter

) {

var escapedDelimiter = delimiter.reFind( "\W" )

? "\#delimiter#"

: delimiter

;

var patternParts = [

// VERBOSE FLAGE: So that we can add white-space characters in the various

// parts (for readability) and they won't affect the RegEx pattern mechanics.

"(?x)",

// Make sure that the next match picks up exactly where the last match left

// off. In other words, we only want matches that consume a continuous set of

// characters.

"\G",

// As we match tokens within the CSV input, we're always going to match a

// FIELD value followed by a DELIMITER (or the end of the file).

// --

// NOTE: The "*+" notation is a "Possessive Qualifier", which means that it

// does not allow the pattern matcher to backtrack if it doesn't find a

// match. This forces all patterns to be a continuous set of characters (and

// has a performance benefit as the matcher does not have to remember any

// backtracking positions).

"(?:",

// CAPTURE GROUP 1: A quoted field value.

'"( [^"]*+ (?>""[^"]*+)* )"',

"|",

// CAPTURE GROUP 2: An unquoted field value.

// --

// NOTE: I originally had double-quotes in the following character-set;

// but, I am not sure if I really need it there. Removing it makes the

// matching ever-so-slightly more flexible.

'( [^#escapedDelimiter#\r\n]*+ )',

")",

// CAPTURE GROUP 3: The delimiter.

"(

#escapedDelimiter# |

\r\n? |

\n |

$

)"

];

var patternText = patternParts.toList( "" );

var matcher = createObject( "java", "java.util.regex.Pattern" )

.compile( javaCast( "string", patternText ) )

.matcher( javaCast( "string", input ) )

;

return( matcher );

}

/**

* I remove any trailing empty lines.

*

* @input I am the CSV data being parsed.

*/

private string function removeTrailingLines( required string input ) {

return( input.reReplace( "[\r\n]+$", "" ) );

}

/**

* I assert that the given delimiter can be used by this parser. If it can, this

* method exits quietly; if not, it throws an error.

*

* @delimiter I am the delimiter being tested.

*/

private void function testDelimiter( required string delimiter ) {

if ( delimiter.len() != 1 ) {

throw(

type = "CsvParser.InvalidDelimiter.Length",

message = "Field delimiter must be one character.",

detail = "The field delimiter [#delimiter#] is not supported."

);

}

if (

( delimiter == chr( 13 ) ) ||

( delimiter == chr( 10 ) )

) {

throw(

type = "CsvParser.InvalidDelimiter.RowDelimiter",

message = "Field delimiter matches row delimiter.",

detail = "The field delimiter [#delimiter#] cannot be the same as the implicit row delimiter."

);

}

}

}

Once you get past the complexity of the Regular Expression pattern itself, there's really not all that much going on here. All we're doing it creating a Java Pattern Matcher and then looping over the CSV input looking at the three captured groups.

To test this out, I created a sample CSV file that has various quirks:

ID,Value,Feature Being Tested

1,hello,A raw value

2,"world",A qualified value

3,"jib,jab","A qualified value with "","" embedded"

4,ben "jamin" nadel,A raw field with embedded quotes

5,,An empty raw field

6,"",An empty qualified field

7,after empty,Making sure the empty line above worked

Now, all we have to do is instantiate our CsvParser.cfc ColdFusion component and try to read this file:

<cfscript>

parser = new CsvParser();

dump( parser.parseCsvFile( "./sample.csv" ) );

</cfscript>

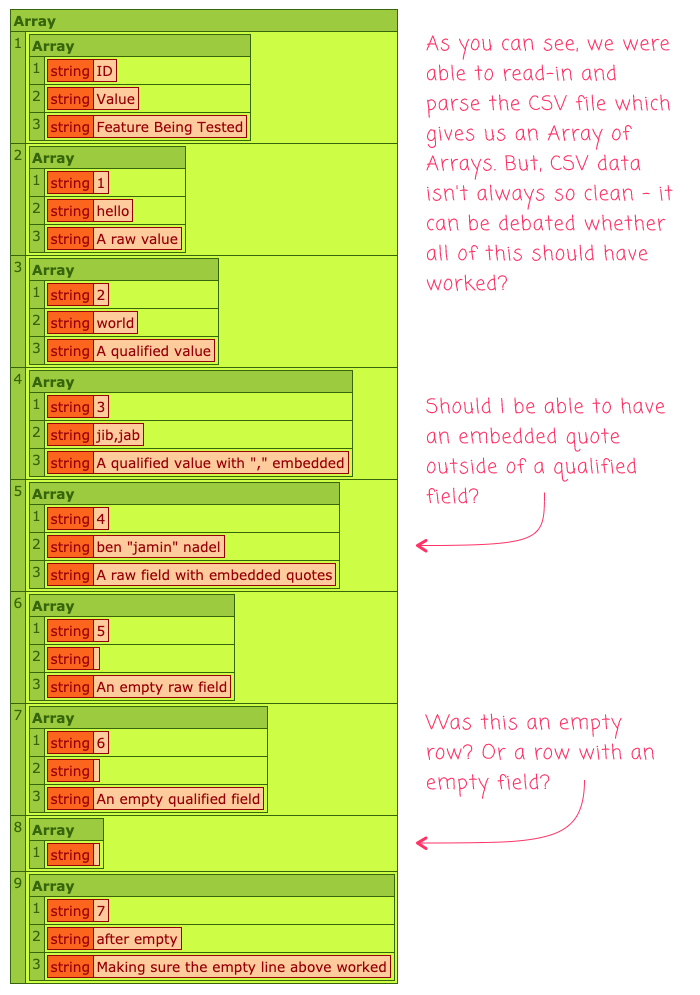

And, when we run this ColdFusion code, we get the following output:

As you can see, we were able to parse the sample CSV file into a multi-dimensional array of results. But, CSV data can be somewhat ambiguous. Take the 8th element in this Array - it was an empty row in my CSV file; but, does that mean it's an entirely blank row? Or, is it a row with a single, empty field value? Probably, I could update my logic to ignore rows that end-up with a single, empty value.

As much as I celebrated the ease with which we can generate CSV files in ColdFusion, it's also not that difficult to consume CSV files in our ColdFusion applications. That is, once you get past the Regular Expression! CSV data - and text-data like JSON (JavaScript Object Notation) - ends-up being a rather solid choice when it comes to the interoperability of systems.

Want to use code from this post? Check out the license.

Reader Comments

Impressive how you've clearly documented what would otherwise be a nightmare to read after the fact.

@Chris,

Thanks! RegEx is one of those technologies that makes way more sense in the mind of author vs. the mind of the reader. Have you ever seen one of those patterns that people use to validate email addresses 🤣 The only tool we have is copious amounts of comments.

@ Ben

The verbose flag is a game changer! I hadn't heard of it before. My best form of documenting a REGEX expression to date has been simply to assign it to a thoughtful variable name. At least then the reader would know what it's purpose was (intended) to be. The Unrolling the Loop is new for me too. REGEX never ceases to amaze me...such a clever, cryptic, capable language.

@Chris,

The verbose flag is pretty cool. It's harder to see it in action in a

CFScriptblock since there's not as much white-space to be had. But, you can see it in the last part of the pattern where I have all the delimiters on a new-line inside the double-quoted, multi-line string.Inside a verbose flag, you should be able to pull in an actual white-space character by escaping it.... I think... like

\(where there is a space after that slash.Thanks a bunch for this. I've been using your CSVToArray function in the web app I manage for a while, and it's one of the few things that isn't working now that I'm looking into moving us over to Lucee.

@James,

Ha ha, perfect timing 💪 Exciting to hear about your move to Lucee, I've really been loving it as an engine. I hope your transition goes smoothly. Our biggest stumbling block, once the non-starters were gone, was Arrays being passed by reference in Lucee. In ACF, they are always passed by value; and, when we moved to Lucee, we had some "tricky" code that was originally depending on the arrays being passed by value, which caused production bugs after we moved.

This is great! Regex to the rescue!

I am playing with this component now and so far on my machine running ACF 2018, the new CFC performs on average ~33% faster using a sample CSV with 56 columns and 5000 rows. 💪

Since the CFC reads the entire file into memory, I wonder at what point memory will become a bottleneck, especially with extremely large CSV files.

Previously, when dealing with large CSV files, I've cflooped my way through each row, but that doesn't work with CSV files that have line breaks in them.

I'm just thinking out loud here, but I wonder if there would be a way to modify this new CFC to "buffer" the incoming file so that it wouldn't have to read the whole thing into memory and instead, loop over one or more rows until it had X amount of data? That would be handy too if you just needed to get header columns of a CSV file only.

Perhaps there's an underlying Java method for reading just a certain amount of a file that we could parse through and assemble the output array. The logic might look something like this:

@David,

That's awesome that is faster!! Woot woot 💪 I think buffering would definitely be doable, though it's not something I've tried in the past. I have seen parsers that will "emit" tokens rather than returning an entire data-structure. So, it might emit a "field value" and then emit a "row delimiter" ... or something, I'm just shooting from the hip. But, I think ultimately people don't usually need that much flexibility. I think the idea of row-based buffering would be the right balance of performance and usability.

I'll let that sit in the back of my brain for a bit.