The Effect Of File-IO On Performance Experimentation In Docker And Lucee CFML

Ever since I learned that Lucee CFML supports parallel array iteration, I've been itching to find a good place to really leverage it. And, recently, it occurred to me that I might be able to use it to boost PDF generation at InVision. Currently, when you generate a PDF for a prototype, we let the CFDocument tag slurp in images using securely-signed, remote URLs. But, what if I downloaded those images to a scratch folder first; and then, used local file-paths in the CFDocument tag? Would it change the performance characteristics of the PDF generation?

Well, as it turns out, Yes and No. And, I'm not sure - it's confusing.

Let's look at what I was trying to do. First, I set up a "control" version of the Lucee CFML code in which I am generating a PDF with 91 pages, where each page contains a single image from a remote URL:

<cfscript>

	// Read-in an array of URLs for images stored in the CDN. We're going to generate a
	// PDF where each document page contains one of these images.
	remoteUrls = deserializeJson( fileRead( "./remote-urls2.json" ).trim() );

	startedAt = getTickCount();

</cfscript>
<cfoutput>

	<cfdocument
		format="pdf"
		filename="./test.pdf"
		overwrite="true"
		orientation="portrait">

		<!--- Create a new PDF document page for each remote URL. --->
		<cfloop index="remoteUrl" array="#remoteUrls#">

			<cfdocumentsection>
				<p>
					<img src="#remoteUrl#" height="600" />
				</p>
			</cfdocumentsection>

		</cfloop>

	</cfdocument>

	<p>
		Duration: #numberFormat( getTickCount() - startedAt )# ms
	</p>

	<iframe
		src="./test.pdf?_=#getTickCount()#"
		style="width: 100% ; height: 800px ;">
	</iframe>

</cfoutput>

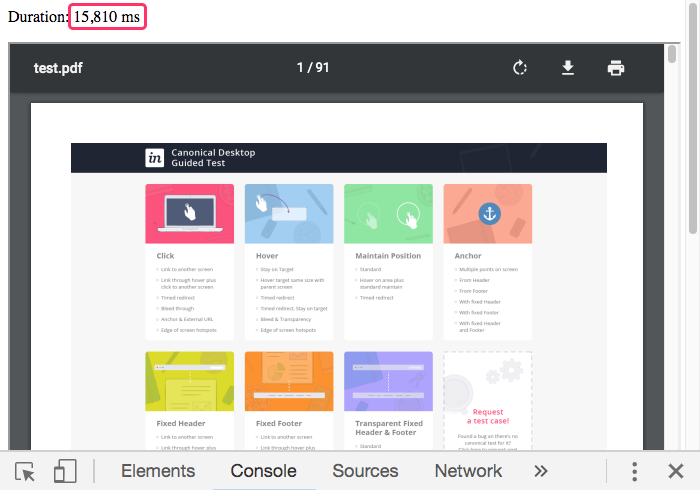

As you can see, there's almost nothing going on here. All I'm doing is generating a bunch of <img> tags inside of the CFDocument tag and then letting ColdFusion do its thing. And, when we run this ColdFusion in my local development environment (ie, inside Docker for Mac), we get the following output:

As you can see, we generated the 91-page PDF in about 15-seconds using remote URLs in Lucee CFML. The timing of this page changes from refresh-to-refresh. But, generally, it takes somewhere between 12-seconds and 20-seconds to complete.

ASIDE: After all these years, I'm still shocked at how easy ColdFusion makes it to generate PDF documents. I mean, look at how little code that takes!

Now for the experiment: instead of embedding the remote URLs right in the CFDocument tag, I'm going to download the images, in parallel threads, and then embed the images using local file paths:

<cfscript>

	// Read-in an array of URLs for images stored in the CDN. We're going to generate a
	// PDF where each document page contains one of these images.
	remoteUrls = deserializeJson( fileRead( "./remote-urls2.json" ).trim() );

	startedAt = getTickCount();

	// As a performance experiment, instead of leaving it up to CFDocument to download
	// all of the remote images, let's pre-download the images IN PARALLEL THREADS to see
	// if it makes the PDF generation any faster.
	localUrls = remoteUrls.map(
		( remoteUrl, i ) => {

			// NOTE: I am saving the images into a directory outside of the web-root in
			// order to make sure that the resultant URLs are NOT WEB ACCESSIBLE. I
			// wanted to rule-out any possibility that the CFDocument tag was still
			// making a remote request.
			var localUrl = expandPath( "../../../scratch/pdf-test/#i#.jpg" );

			fileCopy( remoteUrl, localUrl );

			return( localUrl );

		},
		// Run the mapping / downloading in parallel.
		true
	);

	downloadDuration = ( getTickCount() - startedAt );

</cfscript>
<cfoutput>

	<!---
		NOTE: This time, since we're reading from the local file-system, we need to use
		the "localUrl" attribute so that CFDocument doesn't try to make CFHTTP requests
		when embedding the images.
	--->
	<cfdocument
		format="pdf"
		filename="./test2.pdf"
		overwrite="true"
		orientation="portrait"
		localUrl="true">

		<!---
			Create a new PDF document page for each LOCAL image file.
			--
			NOTE: I found that I had to prefix the local image path with "file://" or the
			image would fail to embed in the resultant PDF, even with localUrl="True" in
			the CFDocument attributes.
		--->
		<cfloop index="localUrl" array="#localUrls#">

			<cfdocumentsection>
				<p>
					<img src="file://#localUrl#" height="600" />
				</p>
			</cfdocumentsection>

		</cfloop>

	</cfdocument>

	<p>
		Duration: #numberFormat( getTickCount() - startedAt )# ms
		( Downloading: #numberFormat( downloadDuration )# ms )
	</p>

	<iframe
		src="./test2.pdf?_=#getTickCount()#"
		style="width: 100% ; height: 800px ;">
	</iframe>

</cfoutput>

As you can see, this time, I'm mapping the remote URLs array onto a collection of local URLs that are outside of Lucee's web root. And, because I'm using Lucee's parallel array iteration, that map-operation should download the images using up to 20 (the default) concurrent threads. Then, in the CFDocument tag, I'm referencing the local, downloaded images instead of the remote URLs.
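To make that thread limit explicit, here is a minimal sketch, assuming the optional third "maxThreads" argument that Lucee accepts on parallel iteration. The sleep() call stands in for a slow download; the item values and the limit of 5 are made up for illustration and are not part of the experiment above.

```cfml
<cfscript>

	// Hypothetical stand-in for the remote URLs: ten items to "download".
	items = [ 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 ];

	startedAt = getTickCount();

	results = items.map(
		( item, i ) => {

			// Simulate a slow network request (200ms per item).
			sleep( 200 );

			return( "item-#item#" );

		},
		// Run the mapping in parallel.
		true,
		// Cap the number of concurrent threads (Lucee defaults to 20).
		5
	);

	// With 10 items, 5 threads, and 200ms per item, this should land well under
	// the roughly 2,000ms that a serial loop would need.
	writeOutput( "Mapped #results.len()# items in #numberFormat( getTickCount() - startedAt )# ms" );

</cfscript>
```

Lowering or raising the cap is one way to check whether the download step is bound by the network or by something else, such as disk writes.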

NOTE: Even when using localUrl="true" on the CFDocument tag, I found that Lucee would continue to make HTTP requests for the images until I actually prefixed the src attribute with file://. I didn't realize this was happening until I moved the local images outside of the web-root and discovered that the images no longer showed-up in the generated PDF.

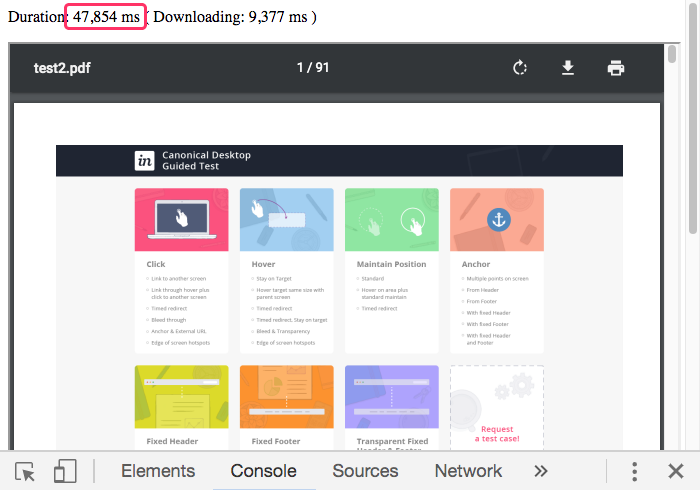

Now, when we run this experimental version of the Lucee CFML code in my local development environment (ie, inside Docker for Mac), we get the following output:

This time, generating the PDF document took 3-times as long when using the local URLs compared to the "control" version which used remote URLs. In fact, just downloading the images took almost as long as the original version took to generate the entire PDF. And, after refreshing the page a few times, I even saw it take as much as 30-seconds just for the download portion of this experiment.

At first blush, it appears that attempting to pre-download the images and then use local URLs in the CFDocument tag leads to much poorer performance. But, I have to keep in mind that my local development environment isn't necessarily indicative of production performance.

Locally, our team does our ColdFusion development inside of Docker containers (Docker for Mac specifically) in order to ensure that everyone is running the same setup; and, that we [almost] never run into a "Works on my machine" scenario.

CAUTION: I know very little about Docker and containerization. As such, it is quite possible that I have Docker settings that are shooting me in the foot. My current MacBook Pro is set to give Docker 4-Cores and 10GB of RAM (though, Docker for Mac appears to take all the RAM it damn well pleases). I'm also using :delegated on my Volume mounts.

Now, Docker for Mac has known issues when it comes to File IO (Input / Output). As such, I wanted to re-run these experiments closer to the "metal" by using CommandBox and running a Lucee CFML server directly on my host computer.

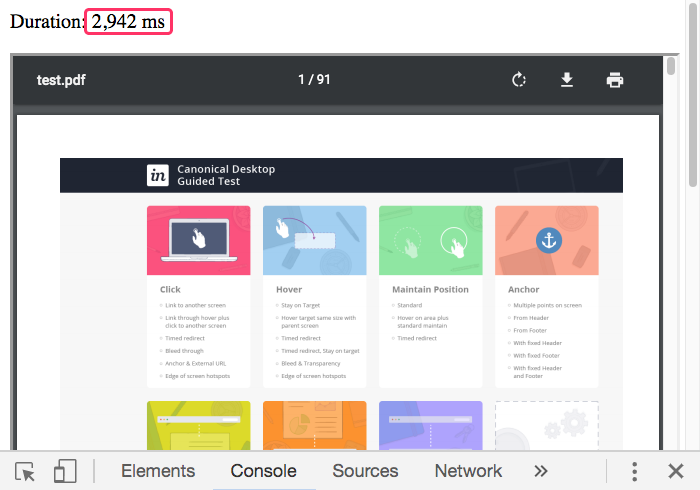

When using CommandBox, here's what I get for the "control" version of the PDF generation:

Holy chickens! When using the CFDocument tag in a CommandBox Lucee CFML server, the 91-page PDF is generated in about 3-seconds. Refreshing the page a few times gives me results anywhere between 2-seconds and 4-seconds. This is 4-times faster than when running inside my Docker container.

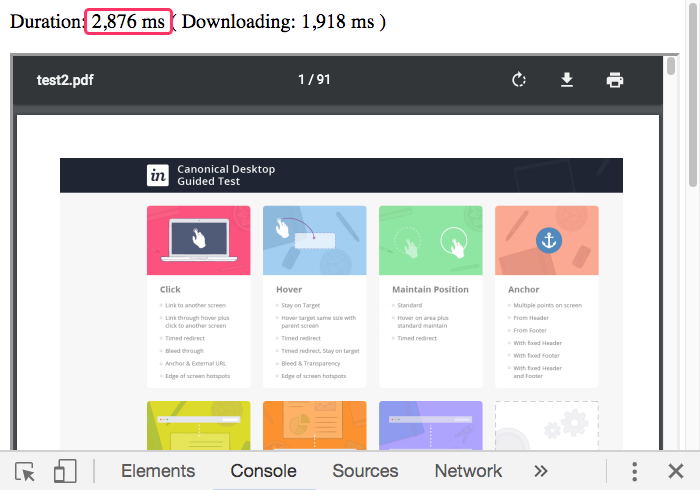

But, here's the kicker: this is what I get when running the experimental version in the CommandBox Lucee CFML server:

This time, the experimental version is on-par with the "control" version in terms of performance. In this particular instance, the overall time is a tiny-bit faster; but, this is not always the case. If I refresh the page, it usually takes somewhere between 2-seconds and 4-seconds for the experimental version to complete.

So, from a performance standpoint, attempting to pre-download the images doesn't appear to have a meaningful impact. But, that shines a light on the real take-away from this experiment, which is the fact that Docker file IO has a very significant impact on performance! When running outside of Docker, the two approaches are roughly equivalent. But, when running inside Docker, the approach that uses more file IO is at least 3-times slower.
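One way to isolate the file IO cost from the PDF generation is a small write-then-read benchmark that can be run in both environments. This is a rough sketch, not something from the experiment above: the file count, the payload size, and the scratch directory are all assumptions. In particular, the temp directory inside a container may not live on a mounted volume, so you would want to point scratchDirectory at the mount you actually care about.

```cfml
<cfscript>

	// ASSUMPTION: swap this for a folder on the volume you want to measure. The
	// temp directory is only a convenient default. A doubled slash in the path
	// is harmless if getTempDirectory() already ends with one.
	scratchDirectory = ( getTempDirectory() & "/file-io-test-" & createUUID() );

	// ASSUMPTION: arbitrary workload - 200 files of roughly 100KB each.
	fileCount = 200;
	payload = repeatString( "x", 100 * 1024 );

	directoryCreate( scratchDirectory );

	try {

		// Time the writes.
		startedAt = getTickCount();

		for ( i = 1 ; i <= fileCount ; i++ ) {

			fileWrite( "#scratchDirectory#/#i#.txt", payload );

		}

		writeDuration = ( getTickCount() - startedAt );

		// Time the reads.
		startedAt = getTickCount();

		for ( i = 1 ; i <= fileCount ; i++ ) {

			fileRead( "#scratchDirectory#/#i#.txt" );

		}

		readDuration = ( getTickCount() - startedAt );

		writeOutput( "Write: #numberFormat( writeDuration )# ms, Read: #numberFormat( readDuration )# ms" );

	} finally {

		// Clean up the scratch files regardless of how the test went.
		directoryDelete( scratchDirectory, true );

	}

</cfscript>
```

Running the same template inside the Docker container and on the CommandBox server gives two sets of numbers that can be compared directly, without CFDocument or the network in the mix.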

The real dilemma here is that I have no idea how much of this will be indicative of production performance. After all, we're not running Macs in production - we've got an army of *nix servers being managed by Kubernetes (K8s) on Amazon EC2.

If I can't "trust" file IO performance locally, how will I know which experiments are worthwhile? In this case, it was easy enough to replicate the logic in an isolated Lucee CFML server. But, not all cases will be that easy. And, I have no idea if a "bare metal" CFML server running in CommandBox is any more relevant to what's happening in production.

I don't have any good answers here - only questions. Thankfully, most algorithms are not file-IO bound; so, the overhead of the Docker container is much less of an issue. But, in cases where I do have to do a lot of file manipulation, the picture becomes much less clear to me.

Epilogue On The RAM Disk (ram://) Virtual File System (VFS)

Yesterday, when discussing the Docker file-IO issues with one of our Site Reliability Engineers (SRE), Maxwell Cole, he suggested that I try using a "RAM disk". A RAM disk is part of ColdFusion's Virtual File System (VFS) that allows us to treat segments of memory like a traditional file-system. The hope was that I could use file IO without the overhead of reading-from / writing-to a mounted volume.
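For context, this is roughly what working with the RAM disk looks like for plain file operations. It is a minimal sketch that assumes the ram:// resource is enabled on the server; the file name is hypothetical and used only for this demonstration.

```cfml
<cfscript>

	// Hypothetical file name on the in-memory file system.
	ramPath = "ram:///example.txt";

	// Write to, and then read back from, memory using the normal file functions.
	fileWrite( ramPath, "Hello from memory" );

	writeOutput( fileExists( ramPath ) & "<br />" );
	writeOutput( fileRead( ramPath ) );

	// The RAM disk consumes memory, so clean up when done.
	fileDelete( ramPath );

</cfscript>
```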

Unfortunately, I could not get the ram:// disk to work with image src attributes inside the CFDocument tag. If I tried something like:

src="ram:///image.jpg"

... the PDF would generate, but the image would be broken. And, if I tried:

src="file:///ram:///image.jpg"

... the PDF generation would just hang indefinitely. I also tried various combinations of // and /// and nothing seemed to work.


Reader Comments

I am curious how that will run on WSL2 with Windows 10 this May at release. I have always found local to be slower than the server, so this is a surprise for me also. Good share Ben! Now we wait for the container gurus to enlighten us all.

@John,

Right, local is usually slower; but, in my experience, at least it's proportionally slower when compared to the server. In this case, with the file IO, it feels like a fundamental difference that is unpredictable until you actually get to production.

The most unsettling thing about this is the false negative you might get on a sanity check. For example, you have an itch to test something, so you throw a proof-of-concept together locally and try it out. If you see terrible performance, you might think, "Meh, poc failed, moving on to another task", but you might only be seeing an outlier issue in your local Docker and may not realize that it would be radically different in production.

That's the most troublesome part.