Viewing Software Engineers Through The Lens Of The Milgram Experiment On Obedience To Authority Figures

Last week, I spent a good amount of time deleting dead code from our application codebase. I love to delete code. As far as I'm concerned, few things in life offer as much excitement and satisfaction as reducing code rot. In fact, I love it so much that I'm often confused by software engineers who leave dead code in the application in the first place. Over the weekend, however, I was listening to someone talk about The Milgram Experiment on Obedience to Authority Figures, and I couldn't help but draw parallels between the person who shocks another human being and the engineer who leaves known bugs in the user experience and rotting code in the codebase.

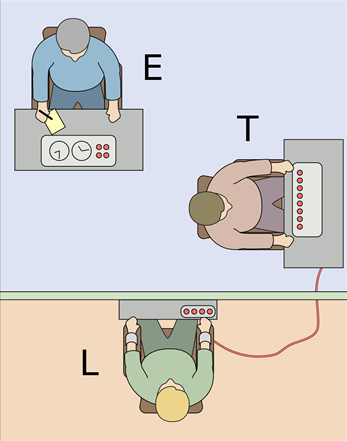

If you are unfamiliar with The Milgram Experiment (conducted by Stanley Milgram), it's an experiment in which an Experimenter (i.e., the authority figure) orders a Teacher (the subject) to give increasingly dangerous electric shocks to a Learner who is believed to be in another room. The following graphic is borrowed from the Wikipedia page on the topic:

[Graphic: the Milgram Experiment setup, showing the Experimenter, the Teacher, and the Learner]

The intent of the study was to see how people would respond when asked to do something that conflicted with their own morals. Before the study, it was believed that most subjects would refuse to send an electric shock to the Learner once they believed the Learner was in pain. However, when the experiment was conducted, a surprising number of subjects ended up delivering the most powerful 450-volt shock, even when the thought of doing so felt absolutely abhorrent.

In Milgram's first set of experiments, 65 percent (26 of 40) of participants administered the experiment's final, massive 450-volt shock, though many were very uncomfortable doing so. At some point, every participant paused and questioned the experiment, and some said they would refund the money they were paid for participating. Throughout the experiment, subjects displayed varying degrees of tension and stress: they were sweating, trembling, stuttering, biting their lips, groaning, and digging their fingernails into their skin, and some even had nervous laughing fits or seizures.

When we hear about findings like this, we all like to believe that we would never do such things. We like to believe that "just following orders" is a human flaw that we don't have. But experiments like this demonstrate that such assumptions are optimistic at best and completely wrong at worst.

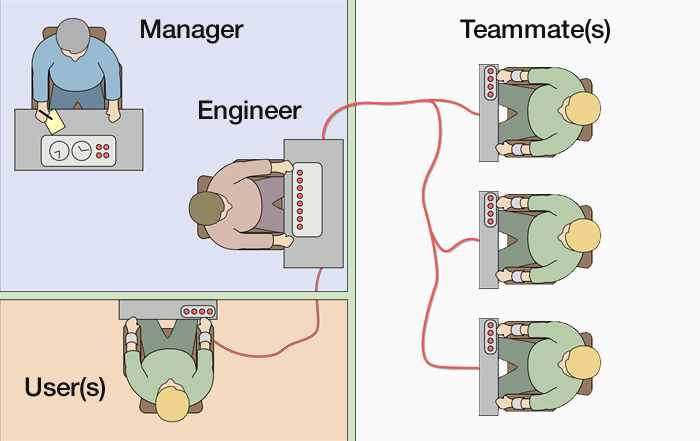

Now that we've seen how people respond to authority figures when physical pain is on the line, let's think about how software engineers respond to authority figures when mere abstract frustration and inconvenience are at stake. Let's take the Milgram Experiment diagram and rework it to reflect our engineering team dynamic:

[Graphic: the Milgram Experiment diagram reworked to reflect an engineering team]

When we look at the engineering team like this, it's much less surprising that we engineers leave dead code in the codebase and allow known bugs to persist in the user experience (UX). Perhaps at some point an authority figure made it clear that we didn't have time to fix problems, or that those problems weren't a high enough priority on the product roadmap. Or maybe they said that cleaning up dead code didn't add value from the user's perspective. And so, we move on to the next task, even though leaving the code as-is conflicts with our internal moral compass and our sense of right and wrong.

In the Milgram Experiment, the subjects often raised concerns and objections about sending a jolt of electricity into the human being in the next room. But the experimenter would prod the subject into continuing with the following phrases (from Wikipedia):

- Please continue.

- The experiment requires that you continue.

- It is absolutely essential that you continue.

- You have no other choice, you must go on.

With nothing but words, the experimenter was able to get 65 percent of subjects, against their will, to deliver extremely painful electric shocks. I wonder: what words do we use in a software engineering context?

- It's not up to us, it's up to the Product team.

- We have to hit our deadline.

- The marketing team is putting out a press release next week.

- You're not closing enough tickets.

- We're going to abandon this code eventually anyway.

- This is just a short-term solution.

- Your team's velocity needs to improve.

- This only affects a small number of users.

- This isn't a priority for us right now.

- We'll come back and fix this later.

- Because that's what management wants.

- Why is this taking so long?

- You need to step up and get this done.

I am not writing about this to provide a solution; I don't have one. I am only writing about this to cultivate, in myself, a better sense of empathy and understanding. When I see code that frustrates me and behavior that confuses me, I have to remember that actions don't necessarily represent intent. I have to remember that engineers aren't leaving dead code in place because they don't care; they're leaving dead code in place because they aren't given the freedom to clean it up. I have to remember that engineers aren't leaving bugs in place because they don't care about the users; they're leaving bugs in place because they aren't given the authority to deviate from the product roadmap.

Reader Comments

This is a very interesting lens through which to view how we develop software (and lots of other things).

Milgram determined that once his subjects perceived themselves as agents acting on behalf of someone else, rather than as independent actors, they essentially delegated their morality to the one giving the instructions. As a result, they felt that someone other than themselves was responsible for their actions and their outcomes.

You may have led me toward an answer to a question that has perplexed me. Since we have collectively learned so much about software development over the past decades, why do we continue to ignore what we know and do so much wrong? Perhaps it's because we surrender our responsibility and view ourselves as agents.

@Scott,

Learning is a tough subject in and of itself. People are always saying that, in this industry, we keep learning the same lessons over and over again; that every generation of software developers keeps identifying patterns that the previous generation knew a long time ago. Like how the old guard will hear "microservice architecture" and be like, "Yeah, or as we called it 20 years ago, Service Oriented Architecture" :D

Honestly, I wish I knew how to get a better grip on this. I think part of the problem is just the massive variety of things that we want to build, and the need to build them on an ever-expanding set of options. It's hard to chalk it up to education, because you can learn patterns and solutions. But if they don't map 1:1 to what you are trying to do, you really need an evolved understanding of those patterns to know how to translate them to your context.

I feel like I'm flailing all the time. Every day is just a struggle to find some level of elegance.