Parsing And Serializing Large Objects Using JSONStream In Node.js

At InVision App, the data-services team has been tasked with migrating vertical-slices of data from one Tenant in one VPN (Virtual Private Network) to another Tenant in another VPN. To do this, we're gathering the database records (MySQL and MongoDB in this case), dumping those records so JSON (JavaScript Object Notation) files, and then importing those JSON files into the target system. This proof-of-concept-cum-production-code workflow has been working fairly well until recently - in the last migration, the size of the data exceeded V8's ability to execute JSON.stringify(). As such, I wanted to take a quick look at how JSON can be parsed and generated incrementally using Node.js Transform streams.

I am not sure, off-hand, which version of Node.js was running on the machine performing the migration; so, V8's ability to parse and stringify JSON may very well be version-dependent. That said, when we went to execute JSON.stringify() on a massive record-set, we were getting the JavaScript error:

RangeError: Invalid string length

A quick Google search will reveal that this error actually means that the V8 process ran "out of memory" while performing the serialization operation. So, instead of trying to serialize the entire record-set in one operation, we need to break the record-set apart and serialize the individual records.

To do this, I am experimenting with the npm module JSONStream by Dominic Tarr. JSONStream presents .parse() and .stringify() methods that provide Node.js Transform streams (aka "through" streams) through which large operations can be broken down into smaller, resource-friendly operations. The nice thing about this module is that the final deliverables are the same - large JSON files; the only difference is that they are being generated and consumed incrementally instead of using a single, resource-exhausting operation.

To test out this module, I'm going to take an in-memory record-set and stream it disk as JSON; then, when the JSON output file has been generated, I'm going to stream it back into memory and log the data to the terminal.

JSONStream provides two methods for serialization: .stringify() and .stringifyObject(). As you can probably guess from the names, stringify() deals with Arrays and stringifyObject() deals with Objects. I am using .stringify() for my demo; but, in either case, it's important to understand that you are not passing a top-level entity to these methods. Instead, you are letting JSONStream generate the top-level entity from the sub-items that you pass to it. Thats why, in the following code, I'm passing individual records to the .stringify() method, rather than trying to pass it the entire record-set.

// Require the core node modules.

var chalk = require( "chalk" );

var fileSystem = require( "fs" );

var JSONStream = require( "JSONStream" );

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

// Imagine that we are performing some sort of data migration and we have to move data

// from one database to flat files; then transport those flat files elsewhere; then,

// import those flat files into a different database.

var records = [

{ id: 1, name: "Terminator" },

{ id: 2, name: "Predator" },

{ id: 3, name: "True Lies" },

{ id: 4, name: "Running Man" },

{ id: 5, name: "Twins" }

// .... hundreds of thousands of records ....

];

// If the record-sets are HUGE, then we run the risk of running out of memory when

// serializing the data as JSON:

// --

// RangeError: Invalid string length (aka, out-of-memory error)

// --

// As such, we're going to STREAM the record-set to a data file using JSONStream. The

// .stringify() method creates a TRANSFORM (or THROUGH) stream to which we will write

// the individual records in the record-set.

var transformStream = JSONStream.stringify();

var outputStream = fileSystem.createWriteStream( __dirname + "/data.json" );

// In this case, we're going to pipe the serialized objects to a data file.

transformStream.pipe( outputStream );

// Iterate over the records and write EACH ONE to the TRANSFORM stream individually.

// --

// NOTE: If we had tried to write the entire record-set in one operation, the output

// would be malformed - it expects to be given items, not collections.

records.forEach( transformStream.write );

// Once we've written each record in the record-set, we have to end the stream so that

// the TRANSFORM stream knows to output the end of the array it is generating.

transformStream.end();

// Once the JSONStream has flushed all data to the output stream, let's indicate done.

outputStream.on(

"finish",

function handleFinish() {

console.log( chalk.green( "JSONStream serialization complete!" ) );

console.log( "- - - - - - - - - - - - - - - - - - - - - - -" );

}

);

// ----------------------------------------------------------------------------------- //

// ----------------------------------------------------------------------------------- //

// Since the stream actions are event-driven (and asynchronous), we have to wait until

// our output stream has been closed before we can try reading it back in.

outputStream.on(

"finish",

function handleFinish() {

// When we read in the Array, we want to emit a "data" event for every item in

// the serialized record-set. As such, we are going to use the path "*".

var transformStream = JSONStream.parse( "*" );

var inputStream = fileSystem.createReadStream( __dirname + "/data.json" );

// Once we pipe the input stream into the TRANSFORM stream, the parser will

// start working it's magic. We can bind to the "data" event to handle each

// top-level item as it is parsed.

inputStream

.pipe( transformStream )

// Each "data" event will emit one item in our record-set.

.on(

"data",

function handleRecord( data ) {

console.log( chalk.red( "Record (event):" ), data );

}

)

// Once the JSONStream has parsed all the input, let's indicate done.

.on(

"end",

function handleEnd() {

console.log( "- - - - - - - - - - - - - - - - - - - - - - -" );

console.log( chalk.green( "JSONStream parsing complete!" ) );

}

)

;

}

);

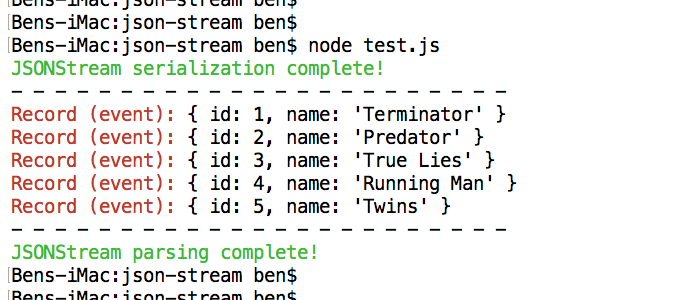

As you can see, the code is fairly straightforward - at least, as straightforward as Streams can be (amiright?!). JSONStream provides Transform streams through which the individual records are being safely aggregated. And, when we run the above code, we get the following terminal output:

Here, you can see the data successfully completed the full life-cycle, being serialized to disk and then deserialized back into memory. But, take note that when reading the data from the file-input stream, each "data" event indicates an individual record in the overall record-set.

To be clear, I haven't yet tried this in our migration project, so I can't testify that it actually works on massive record-sets; but, from what I can see, JSONStream looks like a really easy way to serialize and deserialize large objects using JavaScript Object Notation (JSON). And, the best part is that the files themselves don't need to change - we just need to tweak the way in which we generate and consume those files.

Want to use code from this post? Check out the license.

Reader Comments

@All,

I revisited this idea, using "Newline-Delimited JSON", which is a slightly different but more performant approach:

www.bennadel.com/blog/3233-parsing-and-serializing-large-datasets-using-newline-delimited-json-in-node-js.htm

It still uses JSON; but, it stores a small JSON object per-line, rather than one very large JSON object per file. This has several benefits.