Trying To Understand Unicode Normalization In ColdFusion And Java

Just when you think character encoding can't get any worse - it gets worse. Apparently, in Unicode, some characters can be represented by more than one sequence of bytes. This means that two sequences of code may be structurally different but, functionally equivalent in so much as they render the exact same glyph. This structural difference becomes problematic when you need to match characters, such as with a database query; if the input code sequence doesn't match the persisted code sequence, no match is found even if both code sequences "code" for the same character.

When you're dealing with a file system, things get even more interesting! In my [limited] experience with this problem, structurally different encodings may or may not result in different files. In the demo below, you'll see that, on my Mac, two different code sequences will result in a single file. However, two different code sequences on a Windows computer will result in two different files.

At InVision App, our persisted data tries to keep parity with various file systems since we allow designers to sync design files directly from their local files systems, Dropbox, Google Drive, etc.. This parity, combined with inconsistent Unicode representation, has lead to some problematic behavior and difficult debugging - imagine two database entries with different Unicode representations resulting into a single file being synchronized.

To cope with this problem, Oscar Arevalo and David Bainbridge have been looking into Unicode normalization. In Java (and therefore in ColdFusion), there are built-in libraries that allow a developer to normalize Unicode representations for use within a given context. Using these libraries, we can pick a single representation format at the boundaries of our application so as to keep all persisted data in a consistent state.

Personally, I don't feel comfortable enough with character encoding to go into too much detail; but, according to the Unicode website, there are four different types of normalization - NFC, NFD, NFKC, and NFKD. From what I have read, it seems that NFC is good for general text and has good legacy compatibility. NFD, on the other hand, is preferred for internal processing.

A more in-depth explanation of the pros and cons can be seen here, which states that NFC is a great choice; however, if you need to perform text comparison, NFD is a far superior choice. Since we do a lot of database comparisons, we decided to go with NFD.

That said, let's take a quick look at some code just to get a better sense of what I'm talking about. In the following demo, I'm going to code for the lowercase "e" with an acute accent. This can be represented by a single character; or, by two characters (that rendered combined). Then, I'm going to take both representations and normalize them using the NFC and the NFD formats:

<cfscript>

// The precomposed representation of the e-acute character is represented as a

// single character, \u00E9.

precomposed = chr( inputBaseN( "00E9", 16 ) );

// The combining representation of the e-acute character is represented by two

// characters (e and combining-acute), \u0065 and \u0301, that are visually

// collapsed when rendered.

combining = ( chr( inputBaseN( "0065", 16 ) ) & chr( inputBaseN( "0301", 16 ) ) );

// Demonstrate that these two characters are visually equivalent, but not the same

// from a data-storage standpoint.

writeOutput( precomposed );

writeOutput( " == " );

writeOutput( combining );

writeOutput( " : " & ( precomposed == combining ) );

writeOutput( " ( " & len( precomposed ) & " , " & len( combining ) & " ) " );

writeOutput( "<br /><br />" );

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

// Java provides a utility class that can normalize unicode characters into four

// different forms: NFC, NFD, NFKC, and NFKD.

normalizer = createObject( "java", "java.text.Normalizer" );

normalizerForm = createObject( "java", "java.text.Normalizer$Form" );

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

// NFC - Canonical decomposition, followed by canonical composition.

// Convert both the strings to NFC format - this attempts to combine character

// sequences, though it is not always guaranteed that the normalized string will be

// less-than-or-equal the length of the input.

precomposedNFC = normalizer.normalize( javaCast( "string", precomposed ), normalizerForm.NFC );

combiningNFC = normalizer.normalize( javaCast( "string", combining ), normalizerForm.NFC );

// Demonstrate that the NFC normalized format now makes the two strings both

// functionally equivalent and structurally equivalent.

writeOutput( precomposedNFC );

writeOutput( " == " );

writeOutput( combiningNFC );

writeOutput( " : " & ( precomposedNFC == combiningNFC ) );

writeOutput( " ( " & len( precomposedNFC ) & " , " & len( combiningNFC ) & " ) " );

writeOutput( "<br /><br />" );

// ------------------------------------------------------------------------------- //

// ------------------------------------------------------------------------------- //

// NFD - Canonical decomposition.

// Convert both the strings to NFD format - this attempts to decompose the input into

// a sequence of separate but combining characters.

precomposedNFD = normalizer.normalize( javaCast( "string", precomposed ), normalizerForm.NFD );

combiningNFD = normalizer.normalize( javaCast( "string", combining ), normalizerForm.NFD );

// Demonstrate that the NFD normalized format now makes the two strings both

// functionally equivalent and structurally equivalent.

writeOutput( precomposedNFD );

writeOutput( " == " );

writeOutput( combiningNFD );

writeOutput( " : " & ( precomposedNFD == combiningNFD ) );

writeOutput( " ( " & len( precomposedNFD ) & " , " & len( combiningNFD ) & " ) " );

writeOutput( "<br /><br />" );

</cfscript>

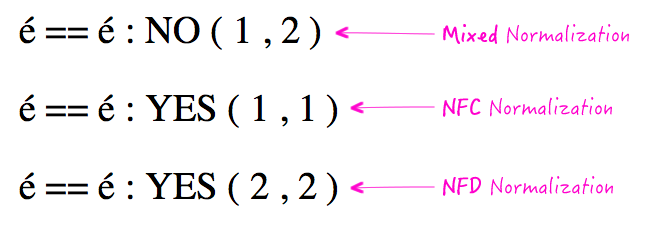

As you can see, I'm using the Java library, java.text.Normalizer, to do the normalization. When we run the above code, we get the following page output:

As you can see, even when the code sequences are different, they all code for the exact same glyph.

Now, let's take the same set of inputs and try to generate physical files:

<cfscript>

// Create two representations of the same unicode character.

precomposed = chr( inputBaseN( "00E9", 16 ) );

combining = ( chr( inputBaseN( "0065", 16 ) ) & chr( inputBaseN( "0301", 16 ) ) );

// Attempt to create a file for each representation.

// --

// CAUTION: On my Mac, this only creates one file with the last fileWrite() winning.

fileWrite( expandPath( "./data/#precomposed#.txt" ), "Precomposed file" );

fileWrite( expandPath( "./data/#combining#.txt" ), "Combining file" );

</cfscript>

As you can see, I'm attempting to create two different files, one with the NFC representation and one with the NFD representation. However, when I run this code on my Mac I don't get two files - I get a single file with the last call to fileWrite() "winning." Of course, when I run this on a Windows computer, I get two different files with the same "visual name."

Are you starting to understand how fun it is to synchronize this kind of data across systems? At the very least, ColdFusion (by way of Java) is awesome enough to have great utility functions baked into the core libraries. So, small victories.

Want to use code from this post? Check out the license.

Reader Comments