Simulating Network Latency In AngularJS With $http Interceptors And $timeout

Over the weekend, while I was exploring routing, caching, and nested views with ngRoute in AngularJS 1.x, I wanted to slow down HTTP responses so that the user could experience Views in a "pending state." But, because I was working on my local system, HTTP requests were completing very quickly, in about 15ms. To get around this, I used an HTTP interceptor to simulate network latency in a controlled fashion.

Run this demo in my JavaScript Demos project on GitHub.

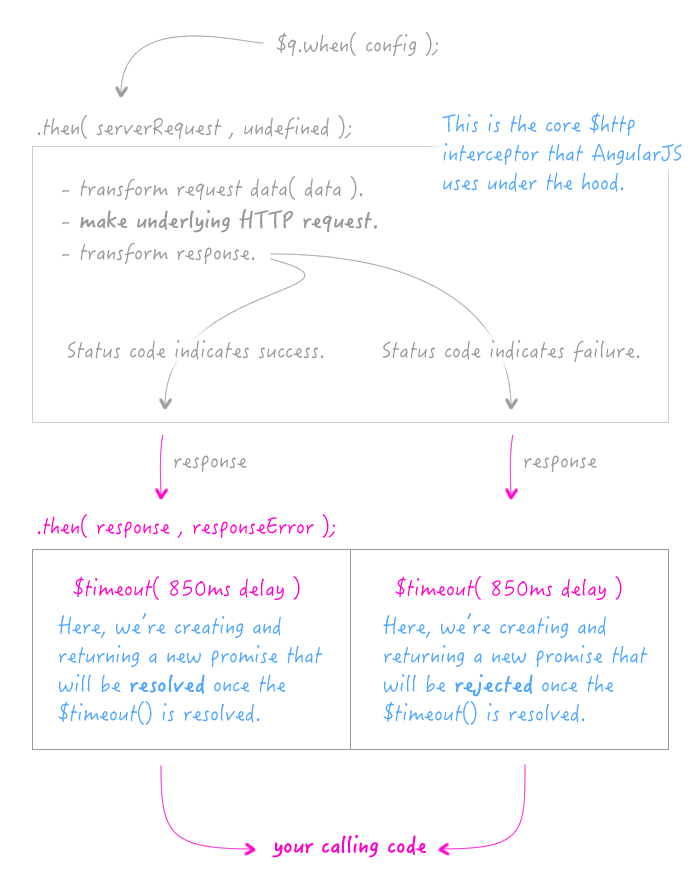

In the past, we've seen that AngularJS implements the $http request pipeline as a promise chain with hooks into both the pre-http and post-http portions of the request. This provides a perfect opportunity to inject a "delay promise" into the overall promise chain.
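To make the idea concrete, here is a minimal, framework-free sketch of an interceptor-style pipeline built from native Promises. It is only loosely modeled on how AngularJS's $http chains its hooks (it is not AngularJS code), and the names `runPipeline`, `wait`, `fakeSend`, and `delayHook` are hypothetical. The point it illustrates: because every hook is attached with `.then()`, any hook that returns a promise pauses the rest of the chain until that promise settles.

```javascript
// Framework-free model of an interceptor pipeline (hypothetical names;
// loosely modeled on AngularJS 1.x $http, not its actual source).

// Resolve after the given number of milliseconds.
function wait(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Run the config through request hooks, the transport, then response hooks.
function runPipeline(config, send, interceptors) {
  let promise = Promise.resolve(config);

  // Pre-http portion: request / requestError hooks.
  for (const hooks of interceptors) {
    if (hooks.request || hooks.requestError) {
      promise = promise.then(hooks.request, hooks.requestError);
    }
  }

  // The "over the wire" portion.
  promise = promise.then(send);

  // Post-http portion: response / responseError hooks.
  for (const hooks of interceptors) {
    if (hooks.response || hooks.responseError) {
      promise = promise.then(hooks.response, hooks.responseError);
    }
  }

  return promise;
}

// Hypothetical transport that answers immediately, like a fast local server.
function fakeSend(config) {
  return Promise.resolve({ status: 200, url: config.url });
}

// A hook that returns a promise stalls the chain until that promise resolves.
const delayHook = {
  response: (response) => wait(100).then(() => response),
};

const startedAt = Date.now();
runPipeline({ url: "./data.json" }, fakeSend, [delayHook]).then((response) => {
  console.log(response.status, "after", Date.now() - startedAt, "ms");
});
```

Nothing about the transport changes; the perceived latency comes entirely from the extra promise returned by the response hook.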

For this demo, I'm hooking into the "response" portion of the workflow, as opposed to the "request" portion; for some reason, that just feels a bit more natural. This works for both successful and failed responses, as long as we use both the "response" and "responseError" hooks:

```html
<!doctype html>
<html ng-app="Demo">
<head>
    <meta charset="utf-8" />

    <title>
        Simulating Network Latency In AngularJS With HTTP Interceptors
    </title>

    <link rel="stylesheet" type="text/css" href="./demo.css" />
</head>
<body ng-controller="AppController">

    <h1>
        Simulating Network Latency In AngularJS With HTTP Interceptors
    </h1>

    <p ng-switch="isLoading">
        <strong>State</strong>:
        <span ng-switch-when="true">Loading http data.</span>
        <span ng-switch-when="false">Done after {{ delta }} milliseconds</span>
    </p>

    <p>
        <a ng-click="makeRequest()">Make an HTTP request</a>
    </p>

    <!-- Load scripts. -->
    <script type="text/javascript" src="../../vendor/angularjs/angular-1.3.13.min.js"></script>
    <script type="text/javascript">

        // Create an application module for our demo.
        var app = angular.module( "Demo", [] );

        // -------------------------------------------------- //
        // -------------------------------------------------- //

        // I control the root of the application.
        app.controller(
            "AppController",
            function( $scope, $http ) {

                // I determine if there is pending HTTP activity.
                $scope.isLoading = false;

                // I keep track of how long it takes for the outgoing HTTP request to
                // return (either in success or in error).
                $scope.delta = 0;

                // I am used to alternate between "200 OK" and "404 Not Found" requests.
                var requestCount = 0;

                // ---
                // PUBLIC METHODS.
                // ---

                // I alternate between making successful and non-successful requests.
                $scope.makeRequest = function() {

                    $scope.isLoading = true;

                    var startedAt = new Date();

                    // NOTE: We are using the requestCount to alternate between requests
                    // that return successfully and requests that return in error.
                    $http({
                        method: "get",
                        url: ( ( ++requestCount % 2 ) ? "./data.json" : "./404.json" )
                    })
                    // NOTE: We are forgoing "resolve" and "reject" handlers because we
                    // only care about when the HTTP response comes back - we don't care
                    // if it came back in error or in success. The finally() callback
                    // receives no arguments.
                    .finally(
                        function handleDone() {

                            $scope.isLoading = false;
                            $scope.delta = ( ( new Date() ).getTime() - startedAt.getTime() );

                        }
                    );

                };

            }
        );

        // -------------------------------------------------- //
        // -------------------------------------------------- //

        // Since we cannot change the actual speed of the HTTP request over the wire,
        // we'll alter the perceived response time by hooking into the HTTP interceptor
        // promise-chain.
        app.config(
            function simulateNetworkLatency( $httpProvider ) {

                $httpProvider.interceptors.push( httpDelay );

                // I add a delay to both successful and failed responses.
                function httpDelay( $timeout, $q ) {

                    var delayInMilliseconds = 850;

                    // Return our interceptor configuration.
                    return({
                        response: response,
                        responseError: responseError
                    });

                    // ---
                    // PUBLIC METHODS.
                    // ---

                    // I intercept successful responses.
                    function response( response ) {

                        var deferred = $q.defer();

                        $timeout(
                            function() {
                                deferred.resolve( response );
                            },
                            delayInMilliseconds,
                            // There's no need to trigger a $digest - the view-model has
                            // not been changed.
                            false
                        );

                        return( deferred.promise );

                    }

                    // I intercept error responses.
                    function responseError( response ) {

                        var deferred = $q.defer();

                        $timeout(
                            function() {
                                deferred.reject( response );
                            },
                            delayInMilliseconds,
                            // There's no need to trigger a $digest - the view-model has
                            // not been changed.
                            false
                        );

                        return( deferred.promise );

                    }

                }

            }
        );

    </script>

</body>
</html>
```
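One subtlety in the interceptor above is that `responseError` must hand back a *rejected* promise; if it resolved instead, the failed request would be treated as recovered and the caller's error handlers would not run. As an aside, since `$timeout` itself returns a promise, the same interceptor could probably be written without `$q.defer()` by chaining off that promise and returning `$q.reject( response )` in the error case. The framework-free sketch below uses native Promises and `setTimeout` in place of `$q` and `$timeout`; `createLatencyInterceptor` and `wait` are hypothetical names, not AngularJS APIs. It shows both paths keeping their original outcome after the delay.

```javascript
// Framework-free sketch of the demo's latency interceptor, using native
// Promises instead of $q / $timeout. Names here are hypothetical.

function wait(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

function createLatencyInterceptor(delayInMilliseconds) {
  return {
    // Success path: wait, then pass the response through unchanged.
    response(response) {
      return wait(delayInMilliseconds).then(() => response);
    },

    // Error path: wait, then re-reject so downstream error handlers still run.
    // Returning `rejection` directly here would turn the failure into a success.
    responseError(rejection) {
      return wait(delayInMilliseconds).then(() => Promise.reject(rejection));
    },
  };
}

// Usage: simulate a 404 that arrives after the artificial delay.
const latency = createLatencyInterceptor(100);
Promise.reject({ status: 404 })
  .then(latency.response, latency.responseError)
  .then(
    () => console.log("unexpected success"),
    (rejection) => console.log("still an error:", rejection.status) // prints "still an error: 404"
  );
```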

I don't have too much more to say about this. I just thought it was a fun use of the underlying $http implementation. And, it's yet another example of how promises make our lives better.

Want to use code from this post? Check out the license.

Reader Comments

If your environment allows it, you can simulate delays or server responses using a ServiceWorker, without requiring any application code modifications:

https://github.com/bahmutov/ng-wedge

Pretty advanced, but it might come in handy.
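For readers curious about the ServiceWorker route, here is a minimal sketch of the general technique (it is not the ng-wedge code): a service worker's `fetch` handler can hold each request for a fixed time before forwarding it to the network. The logic is factored into a hypothetical `delayedFetch` helper so it can be exercised outside a browser; `DELAY_MS` simply mirrors the 850ms used in the demo, and registering the worker script is left out.

```javascript
// Sketch of a service worker that adds artificial latency to every request.
// `delayedFetch` and DELAY_MS are hypothetical; this is not ng-wedge's code.
const DELAY_MS = 850;

function wait(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Wait first, then forward the request using the supplied fetch function.
function delayedFetch(request, fetchFn, delayMs) {
  return wait(delayMs).then(() => fetchFn(request));
}

// Only wire up the listener when a global `self` with addEventListener exists
// (i.e. when this file is loaded as a service worker script).
if (typeof self !== "undefined" && typeof self.addEventListener === "function") {
  self.addEventListener("fetch", (event) => {
    // respondWith() must be called synchronously; it accepts a promise.
    event.respondWith(delayedFetch(event.request, (req) => fetch(req), DELAY_MS));
  });
}
```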

@Gleb,

I'm having a little trouble following the code. I **think** you are redefining the "load()" method with a Function() constructor in order to change its lexical binding to the $http object you are defining inside the wedge? Is that accurate? If so, it's a very clever idea! I don't think I've seen the Function constructor used to redefine context like that.

Hi, very good article. I have a question: what if I need to delay the request itself, for example, to show a toast before sending the request? Thanks!

@Luis,

If you need the delay as part of your application logic, I would probably do that outside of an $http interceptor. Since it is a specific context that triggers the toast item, you don't want to try and incorporate that into a generic $http interceptor.

I don't know the rules of your application; but, I assume there's some sort of Controller or Directive that manages the toast item. Perhaps you could have the toast emit an event, via $rootScope.$emit( "toastClosed" ), when it is closed. Then, you could have some other controller listen for that event and initiate the HTTP request at that time:

```javascript
$rootScope.$on( "toastClosed", function() {

    // ... make the HTTP request

} );
```

You could also do this with promises, or in a host of other ways. It also depends on whether or not you want to pass data around, etc. But, definitely, I wouldn't try to incorporate that into the $http logic itself.
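For the promise-based alternative mentioned above, one minimal sketch, assuming a hypothetical `showToast()` that returns a promise resolved when the toast closes, is to chain the request off that promise, so the delay lives in application logic rather than in a global interceptor. `showToast`, `sendRequest`, and `notifyThenRequest` are stand-ins, not AngularJS APIs; in an AngularJS app, the toast service might build its promise with `$q` and the request would go through `$http`.

```javascript
// Hypothetical toast: shows a message and resolves once it has closed.
function showToast(message, displayMs) {
  console.log("toast:", message);
  return new Promise((resolve) => {
    setTimeout(() => {
      console.log("toast closed");
      resolve();
    }, displayMs);
  });
}

// Hypothetical stand-in for $http; resolves with a fake response.
function sendRequest(url) {
  console.log("request sent:", url);
  return Promise.resolve({ status: 200, url });
}

// The request only starts after the toast's promise has resolved.
function notifyThenRequest(message, url, displayMs = 300) {
  return showToast(message, displayMs).then(() => sendRequest(url));
}

notifyThenRequest("Saving your changes...", "./data.json").then((response) => {
  console.log("response status:", response.status);
});
```

Compared with the event approach, the promise version keeps the "toast, then request" ordering in one place and lets the caller receive the response directly.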