Thinking About Inversion Of Control (IoC) In Node.js

This year, I really want to get serious about building scalable systems with Node.js. And, anytime you move from one context to another, I think it's natural to consider forklifting practices from one context to the next. So, as I expand from AngularJS to Node.js (in terms of JavaScript), I wonder how much of what I love in AngularJS can be applied in a Node.js environment. Specifically, for this post, I am thinking about the Inversion of Control (IoC) and Dependency Injection (DI) for which AngularJS is famous.

In AngularJS, just about everything relies on dependency injection. Each service, and decorator, and interceptor, and resolver, and so on, can leverage dependency injection to "receive" values out of the DI container. But, in Node.js, the typical workflow is quite different - rather than having values injected into a context, each context will "require" its own dependencies.

The require() function is really cool. And, when you get started in Node.js, the require() function makes it super easy to dive right in. But, after coding AngularJS for a few years, the require() function can feel sketchy - like you're forgetting everything that you just learned about loose coupling.

As a thought experiment, I wanted to see what a Node.js module could look like if it resembled something a bit more like an AngularJS factory that depended on Inversion of Control (IoC) rather than the require() function. In AngularJS, a factory is just a function that, when invoked - with dependencies - returns the underlying service. In Node.js, the "module.exports" reference could be used as that factory function.

To play with this idea, I created a Greeter that returns greetings for a given name:

    // Require our vendor libraries.
    var chalk = require( "chalk" );

    // Gather our application services. Each of these require() calls will return a factory
    // that, when invoked, will return the instance of the service we are looking for. We
    // can therefore invoke these factory methods with the necessary dependencies.
    var debuggy = require( "./debuggy" ).call( {}, chalk );
    var greeter = require( "./greeter" ).call( {}, debuggy, "Good morning, %s." );

    // Greet some people already!
    console.log( greeter.greet( "Sarah" ) );
    console.log( greeter.greet( "Joanna" ) );
    console.log( greeter.greet( "Kim" ) );

In this code, we still use the require() function - after all, this is still core to how Node.js works. But, you'll notice that I have two different approaches to require(). The first require(), for "chalk", loads a vendor library the way that you would normally require things in Node. The next two calls to require(), however, are for my custom service Factories. These calls don't return the service - they return the factories that can generate the services. I then invoke the factories and pass-in the necessary dependencies.

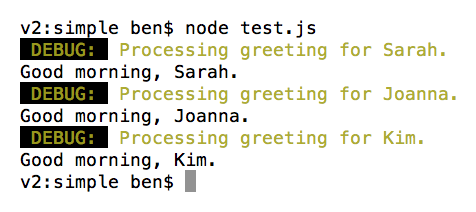

When we run the above code, we get the following terminal output:

Everything works as expected. Now, let's take a look at the two custom services that I am creating using factories and Inversion of Control (IoC). First, the Debuggy service:

NOTE: I am calling this "debuggy" and not "debugger" since "debugger" is already a feature of various JavaScript environments.

    // I provide the debuggy service.
    module.exports = function DebuggyFactory( chalk ) {

        // Return the public API.
        return( debuggy );

        // ---
        // PUBLIC METHODS.
        // ---

        // I log the debugging message to the console. The message supports %s style
        // interpolation as it is being handed off to the console.log().
        function debuggy( message ) {

            // Rewire the first argument to be colorful.
            arguments[ 0 ] = (
                chalk.bgBlack.yellow( " DEBUG: " ) +
                " " +
                chalk.yellow( arguments[ 0 ] )
            );

            console.log.apply( console, arguments );

        }

    };

As you can see, the "module.exports" reference becomes our Factory function. When it is invoked by the calling context, the dependency "chalk" must be passed in. This dependency (and any other dependency in the invocation arguments) is then available to the rest of the module due to JavaScript's lexical scoping: the inner functions close over the factory's arguments.

The factory function is responsible for returning the underlying service. In this case, the entirety of the service is the "debuggy" function. But, as you'll see in the Greeter module, the return value can be a new object that exposes partial functionality through the revealing module pattern.

    // I provide the greeter service.
    module.exports = function GreeterFactory( debuggy, greeting ) {

        testGreeting( greeting );

        // Return the public API.
        return({
            greet: greet
        });

        // ---
        // PUBLIC METHODS.
        // ---

        // I return a greeting message for the given name.
        function greet( name ) {

            ( debuggy && debuggy( "Processing greeting for %s.", name ) );

            // Replace all instances of %s with the given name.
            var message = greeting.replace(
                /%s/gi,
                function interpolateName( $0 ) {
                    return( name );
                }
            );

            return( message );

        }

        // ---
        // PRIVATE METHODS.
        // ---

        // I test the incoming greeting to make sure it is suitable for our purposes.
        function testGreeting( newGreeting ) {

            if ( ! newGreeting || ( newGreeting.indexOf( "%s" ) === -1 ) ) {
                throw( new Error( "InvalidArgument" ) );
            }

        }

    };

Here, the return value - which becomes the service - is a new object that selectively exposes some of the module's functions.

Now, using this approach doesn't preclude the use of require() in the traditional sense. I assume you'd still be able to build tightly coupled components that depend on require() internally but expose an API using the factory-method approach. This simply limits the coupling of the application to smaller, more cohesive units.
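To make that mixed approach a bit more concrete, here is a minimal sketch. The `FormattingGreeterFactory` name and its `logger` argument are hypothetical (they are not part of the code above). The factory leans on `require()` for a core Node.js module (`util`) but still receives its collaborator through the factory arguments. In a real module, the function would be assigned to `module.exports`.

```javascript
// Hypothetical module: tightly coupled to Node's core "util" module via
// require(), but loosely coupled to its logger via injection.
var util = require( "util" );

function FormattingGreeterFactory( logger, greeting ) {

    // Return the public API.
    return({
        greet: greet
    });

    // I format the greeting using util.format() and report it to the logger.
    function greet( name ) {
        var message = util.format( greeting, name );
        logger( message );
        return( message );
    }

}

// Wire it up by hand with a throwaway logger that records what it receives.
var logged = [];
var formattingGreeter = FormattingGreeterFactory(
    function logger( message ) {
        logged.push( message );
    },
    "Good evening, %s."
);

var result = formattingGreeter.greet( "Sarah" );
```

The calling context only has to know about the one injected dependency; the `util` dependency stays an internal detail of the module.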

I've dabbled with Node.js before; but, for all intents and purposes, I'm a Node.js beginner. So, it's quite possible that this all comes off as the ramblings of a mad man. Or, maybe the Node.js community does use something like this. I'm not sure yet. All I know is that I've grown to really enjoy this pattern in AngularJS; and, I'd like to continue thinking about it in a Node.js context.

NOTE: I understand that AngularJS has a whole dependency-injection (DI) framework; all I'm talking about here is Inversion of Control (IoC) in which I'm implementing the DI manually.


Reader Comments

Is there a reason to do:

var debuggy = require( "./debuggy" ).call( {}, chalk );

var greeter = require( "./greeter" ).call( {}, debuggy, "Good morning, %s." );

Wouldn't

var debuggy = require( "./debuggy" )( chalk );

var greeter = require( "./greeter" ).( debuggy, "Good morning, %s." );

Work the same?

@Julian,

Sorry, this:

var greeter = require( "./greeter" ).( debuggy, "Good morning, %s." );

Should be this:

var greeter = require( "./greeter" )( debuggy, "Good morning, %s." );

@Julian,

Yeah, that's just a stylistic choice. There's something I don't like about parentheses right up next to each other:

foo( something )( somethingElse )

I like to have *something* between them. This is just 100% personal preference, though. The only substantive difference when using .call() is that I have the opportunity to provide a different this-binding. I *believe* that if you put the parentheses back-to-back, it will execute in the global context.

So, inside of the factory method, if you were to `console.log( this )`, mine would be "{}" and yours would be the Global object.
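The difference can be checked with a small sketch. The two stand-in factories below are hypothetical and are built with the `Function` constructor only so that each one's strictness depends on its own body rather than on the surrounding file. One extra detail worth hedging on: if the factory runs in strict mode, a back-to-back invocation gets `undefined` rather than the global object.

```javascript
// Built with the Function constructor so that each function's strictness is
// determined only by its own body, not by the file it happens to live in.
var sloppyFactory = new Function( "return( this );" );
var strictFactory = new Function( "'use strict'; return( this );" );

var context = {};

// .call() lets the caller choose the this-binding.
var viaCall = sloppyFactory.call( context );

// Back-to-back parentheses in non-strict code fall back to the global object.
var viaParens = sloppyFactory();

// The same invocation of a strict-mode function leaves "this" undefined.
var viaStrictParens = strictFactory();
```

Since neither factory in the post touches `this`, both invocation styles produce the same service.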

@Ben,

I was curious so I made a quick test just to make sure.

https://gist.github.com/jgautier/dd6877447c1188f12067

Just to comment in general on some other stuff in my experience using node. I have seen this pattern used but if the only reason why you are injecting is to use mock objects in your unit tests then I find using something like https://github.com/jhnns/rewire is a bit cleaner. This allows you to keep your requires but then override them in your tests.

@Julian,

To be honest, I don't do much testing at all; well, not programmatic testing - I do a lot of manual testing. While I think that is a good "story", it's not generally my driving force. That said, I tend to agree with the idea that more testable code is probably better organized code.

Now, this might sound crazy; but, having to manually inject dependencies has friction - but, that's a "Good Thing." I believe that the more you have to inject into a single module, the more likely it is that something is going wrong. The pain of having to manually inject a lot of things should be an indicator that something might not be organized properly.

While I'm new to Node, I'm not new to the pain of overly complex code :D When it's super easy to require() anything you want, it's too easy to suddenly have code that requires 20+ things, which *may* be a problem.

That said, I think you do have to draw a line between "core" and "non-core" modules. For example, if I want all my stuff to operate on Promises, I don't necessarily want to inject "Q" into *everything*. If that's a core part of the app - not from a business standpoint but from a structure standpoint - I think that's likely OK to require(). Just as, no one injects "String" or "Array" - they are foundational.

But, again, I'm just riffing here on the theoretical - I'm not writing any production Node yet. I think as a next step, I'll try to rewrite one of my ColdFusion projects to use Node.js.

@Ben,

I completely agree with this. I find it very uncommon that there is a dependency that needs to be injected and for the cases where it does need to happen then this pattern works great. I also like it because it does not require you to pull in a whole separate framework or library just to do the injection for the few cases where it needs to happen.

I bring up the unit testing because I think this is the case that a lot of developers choose as a reason for DI, and it's silly to reorganize your code just to support your unit tests, which is where I think libraries like rewire come in.

Coming from .NET, a place where you see IoC everywhere, I had the same idea. I implemented it, and it has worked wonderfully for me so far; I use it for everything I can.

The main reason I've used it, apart from the great decoupling, is unit testing. It makes it easy to build a kind of mock.
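As a rough sketch of that testing benefit, the snippet below passes a hand-rolled stub into a factory instead of the real debuggy service. The `GreeterFactory` here is a simplified stand-in for the one in the post, inlined so the snippet runs on its own, and `debuggyStub` is a hypothetical name.

```javascript
// Simplified stand-in for the post's GreeterFactory, inlined for illustration.
function GreeterFactory( debuggy, greeting ) {

    return({
        greet: greet
    });

    function greet( name ) {
        ( debuggy && debuggy( "Processing greeting for %s.", name ) );
        return( greeting.replace( /%s/g, function() { return( name ); } ) );
    }

}

// The stub records every call instead of writing to the console.
var calls = [];

function debuggyStub() {
    calls.push( Array.prototype.slice.call( arguments ) );
}

// No require() interception is needed: the test simply hands in the stub.
var greeterUnderTest = GreeterFactory( debuggyStub, "Good morning, %s." );
var greetingForKim = greeterUnderTest.greet( "Kim" );
```

After the call, `calls` holds the arguments that the greeter passed to its debugging dependency, which a test can then inspect.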

@Julian,

I've been trying to play around with this some more lately; and, what I'm running into is the fact that I'm just not very good at thinking about code organization :D This is definitely going to be an uphill battle. But, I'm trying to work with a real-world(ish) sample project to help me learn.

@Roy,

Thanks for the positive feedback. It sounds like favoring IoC is definitely a positive approach, although the various implementations may be different.

Thanks for an important post. Unfortunately, when I try to discuss IoC on Node, I sometimes face this "ahhh, don't bother us with those Old World ideas"... Your code pin-points how it should look in the simple clean world of Node :)

Still there are some points which might justify additional thought and/or frameworks:

(1) Sub-contexts (for large applications), e.g. one file would wire up "chalk","debuggy","greeter", while another file wires up "payments","creditCards","receipts" ...

(2) Registry of all available components: something like {"chalk":chalk, "debuggy":debuggy, "greeter":greeter}

(3) init/destroy: e.g on shutdown I might want to iterate over the Registry above and 'destroy' each component.
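The three points above could be sketched without any framework, roughly as follows. Every name here (`LoggerFactory`, `PaymentsFactory`, `wirePaymentsContext`, `destroyAll`, and the `destroy()` hook) is hypothetical and chosen only for illustration.

```javascript
// Hypothetical component factories. A service may expose an optional
// destroy() hook; none of these names come from a real library.
function LoggerFactory() {
    var lines = [];
    return({
        log: function( line ) { lines.push( line ); },
        lines: lines
    });
}

function PaymentsFactory( logger ) {
    var open = true;
    return({
        isOpen: function() { return( open ); },
        destroy: function() {
            open = false;
            logger.log( "payments destroyed" );
        }
    });
}

// (1) A sub-context: one function wires up a related group of components.
function wirePaymentsContext( registry ) {
    registry.logger = LoggerFactory();
    registry.payments = PaymentsFactory( registry.logger );
    return( registry );
}

// (2) The registry is a plain object keyed by component name.
var registry = wirePaymentsContext( {} );

// (3) On shutdown, walk the registry in reverse wiring order and destroy
// anything that knows how to clean up after itself.
function destroyAll( components ) {
    Object.keys( components ).reverse().forEach(
        function( name ) {
            var component = components[ name ];
            if ( component && ( typeof component.destroy === "function" ) ) {
                component.destroy();
            }
        }
    );
}

destroyAll( registry );
```

Destroying in reverse order means a component is torn down before the dependencies it was wired with, which is why `payments` can still log during its own `destroy()`.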

There's this popular discussion about Node dependency injection frameworks: http://www.mariocasciaro.me/dependency-injection-in-node-js-and-other-architectural-patterns but some of the mentioned frameworks seemed to have a strange interpretation of IoC, more like ServiceLocators... So I'm still investigating.

I have developed a lightweight inversion of control (IoC) container for TypeScript, JavaScript & Node.js apps. You can learn more about it at http://blog.wolksoftware.com/introducing-inversifyjs

@Pelit,

The more I get into Node.js, the more difficult it is to think about DI in the traditional sense. At least, coming from an AngularJS world. In Angular, the DI is part of the instantiation, meaning the "things" are injected as constructor arguments. In Node.js, however, there is a hard line between instantiation (ie, resolving the module and wiring the "Exports") and configuration. This basically rules out constructor-based injection, unless you want to store Singletons *outside* of the module (ie, explicitly keep them in some cache).

You almost need a mix of both because there is just a vast landscape out there of modules. For example, you probably are totally fine just require()'ing lodash - no need to keep that instantiated and stored because it doesn't really have state. But, something like an AWS-SDK Client - that has state and probably needs to be cached. Or, better yet, a MongoDB driver that needs to maintain a pool of connections to the database. That has *serious* state.

The MongoDB example is something that I've been playing with, where my main bootstrap file (server.js), will require() and then configure the MongoDB pool in two different steps:

require( "./mongo-gateway" ).connect( options ).then( bindServerToPort );

Here, the instantiation (require) and the configuration (connect) are two different steps managed by the bootstrapping of the app.
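A minimal sketch of what such a two-step module might look like is shown below. The `mongoGateway` object is a hypothetical stand-in for the "./mongo-gateway" module, and the connection is faked so the snippet runs without a database; a real gateway would wrap an actual driver.

```javascript
// Hypothetical stand-in for a "./mongo-gateway" module. The connection is
// faked here; a real gateway would delegate to a database driver.
var mongoGateway = (function() {

    var connection = null;

    return({
        connect: connect,
        getConnection: getConnection
    });

    // Configuration step: called once by the bootstrap file.
    function connect( options ) {
        connection = { uri: options.uri, connected: true };
        return( Promise.resolve( connection ) );
    }

    // Every other module reads the already-configured connection.
    function getConnection() {
        if ( ! connection ) {
            throw( new Error( "NotConnected" ) );
        }
        return( connection );
    }

})();

// Bootstrap: the module is instantiated above, then configured, and only
// then does the application start serving.
var ready = mongoGateway
    .connect({ uri: "mongodb://localhost/example" })
    .then(
        function bindServerToPort( conn ) {
            return( "listening with " + conn.uri );
        }
    );
```

Modules that call `getConnection()` before the bootstrap has called `connect()` get a loud error rather than a half-configured gateway.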

Anyway, just some more thoughts after another few months of tinkering.

You're on the right path. When I first started writing Node.js code, my immediate reaction was "cool, require is just a lightweight DI container".

Then I found Angular, and realized that a DI container in Javascript could be so much more.

And then I found promises. Which provide asynchronous behavior in a composable way.

The problem though with require is that it works great for the app, but not so great for testing. It really fails at setting up a mock environment for your components, since they are depending on require, and require doesn't have any built-in support for loading/mocking different libraries when in a test mode vs. production mode.

If you put all this together, you get my really lightweight majic library.

https://www.npmjs.com/package/majic

One thing you might really like about it is auto-scanning, a feature I love from the Spring world (arguably the best DI container in the world?). This lets you organize your code one way today, but a completely different way tomorrow. Start simple, make changes, and make more changes. Majic takes care of finding everything you need automatically, so code structure is for humans, not the computers!

@Ben,

> But, something like an AWS-SDK Client - that has state and probably needs to be cached. Or, better yet, a MongoDB driver that needs to maintain a pool of connections to the database. That has *serious* state.

require() actually has state. If you write a db module like this, you can require it in all of your modules, and they will all share the same instance:

db.js

----------

    var db = require('mongodb');
    var mongoUri = process.env.MONGO_URI;

    module.exports = new Promise(function(resolve, reject) {
        db.connect(mongoUri, function(err, conn) {
            if (err) {
                reject(err);
            } else {
                resolve(conn);
            }
        });
    });

---------

    var db = require('./db');

    db.then(function(conn) {
        // do something with conn
    });

require() caches the module.exports object, and the cached object is returned in all subsequent require() calls.
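The caching behavior can be observed directly. The sketch below writes a throwaway module to a temp directory (the file name and its `hits` property are made up for illustration), requires it twice, and then clears its entry from `require.cache` to force a fresh evaluation.

```javascript
var fs = require( "fs" );
var os = require( "os" );
var path = require( "path" );

// Write a throwaway module to a temp directory (illustration only).
var tempDir = fs.mkdtempSync( path.join( os.tmpdir(), "require-cache-" ) );
var modulePath = path.join( tempDir, "counter.js" );

fs.writeFileSync(
    modulePath,
    "module.exports = { loadedAt: Date.now(), hits: 0 };"
);

// Both calls resolve to the same file, so the module body runs only once
// and both variables point at the same exports object.
var first = require( modulePath );
var second = require( modulePath );

first.hits++;

var sameInstance = ( first === second );
var sharedHits = second.hits;

// Removing the cache entry forces a fresh evaluation on the next require().
delete require.cache[ require.resolve( modulePath ) ];

var third = require( modulePath );
var freshInstance = ( third !== first );
```

This shared instance is what makes the db.js approach work, and it is also why swapping in a different instance for a test means either touching the cache or injecting the dependency.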

I think it is a mistake to compare node's require with Angular's IoC . They each have different purposes.

A Node module is essentially the same thing as a Java .jar file, a Ruby .gem file, or a .NET assembly. Using require in a Node application is essentially the same thing as doing import in a Java application. Further, npm is equivalent to Maven in the Java world.

Node doesn't make any assumptions about how you should manage your dependencies, and it would be a mistake if it did. Node's power comes from its flexibility.

Angular's IoC and DI framework force a certain type of development style. Angular also seems to have its own package management philosophy.

One isn't necessarily better than the other. They are simply trying to do different things.

I have noticed that angular developers tend to manage their dependencies better than node developers. I suspect that is because Angular forces people to manage their dependencies in a certain way.

You can still manage dependencies in node. You simply have to have a dependency management philosophy and the discipline to follow it.

In the Object Oriented world, we have 6 package principles:

* Reuse/Release Equivalency Principle

* Common Closure Principle

* Common Reuse Principle

* Acyclic Dependency Principle

* Stable Dependency Principle

* Stable Abstraction Principle

Personally, I feel that node developers would build better applications if they followed them, too.

I'm working on InversifyJS 2.0: A powerful IoC container for JavaScript apps powered by TypeScript http://blog.wolksoftware.com/introducing-inversify-2

Hi Ben:

Just in case you get time - you could review a DI framework I built by modifying Architect.js (I call the fork Archiejs).

Archiejs is a DI framework for Node.js, and I have been using it for a while on larger Node.js projects. It allows you to break your Node.js project into loosely coupled modules that are injected into each other during application startup.

Read a bit more here:

https://docs.google.com/document/d/1NgcQiNhoOaHq70iNOumNSdYaS3cOmq7TRx7rTDgGUno/edit?usp=sharing

Github: https://github.com/archiejs

I have recently tested Simple-dijs and it resolves these problems - https://github.com/avighier/simple-dijs